library(janitor)

library(naniar)

library(patchwork)

library(broom)

library(Epi)

library(glue)

library(gt)

library(infer)

library(readxl)

library(xfun)

library(easystats)

library(tidyverse)

## Load Love-431 script

source("data/Love-431.R")

## Global options

knitr::opts_chunk$set(comment=NA)

theme_set(theme_bw())431 Project B Sample Study 1 Report

Remember that each subsection should include at least one complete sentence explaining what you are doing, specifying the variables you are using and how you are using them, and then conclude with at least one complete sentence of discussion of the key conclusions you draw from the current step, and a discussion of any limitations you can describe that apply to the results.

If you want to download the Quarto code I used to create this document to use as a template for your own work, click on the Code button near the title of this Sample Study.

In general, DO NOT use my exact words (other than the section and subsection headings) included in this sample report in your project. Rewrite everything to make it relevant to your situation. Do not repeat my instructions back to me.

- One partial exception is that I have demonstrated the interpretation of point estimates (and in some cases, like the paired tests in Analysis A, and the independent sample tests in Analysis B, the confidence intervals, too) using language that I would be happy to see you use.

1 Setup and Data Ingest

1.1 Initial Setup and Package Loads

1.2 Loading the Raw Data into R

This document demonstrates a variety of things required in your Project B Study 1. We will demonstrate ideas using data from a 2015 class survey, gathered in three data files available on the 431-data website. The files are called:

projectB-study1-demo-survey-2015a.xlsxprojectB-study1-demo-survey-2015b.csvprojectB-study1-demo-survey-2015c.csv

After merging, the complete data set should include data on 21 variables for 53 subjects.

- I’m going to use

clean_names()from thejanitorpackage before I do the merging. It would also be OK to do this after the merge is complete. - I’m also going to tell R to interpret both a blank “” and an “NA” in a cell of the Excel sheet as indicating a missing value, since that’s what I need, and since that’s what

read_csvalready does, by default.

sur15_raw_a <- read_excel("data/projectB-study1-demo-survey-2015a.xlsx",

na = c("", "NA")) |>

janitor::clean_names()

sur15_raw_b <- read_csv("data/projectB-study1-demo-survey-2015b.csv") |>

janitor::clean_names()

sur15_raw_c <- read_csv("data/projectB-study1-demo-survey-2015c.csv") |>

janitor::clean_names()1.3 Contents of the Raw Tibbles

We have three tibbles now, which (once we have merged them together) will contain complete data on all 53 subjects for all 21 variables.

sur15_raw_acontains data on all 21 variables for the first 20 subjects.

dim(sur15_raw_a)[1] 20 21sur15_raw_bcontains data on 9 of the variables (including the subject ID code:s.id) for the other 33 subjects.

dim(sur15_raw_b)[1] 33 9sur15_raw_ccontains data on 13 of the variables (also including the subject ID code:s.id) for the same 33 subjects that are insur15_raw_b.

dim(sur15_raw_c)[1] 33 131.4 Two Merging Steps

- Join the columns in

sur15_raw_band insur15_raw_cto obtain a new tibble, which I’ll callsur15_last33, holding information on all 21 variables for the last 33 subjects.

sur15_last33 <- inner_join(sur15_raw_b, sur15_raw_c, by = "s_id")

dim(sur15_last33)[1] 33 21- Combine the rows together from

sur15_raw_a(which has the first 20 subjects) andsur15_last33(which has the other 33 subjects) to create a tibble calledsur15_mergedwhich has all 21 variables for all 53 subjects.

sur15_merge <- bind_rows(sur15_raw_a, sur15_last33)

dim(sur15_merge)[1] 53 21OK. We have 53 subjects and 21 variables, as expected.

1.5 Checking the Merge

We need to perform two checks here.

- First, we should check to ensure that the number of distinct (unique) subject identification codes (shown below) matches the number of rows. Those two values should be identical. Are they?

identical(n_distinct(sur15_merge$s_id),

sur15_merge |> nrow())[1] TRUEExcellent.

- Second, we should also check that we haven’t added any new variables. The

sur15_raw_atibble included all of the variable names we should have in the final result. Do the names insur15_raw_amatch the names in our merged tibble exactly?

identical(names(sur15_merge),

names(sur15_raw_a))[1] TRUEAll right. Our merge was successful.

1.6 Selecting only the variables we’ll use

The sur15_merge data includes some variables we don’t need, so we’ll prune down to the 11 variables we’ll actually use in the analyses we’ll do. This should certainly include the subject identification code, so we’ll include that, and also switch it to a character representation instead of numeric.

sur15_m <- sur15_merge |>

mutate(s_id = as.character(s_id)) |>

select(s_id, r_pre, r_now, height, weight,

comfort_431, grades, r_before, english,

medium, fiction)2 Cleaning the Data

2.1 Our Survey Items

The 10 survey items that we will actually use in this demonstration are listed below.

2.1.1 Rating Variables

For each of these, subjects gave a response between 0 and 100 indicating their agreement with the statement as presented. The scale was 0 = Strongly disagree, 100 = Strongly agree.

r_pre: Prior to taking 431, I was totally confident and comfortable with using R. (0 = Strongly Disagree, 100 = Strongly Agree)r_now: Right now, I am totally confident and comfortable with using R. (0 = Strongly Disagree, 100 = Strongly Agree)comfort_431: I am very comfortable with my understanding of the material discussed so far in 431.

2.1.2 Other Quantitative Variables

height: What is your height, in inches?weight: What is your weight, in pounds?

2.1.3 Binary Variables

r_before: Before taking 431, had you ever used R before? (Yes, No)english: Is English the language you speak better than any other? (Yes, No)

2.1.4 Multi-Categorical Variables

grades: In your graduate and undergraduate educational experience, which of the following types of assignments have you received the HIGHEST grades for?- Available responses were “A. Individual Assignments”, “B. Partner Assignments (you and 1 other student)”, and “C. Group Assignments (you and 2 or more others)”.

medium: Which medium do you use most to get your fictional stories (containing plot)?- Available Responses: “A. Movies”, “B. Television”, “C. Print (including books, comics, etc.)”, and “D. Other”.

fiction: Which type of fictional stories do you consume most?- Available Responses: “A. Comedy”, “B. Drama”, “C. Action”, “D. Horror / Thriller”, and “E. Fantasy / Science Fiction”.

Our analytic tibble will be called sur15 for this demonstration.

- This tibble will need to contain information developed from the variables listed above, plus the subject identifying code

s_id. - As we add variables to the analytic tibble, we’ll also check to see that all of the values fall in a reasonable range (with no results that fall outside of the parameters of how we are measuring the results) and we’ll identify whether there are any missing values.

Note that we’ve already checked our subject identification codes to ensure that we have no missing values there and that we have a unique identifier for each row in the data back when we did the merge.

2.2 Checking our Quantitative Variables

We have five quantitative variables. We want to check the range (minimum and maximum) plus for each, to ensure that we have no impossible or missing values.

sur15_m |>

select(r_pre, r_now, comfort_431, height, weight) |>

data_codebook()select(sur15_m, r_pre, r_now, comfort_431, height, weight) (53 rows and 5 variables, 5 shown)

ID | Name | Type | Missings | Values | N

---+-------------+---------+----------+--------------+---

1 | r_pre | numeric | 0 (0.0%) | [0, 90] | 53

---+-------------+---------+----------+--------------+---

2 | r_now | numeric | 0 (0.0%) | [0, 95] | 53

---+-------------+---------+----------+--------------+---

3 | comfort_431 | numeric | 0 (0.0%) | [15, 100] | 53

---+-------------+---------+----------+--------------+---

4 | height | numeric | 0 (0.0%) | [22.83, 217] | 53

---+-------------+---------+----------+--------------+---

5 | weight | numeric | 0 (0.0%) | [1, 320] | 53

---------------------------------------------------------- For the three rating variables, all values are in the range [0, 100], as they must be.

- However, the

heightrange doesn’t seem reasonable. Withheightmeasured in inches, do we really think there should be a height as small as 22.83 inches? - The

weightminimum is also a problem. Is 1 pound a reasonable value? - We also want to create a body mass index from the

heightandweightdata

2.2.1 Combining height and weight into bmi and Specifying NA for Implausible Values

We will calculate bmi (body-mass index) from the available height (inches) and weight (pounds) data. The BMI formula for inches and pounds is available at http://www.bmi-calculator.net/bmi-formula.php. A reasonable range for BMI values is probably about 15 to 50.

sur15_m <- sur15_m |>

mutate(bmi = 703 * weight / height^2)

sur15_m |> reframe(lovedist(bmi))# A tibble: 1 × 10

n miss mean sd med mad min q25 q75 max

<int> <int> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 53 0 26.2 23.5 23.2 3.25 0.159 21.2 25.5 189.The minimum calculated bmi value seems impossibly low, and the highest bmi seems impossibly high. Let’s look at the heights and weights involved.

sur15_m |> select(s_id, height, weight) |> arrange(height) |> head()# A tibble: 6 × 3

s_id height weight

<chr> <dbl> <dbl>

1 504 22.8 140

2 530 61 140

3 513 61.4 112

4 519 62 126

5 528 62 155

6 540 62 140sur15_m |> select(s_id, height, weight) |> arrange(height) |> tail()# A tibble: 6 × 3

s_id height weight

<chr> <dbl> <dbl>

1 532 72 160

2 549 72 220

3 506 73 145

4 507 74 320

5 535 74 172

6 529 217 165sur15_m |> select(s_id, height, weight) |> arrange(weight) |> head()# A tibble: 6 × 3

s_id height weight

<chr> <dbl> <dbl>

1 550 66.5 1

2 521 63 107

3 512 65 112

4 513 61.4 112

5 552 65 120

6 526 64 121sur15_m |> select(s_id, height, weight) |> arrange(weight) |> tail()# A tibble: 6 × 3

s_id height weight

<chr> <dbl> <dbl>

1 505 70 178

2 516 71 200

3 511 65 202

4 546 69 210

5 549 72 220

6 507 74 320The subjects with heights of 22.83 inches and 217 inches are implausible, and the subject with weight 1 pound is also not reasonable. We want to focus on actually plausible results.

- A reasonable guess is that no one in the class was less than about 122 centimeters, or 4 feet tall (48 inches) nor were they greater than about 213 centimeters, or 7 feet tall (84 inches) so we’re going to change any values outside that range to

NA. - Similarly, it seems reasonable to assume that no one in the class was below 80 pounds (36.3 kg) or above 400 pounds (181.4 kg) so again, we’ll regard any values we see outside that range is implausible and change them to

NA.

I’ll do this by creating new variables height_r and weight_r where the _r (meaning “revised”) indicates to me that I’ve revised the original variable in some way without adding a lot of characters to its name.

sur15_m <- sur15_m |>

mutate(height_r = replace(height, height < 48 | height > 84, NA),

weight_r = replace(weight, weight < 80 | weight > 400, NA)) |>

mutate(bmi = 703 * weight_r / height_r ^2)

sur15_m |> select(height_r, weight_r, bmi) |>

data_codebook()select(sur15_m, height_r, weight_r, bmi) (53 rows and 3 variables, 3 shown)

ID | Name | Type | Missings | Values | N

---+----------+---------+----------+----------------+---

1 | height_r | numeric | 2 (3.8%) | [61, 74] | 51

---+----------+---------+----------+----------------+---

2 | weight_r | numeric | 1 (1.9%) | [107, 320] | 52

---+----------+---------+----------+----------------+---

3 | bmi | numeric | 3 (5.7%) | [18.64, 41.08] | 50

--------------------------------------------------------So now, we have 2 missing values of height_r, 1 missing value of weight_r and we have calculated BMI results, with 3 missing values, and our ranges (minimum and maximum) for each of these variables now look OK.

2.3 Checking our Binary Variables

We have two binary variables.

sur15_m |> select(english, r_before) |> glimpse()Rows: 53

Columns: 2

$ english <chr> "No", "Yes", "Yes", "No", "Yes", "Yes", "Yes", "No", "Yes", "…

$ r_before <chr> "Yes", "No", "No", "Yes", "Yes", "No", "Yes", "Yes", "No", "N…I’d like those to be factors in R, rather than characters.

sur15_m <- sur15_m |>

mutate(r_before = factor(r_before),

english = factor(english))

sur15_m |> count(r_before, english)# A tibble: 4 × 3

r_before english n

<fct> <fct> <int>

1 No No 10

2 No Yes 18

3 Yes No 8

4 Yes Yes 17OK. No missingness, and no values out of the range of our expectations. Good.

2.4 Checking our Multi-Category Variables

For each of our multi-categorical variables, I’ll run a quick tabyl to see if we have any surprising results or missing values. Then I’ll revise each of them (as needed) to have more suitable (mostly, shorter) level names. In addition to checking for missingness and inappropriate values, we want to collapse some categories, or adjust names or labeling to mirror what we need in our analyses.

2.4.1 The grades variable

sur15_m |>

tabyl(grades) grades n percent valid_percent

A. Individual Assignments 40 0.75471698 0.7692308

B. Partner Assignments (you and 1 other student) 6 0.11320755 0.1153846

C. Group Assignments (you and 2 or more others) 6 0.11320755 0.1153846

<NA> 1 0.01886792 NAFor grades, we want to create a new factor called grades_r which is a factor and which has shorter level names, specifically: Individual, Partner and Group, in that order. We’ll use the fct_recode function from forcats:

sur15_m <- sur15_m |>

mutate(grades_r = fct_recode(factor(grades),

"Individual" = "A. Individual Assignments",

"Partner" = "B. Partner Assignments (you and 1 other student)",

"Group" = "C. Group Assignments (you and 2 or more others)"))

# sanity check to ensure we coded correctly

sur15_m |> count(grades, grades_r)# A tibble: 4 × 3

grades grades_r n

<chr> <fct> <int>

1 A. Individual Assignments Individual 40

2 B. Partner Assignments (you and 1 other student) Partner 6

3 C. Group Assignments (you and 2 or more others) Group 6

4 <NA> <NA> 1- That looks like we’ve correctly renamed the values.

- For this demonstration, we’ll allow counts as low as 5 for individual levels of a categorical variable, because of the small sample size, so I won’t collapse the levels at all.

- We have a missing value here, so we’ll need to deal with that later.

2.4.2 The medium variable

sur15_m |>

tabyl(medium) medium n percent

A. Movies 17 0.32075472

B. Television 22 0.41509434

C. Print (including books, comics, etc.) 9 0.16981132

D. Other 5 0.09433962- We have no missing values, so that’s good.

- In this demonstration, we will require that each category have at least 5 responses, so while this just barely meets that standard, I think I will go ahead and collapse the variable down to just three categories.

For the medium variable, we want to collapse the Print and Other levels to form a three category variable (with levels Movies, TV and Other) called medium_r.

sur15_m <- sur15_m |>

mutate(medium_r = fct_recode(factor(medium),

"Movies" = "A. Movies",

"TV" = "B. Television",

"Other" = "C. Print (including books, comics, etc.)",

"Other" = "D. Other"))

sur15_m |> count(medium, medium_r) # sanity check# A tibble: 4 × 3

medium medium_r n

<chr> <fct> <int>

1 A. Movies Movies 17

2 B. Television TV 22

3 C. Print (including books, comics, etc.) Other 9

4 D. Other Other 5OK. Looks good now.

2.4.3 The fiction variable

sur15_m |>

tabyl(fiction) fiction n percent

A. Comedy 18 0.33962264

B. Drama 15 0.28301887

C. Action 5 0.09433962

D. Horror / Thriller 1 0.01886792

E. Fantasy / Science Fiction 14 0.26415094- No signs of missing values, so that’s good.

- With only one value in category D and only 5 in category C, we will need to do some collapsing to use this variable later.

2.4.4 Collapsing and recoding levels of fiction

For the fiction variable, we want to form a four category variable (with levels Comedy, Drama, Fantasy/SF, Other) called fiction_r.

sur15_m <- sur15_m |>

mutate(fiction_r = fct_recode(factor(fiction),

"Comedy" = "A. Comedy",

"Drama" = "B. Drama",

"Fantasy/SF" = "E. Fantasy / Science Fiction",

"Other" = "C. Action",

"Other" = "D. Horror / Thriller"))

sur15_m |> count(fiction, fiction_r) # sanity check# A tibble: 5 × 3

fiction fiction_r n

<chr> <fct> <int>

1 A. Comedy Comedy 18

2 B. Drama Drama 15

3 C. Action Other 5

4 D. Horror / Thriller Other 1

5 E. Fantasy / Science Fiction Fantasy/SF 14Actually, I’d like to reorder fiction_r to put Other last.

sur15_m <- sur15_m |>

mutate(fiction_r = fct_relevel(fiction_r,

"Comedy", "Drama",

"Fantasy/SF", "Other"))OK. Let’s see what we have now…

sur15_m |>

tabyl(medium_r, fiction_r) |>

gt()| medium_r | Comedy | Drama | Fantasy/SF | Other |

|---|---|---|---|---|

| Movies | 4 | 5 | 6 | 2 |

| TV | 11 | 5 | 2 | 4 |

| Other | 3 | 5 | 6 | 0 |

OK. I wish we didn’t have that zero cell in the cross-tabulation, but we’ll leave it alone, rather than collapsing further, given our small number of observations in this demonstration.

2.5 Creating our Analytic Tibble

So our analytic tibble, which I’ll call sur15 should contains only the twelve variables that appear in our code book.

sur15 <- sur15_m |>

select(s_id, r_pre, r_now, comfort_431,

height_r, weight_r, bmi,

r_before, english,

grades_r, medium_r, fiction_r)2.6 List of Missing Values

We can count the number of missing observations in each variable, with …

miss_var_summary(sur15)# A tibble: 12 × 3

variable n_miss pct_miss

<chr> <int> <num>

1 bmi 3 5.66

2 height_r 2 3.77

3 weight_r 1 1.89

4 grades_r 1 1.89

5 s_id 0 0

6 r_pre 0 0

7 r_now 0 0

8 comfort_431 0 0

9 r_before 0 0

10 english 0 0

11 medium_r 0 0

12 fiction_r 0 0 We can see the subjects who have missing values in several ways, including…

miss_case_summary(sur15)# A tibble: 53 × 3

case n_miss pct_miss

<int> <int> <dbl>

1 4 2 16.7

2 29 2 16.7

3 50 2 16.7

4 16 1 8.33

5 1 0 0

6 2 0 0

7 3 0 0

8 5 0 0

9 6 0 0

10 7 0 0

# ℹ 43 more rowssur15[which(!complete.cases(sur15)),]# A tibble: 4 × 12

s_id r_pre r_now comfort_431 height_r weight_r bmi r_before english

<chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <fct> <fct>

1 504 20 80 50 NA 140 NA Yes No

2 516 0 50 70 71 200 27.9 No Yes

3 529 25 75 85 NA 165 NA No Yes

4 550 0 0 15 66.5 NA NA No No

# ℹ 3 more variables: grades_r <fct>, medium_r <fct>, fiction_r <fct>In our sample of respondents, we have:

- 49 subjects with no missing values,

- 1 subject (

s_id= 516) who is missinggrades_r, - 2 subjects (

s_id= 504 and 529) who are missingheight_randbmi, and - 1 subject (

s_id= 550) who is missingweight_randbmi.

2.7 Filtering to Complete Cases

Now, in Study 1, I’ve asked you to filter your data to complete cases throughout. So I’ll do that now, and wind up with just 49 subjects in the data. I’ll include an explicit statement here (and you should, too) that I’m assuming Missing Completely at Random for the missing values in these data.

According to the mcar_test() function (part of the naniar package) which runs Little’s missing completely at random test1, this is a debatable assumption (we’d like to see a high \(p\) value here) but we’ll make it anyway for Study 1.

sur15 |> mcar_test()# A tibble: 1 × 4

statistic df p.value missing.patterns

<dbl> <dbl> <dbl> <int>

1 42.9 31 0.0763 4sur_15 <- sur15 |> drop_na()

nrow(sur_15)[1] 493 Codebook and Data Description

3.1 Variable Descriptions

The 12 variables in our tidy data set sur_15 for this demonstration are as follows. The Type column indicates the number of levels in each categorical (factor) variable. Recall that we had missing data in height_r, weight_r, bmi and grades_r but I’ve filtered that away, so we have no missingness left now. As for the Type information, I’m using Quant to indicate quantitative variables, and Cat-x indicates a categorical variable (factor) with x levels.

| Variable | Type | Description / Levels |

|---|---|---|

s_id |

ID | subject code |

r_pre |

Quant | 0 (SD) - 100 (SA) with Prior to taking 431, I was totally confident and comfortable with using R. |

r_now |

Quant | 0 (SD) - 100 (SA) with Right now, I am totally confident and comfortable with using R. |

comfort_431 |

Quant | 0 (SD) - 100 (SA) with I am very comfortable with my understanding of the material discussed so far in 431. |

height_r |

Quant | What is your height, in inches |

weight_r |

Quant | What is your weight, in pounds |

bmi |

Quant | 703 x weight/(height squared) |

r_before |

Cat-2 | yes, no: Before taking 431, had you ever used R before? |

english |

Cat-2 | yes, no: Is English the language you speak better than any other? |

grades_r |

Cat-3 | Individual, Partner, Group: In your graduate and undergraduate educational experience, which of the following types of assignments have you received the HIGHEST grades for? |

medium_r |

Cat-3 | Movies, TV, Other: Which medium do you use most to get your fictional stories (containing plot)? |

fiction_r |

Cat-4 | Comedy, Drama, Fantasy/SF, Other: Which type of fictional stories do you consume most? |

3.2 Analytic Tibble

Now, I’ll prove that sur_15 is a tibble by printing it.

sur_15# A tibble: 49 × 12

s_id r_pre r_now comfort_431 height_r weight_r bmi r_before english

<chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <fct> <fct>

1 501 0 70 90 68.1 162 24.6 Yes No

2 502 0 70 50 67 151 23.6 No Yes

3 503 0 10 70 62.5 127 22.9 No Yes

4 505 80 90 85 70 178 25.5 Yes Yes

5 506 0 50 80 73 145 19.1 No Yes

6 507 50 50 50 74 320 41.1 Yes Yes

7 508 60 75 80 70 165 23.7 Yes No

8 509 0 50 75 64 135 23.2 No Yes

9 510 30 30 50 69 155 22.9 No Yes

10 511 0 50 88 65 202 33.6 No Yes

# ℹ 39 more rows

# ℹ 3 more variables: grades_r <fct>, medium_r <fct>, fiction_r <fct>3.3 Data Summary

3.3.1 data_description()

Here’s a data_description() result to show some information about the distribution of each quantitative variables in the sur_15 tibble.

describe_distribution(sur_15 |> select(-s_id))Variable | Mean | SD | IQR | Range | Skewness | Kurtosis | n | n_Missing

----------------------------------------------------------------------------------------------

r_pre | 21.94 | 31.31 | 50.00 | [0.00, 90.00] | 1.03 | -0.52 | 49 | 0

r_now | 57.02 | 26.34 | 38.00 | [0.00, 95.00] | -0.62 | -0.54 | 49 | 0

comfort_431 | 77.65 | 16.39 | 20.00 | [30.00, 100.00] | -1.15 | 0.94 | 49 | 0

height_r | 67.42 | 3.49 | 5.50 | [61.00, 74.00] | -0.02 | -0.87 | 49 | 0

weight_r | 154.97 | 34.31 | 37.50 | [107.00, 320.00] | 2.46 | 10.33 | 49 | 0

bmi | 23.86 | 4.11 | 4.22 | [18.64, 41.08] | 1.94 | 5.61 | 49 | 03.3.2 data_codebook()

And here’s the data_codebook() result, which adds in tabulations of the categorical variables.

data_codebook(sur_15 |> select(-s_id))select(sur_15, -s_id) (49 rows and 11 variables, 11 shown)

ID | Name | Type | Missings | Values | N

---+-------------+-------------+----------+----------------+-----------

1 | r_pre | numeric | 0 (0.0%) | [0, 90] | 49

---+-------------+-------------+----------+----------------+-----------

2 | r_now | numeric | 0 (0.0%) | [0, 95] | 49

---+-------------+-------------+----------+----------------+-----------

3 | comfort_431 | numeric | 0 (0.0%) | [30, 100] | 49

---+-------------+-------------+----------+----------------+-----------

4 | height_r | numeric | 0 (0.0%) | [61, 74] | 49

---+-------------+-------------+----------+----------------+-----------

5 | weight_r | numeric | 0 (0.0%) | [107, 320] | 49

---+-------------+-------------+----------+----------------+-----------

6 | bmi | numeric | 0 (0.0%) | [18.64, 41.08] | 49

---+-------------+-------------+----------+----------------+-----------

7 | r_before | categorical | 0 (0.0%) | No | 25 (51.0%)

| | | | Yes | 24 (49.0%)

---+-------------+-------------+----------+----------------+-----------

8 | english | categorical | 0 (0.0%) | No | 16 (32.7%)

| | | | Yes | 33 (67.3%)

---+-------------+-------------+----------+----------------+-----------

9 | grades_r | categorical | 0 (0.0%) | Individual | 40 (81.6%)

| | | | Partner | 4 ( 8.2%)

| | | | Group | 5 (10.2%)

---+-------------+-------------+----------+----------------+-----------

10 | medium_r | categorical | 0 (0.0%) | Movies | 15 (30.6%)

| | | | TV | 21 (42.9%)

| | | | Other | 13 (26.5%)

---+-------------+-------------+----------+----------------+-----------

11 | fiction_r | categorical | 0 (0.0%) | Comedy | 17 (34.7%)

| | | | Drama | 14 (28.6%)

| | | | Fantasy/SF | 12 (24.5%)

| | | | Other | 6 (12.2%)

-----------------------------------------------------------------------4 Analysis A: Compare 2 Population Means using Paired Samples

4.1 The Question

We’ll compare the r_now scores to r_pre scores. The scores are paired by subject, as each subject gives us both a r_pre and r_now score, and computing and assessing within-subject differences in comfort with R makes sense, because we are interested in the change in each person’s comfort level. We’ll generally use r_now - r_pre in our calculations, so that positive numbers indicate improvements in confidence. Note that we’ll use a 90% confidence level throughout this demonstration project for all analyses, and I encourage you to do this in your actual Project B Study 1 work, as well.

So, our research question might be something like:

What is a typical change in comfort with R experienced by students in 431 through the first couple of months in the course?

4.2 Describing the Data

4.2.1 Compute and summarize the paired differences

The natural first step is to compute paired differences between the r_now and r_pre samples, and then use graphical and numerical summaries to assess whether the sample (of differences) can be assumed to follow a Normal distribution. First, we’ll calculate the paired differences.

sur_15 <- sur_15 |>

mutate(r_diff = r_now - r_pre)

sur_15 |> reframe(lovedist(r_diff)) |> gt()| n | miss | mean | sd | med | mad | min | q25 | q75 | max |

|---|---|---|---|---|---|---|---|---|---|

| 49 | 0 | 35.08163 | 29.06017 | 25 | 37.065 | 0 | 10 | 60 | 95 |

OK. It appears that we have successfully subtracted the PRE data from the NOW data, and everyone has a difference of at least zero. Now, we’ll assess whether or not a Normal distribution might be a reasonable model for the data.

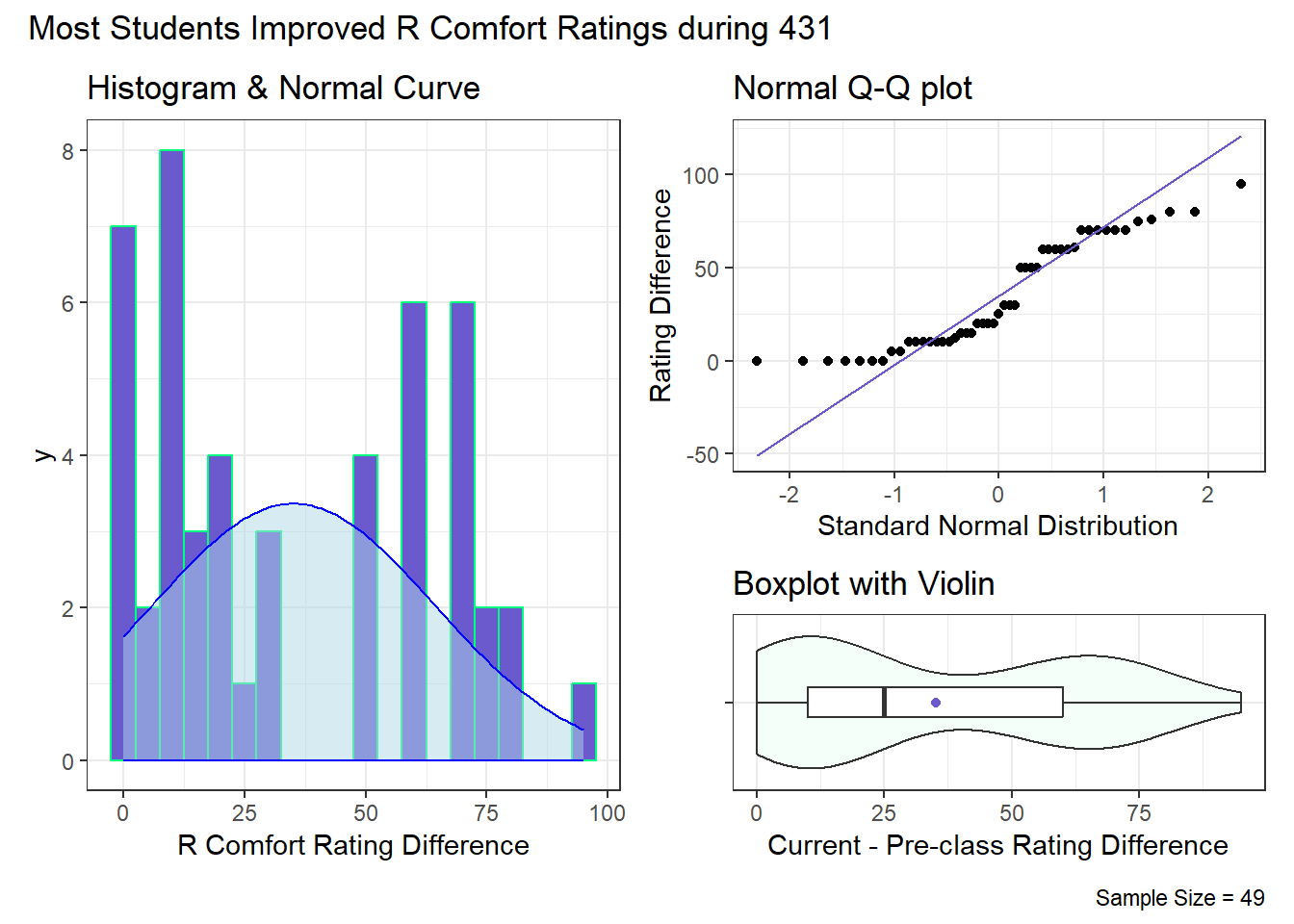

4.2.2 Graphical Summaries to Assess Normality

We should start by looking at the distribution of these 49 values of r_diff. As we’ve seen, there’s a floor effect at zero.

A histogram with 49 values won’t give us a lot of information. Perhaps we should focus instead on a Normal Q-Q plot and boxplot with violin? We’ll draw all three here.

bw = 5 # specify width of bins in histogram

p1 <- ggplot(sur_15, aes(x = r_diff)) +

geom_histogram(binwidth = bw,

fill = "slateblue", col = "springgreen") +

stat_function(fun = function(x)

dnorm(x, mean = mean(sur_15$r_diff), sd = sd(sur_15$r_diff)) *

length(sur_15$r_diff) * bw,

geom = "area", alpha = 0.5, fill = "lightblue", col = "blue") +

labs(x = "R Comfort Rating Difference",

title = "Histogram & Normal Curve")

p2 <- ggplot(sur_15, aes(sample = r_diff)) +

geom_qq() + geom_qq_line(col = "slateblue") +

labs(y = "Rating Difference",

x = "Standard Normal Distribution",

title = "Normal Q-Q plot")

p3 <- ggplot(sur_15, aes(x = r_diff, y = "")) +

geom_violin(fill = "mintcream") +

geom_boxplot(width = 0.2) +

stat_summary(fun = mean, geom = "point", shape = 16, col = "slateblue") +

labs(y = "", x = "Current - Pre-class Rating Difference",

title = "Boxplot with Violin")

p1 + (p2 / p3 + plot_layout(heights = c(2, 1))) +

plot_annotation(

title = "Most Students Improved R Comfort Ratings during 431",

caption = glue("Sample Size = ", nrow(sur_15)))

With just 49 observations, it will be a little difficult to get a clear picture of whether a Normal approximation is reasonable or not. I would conclude that a bootstrap approach would be a better choice here than a Normal model for the paired differences, owing to the floor effect (many zeros) in the paired differences.

sur_15 |> reframe(lovedist(r_diff)) |> gt()| n | miss | mean | sd | med | mad | min | q25 | q75 | max |

|---|---|---|---|---|---|---|---|---|---|

| 49 | 0 | 35.08163 | 29.06017 | 25 | 37.065 | 0 | 10 | 60 | 95 |

The data seem a bit right skewed, as well. The sample mean is 34.7% of a standard deviation larger than the sample median2.

4.2.3 Did Pairing Help Reduce Nuisance Variation?

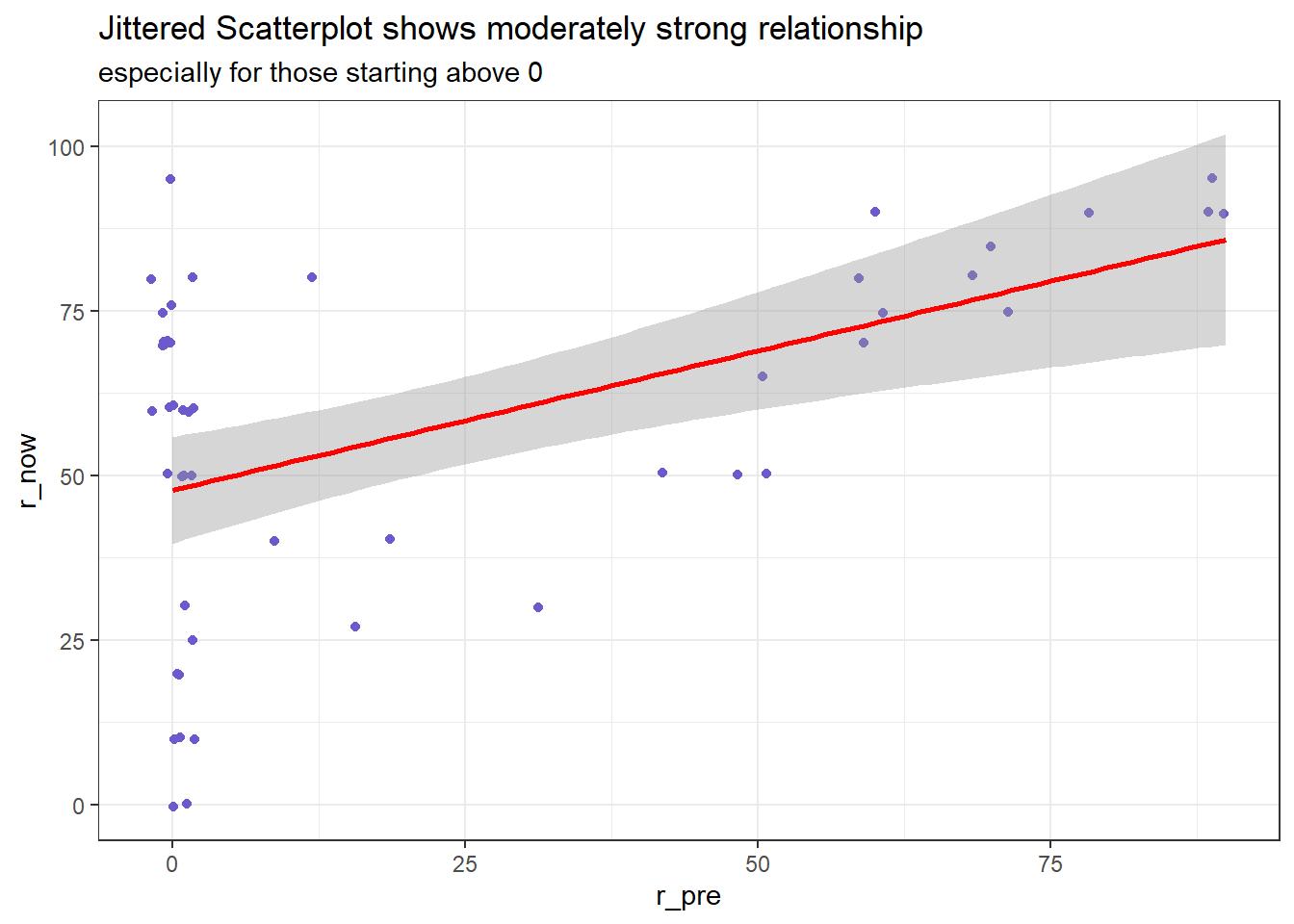

We would expect a strong correlation between the r_pre and r_now scores in this repeated measures analysis where each subject is assessing both their confidence before the class and then again during the class. To assess whether pairing helped reduce nuisance variation, I’ll build a scatterplot of the r_pre and r_now scores, supplemented by a Pearson correlation coefficient. Since we have so many ties in the data, with two or more points in the same place, I’ll use geom_jitter rather than geom_point to plot the points. The larger the correlation, the more that pairing will help reduce the impact of differences between subjects on the r_pre score on the comparison we’re trying to make.

ggplot(sur_15, aes(x = r_pre, y = r_now)) +

geom_jitter(col = "slateblue") +

geom_smooth(formula = y ~ x, method = "lm", col = "red") +

labs(title = "Jittered Scatterplot shows moderately strong relationship",

subtitle = "especially for those starting above 0")

For people with a r_pre score greater than zero, we see a pretty strong linear relationship between r_pre and r_now.

sur_15 |> select(r_pre, r_now) |> correlation()# Correlation Matrix (pearson-method)

Parameter1 | Parameter2 | r | 95% CI | t(47) | p

-----------------------------------------------------------------

r_pre | r_now | 0.50 | [0.26, 0.69] | 3.99 | < .001***

p-value adjustment method: Holm (1979)

Observations: 49The Pearson correlation is quite strong at 0.5 so that a linear model using the r_pre score accounts for a reasonably large fraction (25.3%) of the variation in r_now scores.

- If the Pearson correlation had been small but still positive (perhaps less than 0.2), we might conclude that pairing wouldn’t be exceptionally helpful, but if the samples are meant to be paired, we should still do a paired samples analysis, but such a small correlation would imply that an independent samples comparison would come to about the same conclusion.

4.3 Main Analysis

As you’ll recall, we have two main methods for building confidence intervals in a paired samples analysis:

- The Paired t test

- The Bootstrap

Let’s run each in this demonstration just so you have the code, even though, as mentioned, I’d be most interested in what the bootstrap approach suggests, owing to the modest non-Normality we see in the sample of differences. I’ll even throw in a Wilcoxon signed rank test approach here, even though I wouldn’t recommend you include that in Project B. In each case, we’ll build a 90% confidence interval for the population mean (or pseudo-median, in the case of the signed rank test) of the r_now - r_pre differences.

4.3.1 The Paired t test approach

Here is a 90% confidence interval for the population mean of the paired r_now - r_pre differences.

lm(r_diff ~ 1, data = sur_15) |>

model_parameters(ci = 0.90) Parameter | Coefficient | SE | 90% CI | t(48) | p

------------------------------------------------------------------

(Intercept) | 35.08 | 4.15 | [28.12, 42.04] | 8.45 | < .001

Uncertainty intervals (equal-tailed) and p-values (two-tailed) computed

using a Wald t-distribution approximation.- The point estimate for the population mean of the differences is 35.08, indicating that the average subject rated agreement with the statement about confidence in R 35 points higher now than when they started the class.

- Our 90% confidence interval for the population mean of the differences is (28.12, 42.04).

- The confidence interval reflects imprecision in the population estimate, based only on assuming that the participants are selected at random from the population of interest.

- When we generalize beyond study participants to the population they were selected at random from, then our data are compatible (at the 90% confidence level) with population mean differences between 28.12 and 42.04, depending on the assumptions of our linear model (here, our paired t test) being correct.

- The assumptions of the paired t procedure are

- that the matched differences are independent of each other,

- that the matched differences represent a random sample of the population of possible matched differences,

- and that the matched differences are drawn from a Normally distributed population.

- The last of these assumptions is hard to justify given these data, which is why I’d prefer the bootstrap approach in this case.

- Here, I’ve assumed a two-sided confidence interval procedure Use a two-sided confidence interval for everything you do in 431.

4.3.2 The Bootstrap approach for the mean from paired samples

Here is a 90% confidence interval for the population mean of the paired r_now - r_pre differences, as estimated by a bootstrap approach using a random seed of 431. (Note: when you set a seed for this or other analyses in the project, pick something other than 431.)

set.seed(431)

x_bar <- sur_15 |> observe(response = r_diff, stat = "mean")

res1 <- sur_15 |>

specify(response = r_diff) |>

generate(reps = 1000, type = "bootstrap") |>

calculate(stat = "mean") |>

get_confidence_interval(level = 0.90, type = "percentile")

res1 <- res1 |> mutate(pt_est = x_bar$stat) |> relocate(pt_est)

res1 |> gt()| pt_est | lower_ci | upper_ci |

|---|---|---|

| 35.08163 | 28.38673 | 41.79898 |

- The point estimate for the population mean of the differences is 35.08, indicating that the average subject rated agreement with the statement about confidence in R 35 points higher now than when they started the class.

- Our 90% confidence interval for the population mean of the differences is fairly close to what we got from the paired t test, as it turns out.

- The confidence interval reflects imprecision in the population estimate, based only on assuming that the participants are selected at random from the population of interest.

- When we generalize beyond study participants to the population they were selected at random from, then our data are compatible (at the 90% confidence level) with population mean differences between 28.39 and 41.80, depending on the assumptions of bootstrap estimation procedure being correct.

- Again, I’ve assumed a two-sided confidence interval procedure, and so we can discuss a range of reasonable estimates for the true difference in

r_preandr_nowscores. - The assumptions of this bootstrap procedure are

- that the matched differences are independent of each other, and

- that the matched differences represent a random sample of the population of possible matched differences.

These assumptions seem more reasonable.

4.4 Conclusions

Subjects appear to improve in their comfort with R an average of 35.1 points on the 0-100 scale, with a 90% confidence interval for that average improvement of (28.4, 41.8) points. This conclusion is motivated by a bootstrap estimate to compare paired responses from students before and after the first couple of months of the course, and I feel this is the most justified approach based on my assessment of Normality in the data from these 49 students.

A natural next step would be to look at values of something like this over multiple years, or perhaps comparing students at more than just two stages. It would also be appealing to measure comfort with R at the earlier time, and then return to the students later, rather than asking them to remember where they were at the start a couple of months later. There are several other possible next steps here, too, depending on what population you might decide to target.

5 Analysis B: Compare 2 Population Means using Independent Samples

5.1 The Question

We’ll compare bmi by english in this analysis using independent samples. We’re comparing the mean bmi of the population represented by respondents who speak English best to the mean bmi of the population represented by the respondents who speak some other language better. There is nothing to suggest that the two samples (English bmi and non-English bmi values) are paired or matched in any way. Plus, as we’ll see, there are different numbers of English and non-English preferring subjects, so there’s no way their bmi values could be paired. As a result, we’re going to be interested in looking at the two samples separately to help us understand issues related to hypothesis testing assumptions. Note that we’ll use a 90% confidence level throughout this demonstration project for all analyses, and I encourage you to do this in your actual Project Study B work, as well.

Our research question is:

Did students who speak English best have meaningfully different average body mass index values than students who speak some other language better than they speak English?

5.2 Describing the Data

Can the samples (of Yes and No respondents, separately) each be modeled appropriately by a Normal distribution?

5.2.1 Graphical Summaries

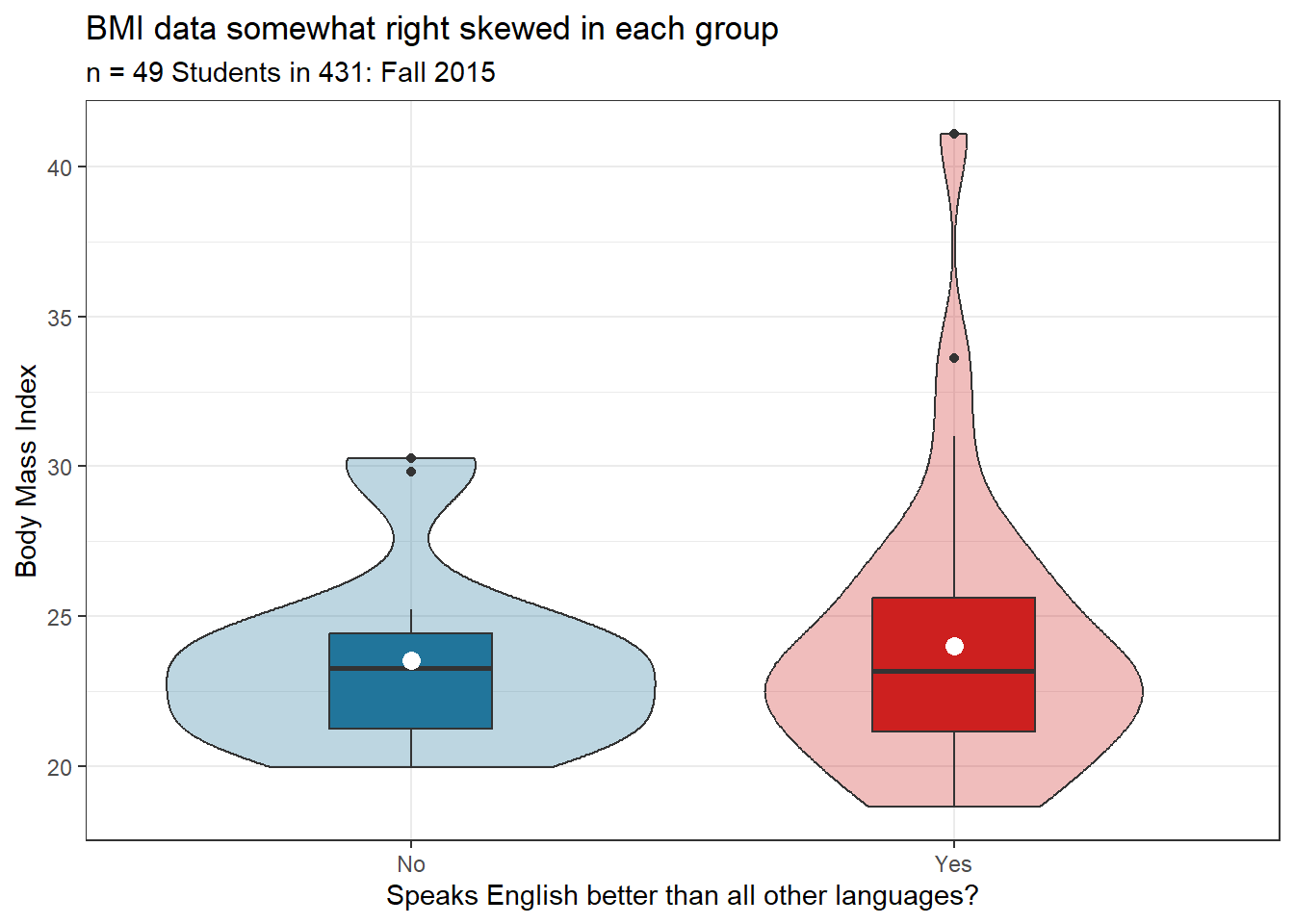

Let’s build a comparison boxplot (with violins and means) to start.

ggplot(sur_15, aes(x = english, y = bmi, fill = english)) +

geom_violin(alpha = 0.3) +

geom_boxplot(width = 0.3) +

stat_summary(fun = mean, geom = "point", size = 3, col = "white") +

scale_fill_social() +

guides(fill = "none") +

labs(title = "BMI data somewhat right skewed in each group",

subtitle = "n = 49 Students in 431: Fall 2015",

x = "Speaks English better than all other languages?", y = "Body Mass Index")

There are at least a couple of candidate outliers in each group on the high end, which suggest some potential for meaningful skew.

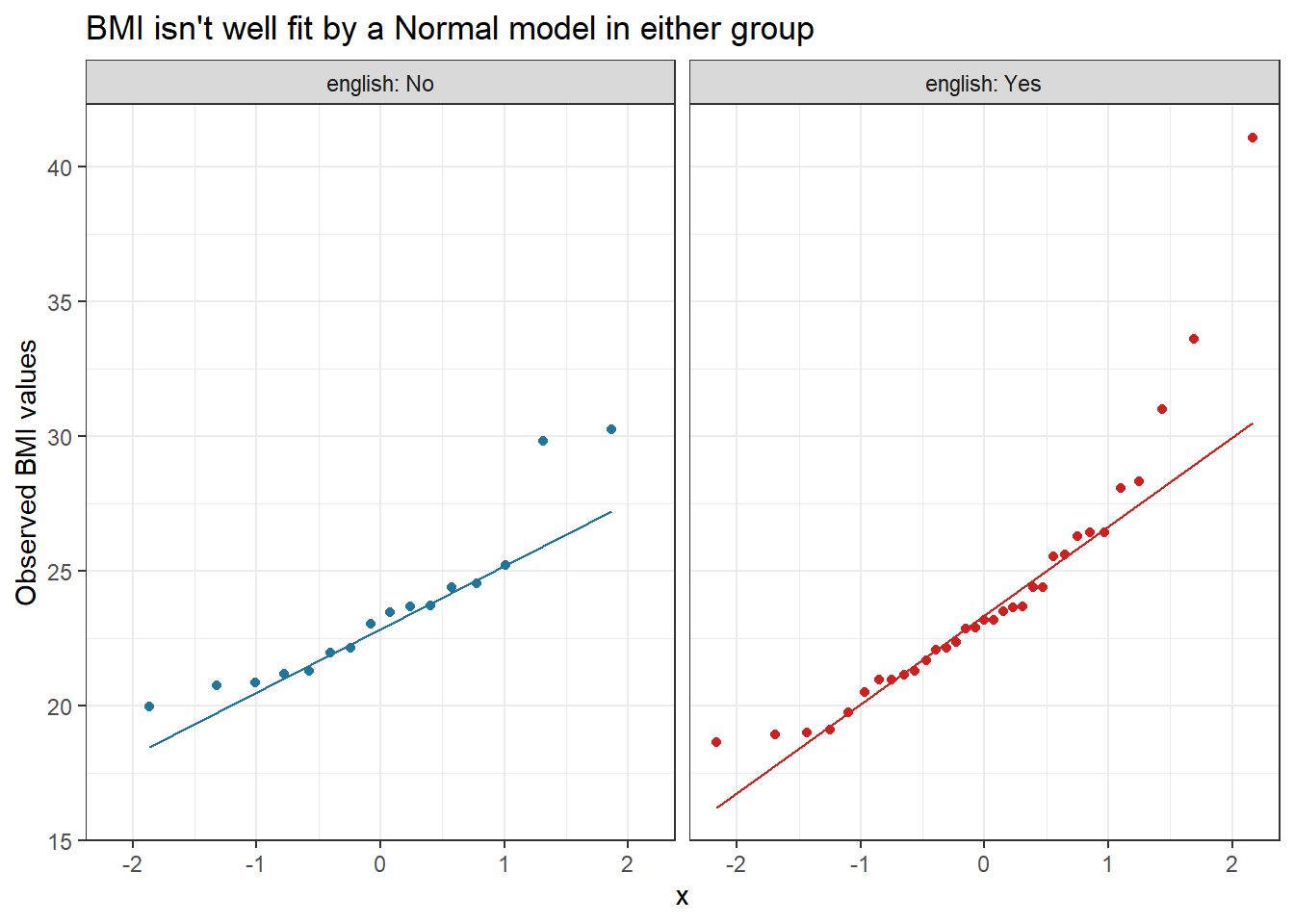

We could also build a pair of Normal Q-Q plots.

ggplot(sur_15, aes(sample = bmi, col = english)) +

geom_qq() + geom_qq_line() +

facet_wrap(~ english, labeller = "label_both") +

scale_color_social() +

guides(col = "none") +

labs(y = "Observed BMI values",

title = "BMI isn't well fit by a Normal model in either group")

It looks like the right skew is large enough, at least in the Yes (speaks English best) group to warrant avoiding tests that require Normality. So again it looks like it’s not reasonable to assume Normality here, or even symmetry.

5.2.2 Numerical Summaries

We have 16 No and 33 Yes respondents to the English language question who have known BMI values.

sur_15 |> group_by(english) |> reframe(lovedist(bmi)) |> gt()| english | n | miss | mean | sd | med | mad | min | q25 | q75 | max |

|---|---|---|---|---|---|---|---|---|---|---|

| No | 16 | 0 | 23.52387 | 2.971336 | 23.26601 | 2.409531 | 19.96686 | 21.25653 | 24.43161 | 30.27053 |

| Yes | 33 | 0 | 24.02736 | 4.601075 | 23.17017 | 3.246759 | 18.63574 | 21.15509 | 25.60354 | 41.08108 |

- In the “Yes” group, the sample mean BMI is 24.03 and sample median BMI is 23.17, while the sample standard deviation of BMI is 4.60. So the mean is 18.7% of a standard deviation above the median3.

- In the “No” group, the sample mean BMI is 23.52 and sample median BMI is 23.27, while the sample standard deviation of BMI is 2.97. So the mean is 8.4% of a standard deviation above the median.

There’s room for concern about whether a test that requires Normal distributions in the populations is a good choice here. With these small sample sizes, we’d probably be better off not making too many strong assumptions.

5.3 Main Analysis

As you’ll recall, we have three main methods for building confidence intervals in an independent samples analysis:

- Welch’s t test (t test without assuming equal variances)

- The Pooled t test (t test with equal variances assumed)

- The Bootstrap

Let’s run each here just so you have the code, even though, as mentioned, I’d be most interested in what the bootstrap approach suggests, owing to the fact that the samples aren’t well described by Normal models or even symmetric ones. In each case, we’ll build a 90% confidence interval for the population mean comparing bmi for people who answered Yes and No to the question about English being the language they speak best.

5.3.1 The Welch’s t test approach

With a somewhat unbalanced design (16 No and 33 Yes), the assumption of equal population variances will probably require us to look at the sample variances.

sur_15 |> group_by(english) |>

summarise(n = n(), mean = mean(bmi), variance = var(bmi)) |> gt()| english | n | mean | variance |

|---|---|---|---|

| No | 16 | 23.52387 | 8.828839 |

| Yes | 33 | 24.02736 | 21.169895 |

That’s a pretty substantial difference in variance with the Yes group a good deal more than 50% larger than the No group, so we might expect the Welch t test and pooled t test to look fairly different, and that would motivate me to focus on the Welch approach over the pooled t test. Of course, neither is a great choice here, due to the samples showing some non-Normality. Regardless, here is a 90% confidence interval for the difference between the “No” group and the “Yes” group population mean bmi based on Welch’s test.

t.test(bmi ~ english, data = sur_15, conf.level = 0.90)

Welch Two Sample t-test

data: bmi by english

t = -0.4609, df = 42.944, p-value = 0.6472

alternative hypothesis: true difference in means between group No and group Yes is not equal to 0

90 percent confidence interval:

-2.339923 1.332950

sample estimates:

mean in group No mean in group Yes

23.52387 24.02736 - The point estimates for the two population

bmimeans are 23.523 for No and 24.027 for Yes, so the average student who speaks English best has a BMI estimated to be about 0.504 points higher points higher than the average for a student who speaks another language better than English, based on our samples. - Our 90% confidence interval for the difference (English - non-English) of the population means is (-1.33, 2.34).

- The confidence interval reflects imprecision in the population estimate, based only on assuming that the participants are selected at random from the population of interest.

- When we generalize beyond study participants to the population they were selected at random from, then our data are compatible (at the 90% confidence level) with differences between the two population means between -1.33 and 2.34, depending on the assumptions of our Welch t test being correct.

- The assumptions of the Welch’s t test are

- that the samples in each group are drawn independently of each other,

- that the samples in each group represent a random sample of the population of interest,

- and that the samples in each group are drawn from a Normally distributed population.

- The last of these assumptions is especially hard to justify given these data.

- Here, I’ve also used a two-sided confidence interval procedure, which is what we’ll do in all work for 431, not just Project B.

5.3.2 The Pooled t test (t test with equal variances)

The pooled t test, of course, actually adds an assumption (that either the sample sizes or the population variances are equal) to the assumptions of the Welch test. As mentioned above, the large difference in the sample variances and sample sizes makes this test unattractive, in addition to the problems with assuming Normality. Regardless, here is a 90% confidence interval for the difference between the non-English and English population mean bmi based on the pooled t test.

fitB <- lm(bmi ~ english, data = sur_15)

model_parameters(fitB, ci = 0.90)Parameter | Coefficient | SE | 90% CI | t(47) | p

--------------------------------------------------------------------

(Intercept) | 23.52 | 1.04 | [21.78, 25.27] | 22.67 | < .001

english [Yes] | 0.50 | 1.26 | [-1.62, 2.63] | 0.40 | 0.692

Uncertainty intervals (equal-tailed) and p-values (two-tailed) computed

using a Wald t-distribution approximation.- The point BMI estimates are 23.523 for No and 24.027 for Yes, so the average student who speaks English best has a BMI estimated to be about 0.504 points higher than the average for a student who speaks another language better than English, based on our samples.

- Our 90% confidence interval for the difference (English - non-English) of the population means is now (-1.62, 2.63) based on the pooled t procedure.

- The confidence interval reflects imprecision in the population estimate, based only on assuming that the participants are selected at random from the population of interest.

- When we generalize beyond study participants to the population they were selected at random from, then our data are compatible (at the 90% confidence level) with differences between the two population means between -1.62 and 2.63, depending on the assumptions of our linear model being correct.

- The assumptions of the pooled t test are

- that the samples in each group are drawn independently of each other,

- that the samples in each group represent a random sample of the population of interest,

- the samples in each group are drawn from a Normally distributed population,

- and that either the sample sizes or the population variances are equal.

- The Normality assumption remains hard to justify given these data, so we should look at alternatives.

- Here, I’ve again used a two-sided confidence interval procedure, as we’ll do in all 431 work.

5.3.3 The Bootstrap for comparing means from two independent samples

An independent samples comparison that doesn’t require Normality is the bootstrap. Here is a 90% confidence interval for the difference between the English and non-English population bmi distributions based on the bootstrap using a seed of 431. (Note: when you set a seed for this or other analyses in the project, pick something other than 431.)

set.seed(431)

sur_15 |>

specify(bmi ~ english) |>

generate(reps = 1000, type = "bootstrap") |>

calculate(stat = "diff in means",

order = c("Yes", "No") ) |>

get_confidence_interval(level = 0.90, type = "percentile")# A tibble: 1 × 2

lower_ci upper_ci

<dbl> <dbl>

1 -1.19 2.28- The population mean BMI in those who said Yes is estimated to be about 0.504 points higher than the population mean BMI for those who said No, based on our samples. So the mean differences’ point estimate is 0.504

- Our 90% bootstrapped confidence interval for the difference (Yes - No) of the population means is (-1.19, 2.28).

- When we generalize beyond study participants to the population they were selected at random from, then our data are compatible (at the 90% confidence level) with differences between the two population means between -1.19 and 2.28, depending on the assumptions of our bootstrap estimation procedure being correct.

- The assumptions of this bootstrap procedure are:

- that the samples in each group are drawn independently of each other,

- and that the samples in each group represent a random sample of the population of interest.

- Again, I’ve used a two-sided confidence interval procedure.

So, I think the bootstrap procedure would be most appropriate here, due to the non-Normality (and in particular the asymmetry) in the samples.

5.4 Conclusions

We find a point estimate of 0.51 and a 90% confidence interval of (-1.19, 2.28) for the difference between the population mean BMI for those who speak English best minus the population mean BMI for those who speak another language best, based on our sample. This conclusion is motivated by a bootstrap estimate to compare the two groups (English and non-English) across 49 subjects. I feel this is the most justified approach based on the apparent skew in the data (particularly among those who speak English best) in these students.

If this were an actual Project B Study 1, I’d discuss the other issues that go into a conclusion, including limitations of this study and suggestions about logical next steps. But I’ll leave that to you.

6 Analysis C: Comparing 3 Population Means via ANOVA

6.1 The Question

We’ll compare comfort_431 by grades_r in this analysis, using the analysis of variance, and related tools. We’re comparing the mean comfort_431 scores of the population represented by the respondents who got their best grades_r on individual work, to the population represented by the respondents who got their best grades_r with a partner, to the population represented by the respondents who got their best grades_r on group work. There is no link between subjects across the three grades_r groups, so the samples are independent. Plus, as we’ll see, there are different numbers of subjects in the three grades_r groups, so there’s no way their comfort_431 values could be matched. As a result, we’re going to be interested in looking at the three samples separately to help us understand issues related to hypothesis testing assumptions. Note that we’ll use a 90% confidence level throughout this demonstration project for all analyses, and I encourage you to do this in your actual Project B Study 1 work, as well.

If this were an actual Study 1, rather than a demonstration, I’d build a research question here, but that will be your job.

6.2 Describing the Data

I’ll start by looking at the range of the comfort_431 data within each grades_r group.

sur_15 |> group_by(grades_r) |> reframe(lovedist(comfort_431))# A tibble: 3 × 11

grades_r n miss mean sd med mad min q25 q75 max

<fct> <int> <int> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 Individual 40 0 79.0 15.3 80 14.8 35 73.8 90 100

2 Partner 4 0 63.8 23.6 70 11.1 30 60 73.8 85

3 Group 5 0 78.4 17.8 85 14.8 50 73 89 95We have only 4 and 5 respondents in the Partner and Group grades_r categories, respectively, so that will make it difficult to say much about the distributions of comfort_431 in those populations.

6.2.1 Graphical Summaries

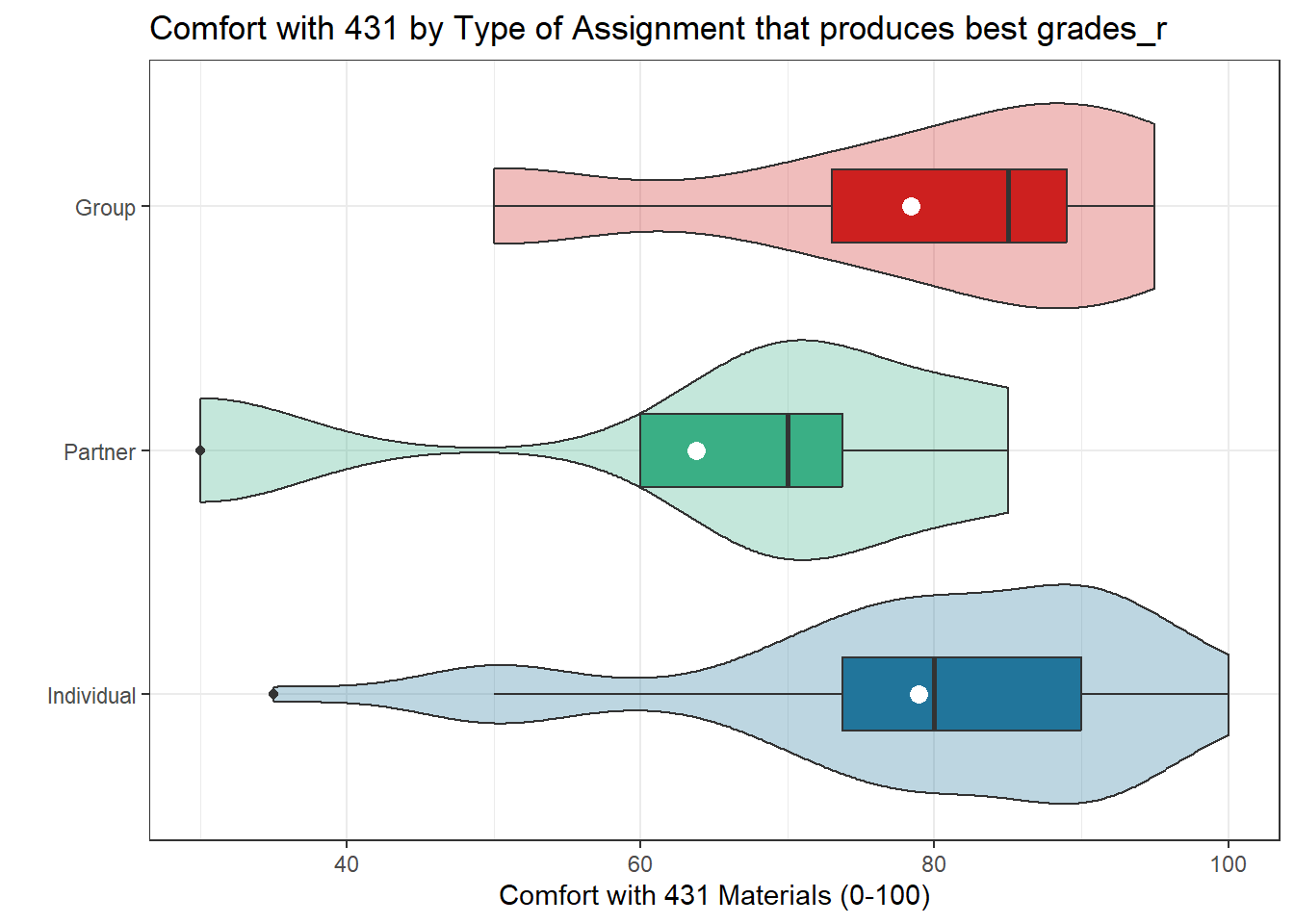

Since we are exploring the distributions of three independent samples, I’ll plot each of the groups in a comparison boxplot, as a start.

ggplot(sur_15, aes(x = grades_r, y = comfort_431, fill = grades_r)) +

geom_violin(alpha = 0.3) +

geom_boxplot(width = 0.3) +

stat_summary(fun = "mean", geom = "point", size = 3, col = "white") +

coord_flip() +

scale_fill_social() +

guides(fill = "none") +

labs(title = "Comfort with 431 by Type of Assignment that produces best grades_r",

y = "Comfort with 431 Materials (0-100)",

x = "")

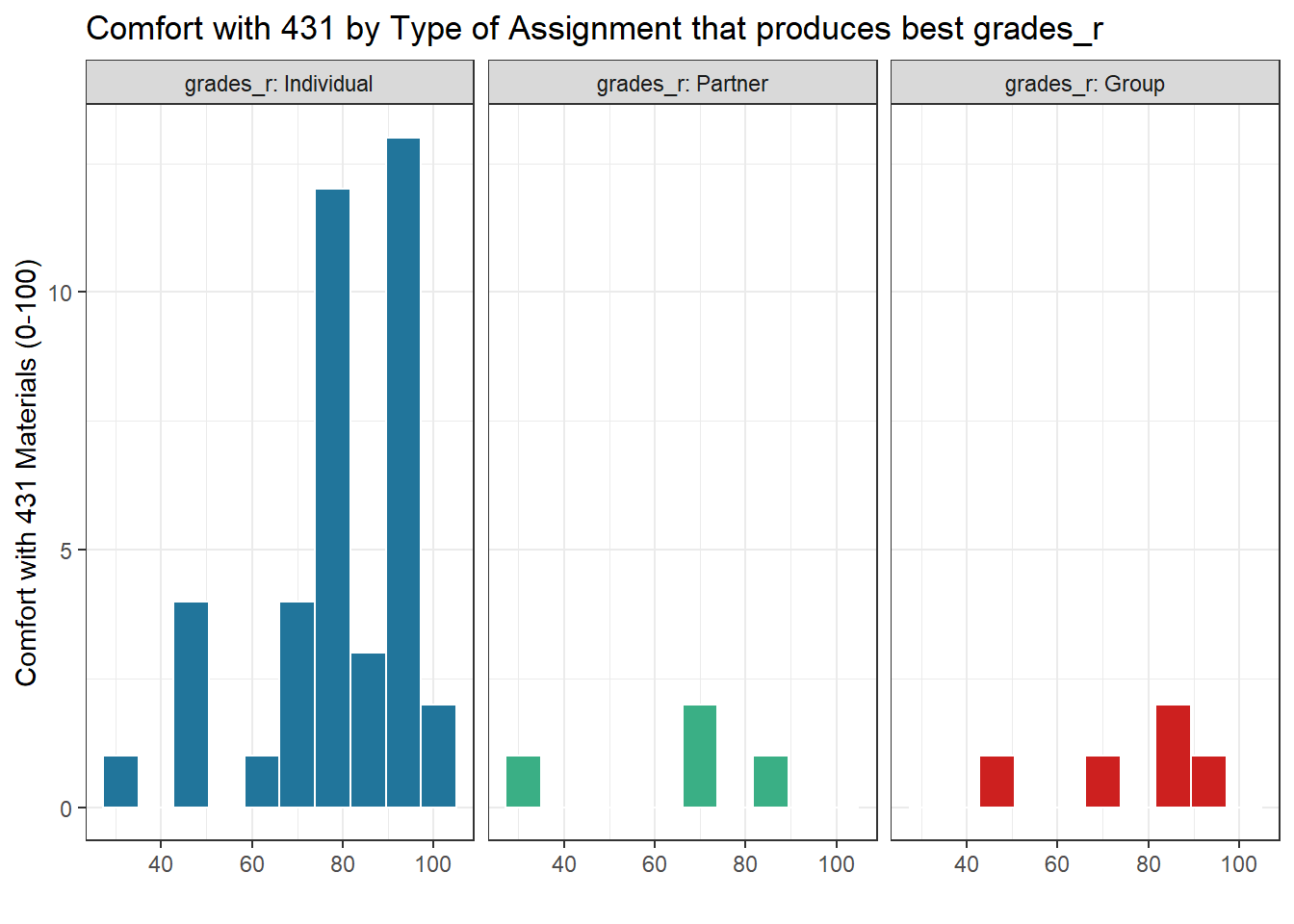

The sample sizes are so small that histograms for those two levels of the grades_r factor (Partner and Group) tell us nothing of substantial value.

ggplot(sur_15, aes(x = comfort_431)) +

geom_histogram(aes(fill = grades_r), bins = 10, col = "white") +

facet_wrap(~ grades_r, labeller = "label_both") +

scale_fill_social() +

guides(fill = "none") +

labs(title = "Comfort with 431 by Type of Assignment that produces best grades_r",

y = "Comfort with 431 Materials (0-100)",

x = "")

- In addition, the Individual data look as though they may be either skewed to the left a bit or at least have one potential outlier.

- With these tiny sample sizes (less than 10 observations) these plots don’t really help. All of the values in each group are within the stated response levels (0-100) but otherwise, there’s not a lot to go on. ANOVA is quite robust, so we’ll run it, but I expect that a Kruskal-Wallis approach may also be useful here.

6.2.2 Numerical Summaries

With so few observations in the Partner and Group grades_r levels, there’s not much to see in numerical summaries, either.

sur_15 |> group_by(grades_r) |> reframe(lovedist(comfort_431))# A tibble: 3 × 11

grades_r n miss mean sd med mad min q25 q75 max

<fct> <int> <int> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 Individual 40 0 79.0 15.3 80 14.8 35 73.8 90 100

2 Partner 4 0 63.8 23.6 70 11.1 30 60 73.8 85

3 Group 5 0 78.4 17.8 85 14.8 50 73 89 95We have 40 Individual, 4 Partner and 5 Group subjects with known comfort levels.

The conclusion I draw from all of this is that we need to run but that we probably can’t trust an ANOVA here, with such small sample sizes in the non-Individual grades_r levels. Anything below 10 subjects is just too small, and, practically, I’d consider collapsing the groups to Individual vs. All Other. But for this demonstration, I’ll press on.

6.3 Analysis of Variance

Let’s run the ANOVA here just so you have the code, even though we don’t have large enough data samples in the Partner and Group levels to justify statistical inference at all.

The Analysis of Variance compares the means of comfort_431 in the three grades_r populations. We can run the analysis using either of two approaches, each of which we’ll show in what follows.

fitC <- lm(comfort_431 ~ grades_r, data = sur_15)

fitC |> anova()Analysis of Variance Table

Response: comfort_431

Df Sum Sq Mean Sq F value Pr(>F)

grades_r 2 843.3 421.63 1.609 0.2111

Residuals 46 12053.8 262.04 fitC |> eta_squared()For one-way between subjects designs, partial eta squared is equivalent

to eta squared. Returning eta squared.# Effect Size for ANOVA

Parameter | Eta2 | 95% CI

-------------------------------

grades_r | 0.07 | [0.00, 1.00]

- One-sided CIs: upper bound fixed at [1.00].- Here, we’d conclude only that the results we have are not particularly consistent with an assumption that there are no differences between the population mean

comfort_431scores for the threegrades_rcategories. - The

grades_raccount for \(\eta^2 = \frac{843.3}{843.3 + 12053.8} = 0.07\) or 7% of the variation incomfort_431scores in our sample. - The natural next question is to try to identify which pairs of

grades_rcategories show larger estimated differences, and we’ll tackle that in a moment with a Bonferroni/Holm approach (although you are welcome to use Tukey HSD instead.) - ANOVA is the natural extension of the pooled t test for two independent samples, and so it has the same set of assumptions when we compare population means across multiple categories (here, the three

grades_rcategories)…- that the samples in each category are drawn independently of each other,

- that the samples in each category represent a random sample of the population of interest,

- the samples in each category are drawn from a Normally distributed population,

- and that either the sample sizes or the population variances are equal across the categories.

The main problem here is that the sample size is so small that we can’t tell whether this result is truly reasonable or not. We really need a minimum of 15 observations (and ideally more like 30) in each group to let our histograms and boxplots have any chance to be informative on these points. We’ll move on to looking at the pairwise comparisons, though, in this demonstration.

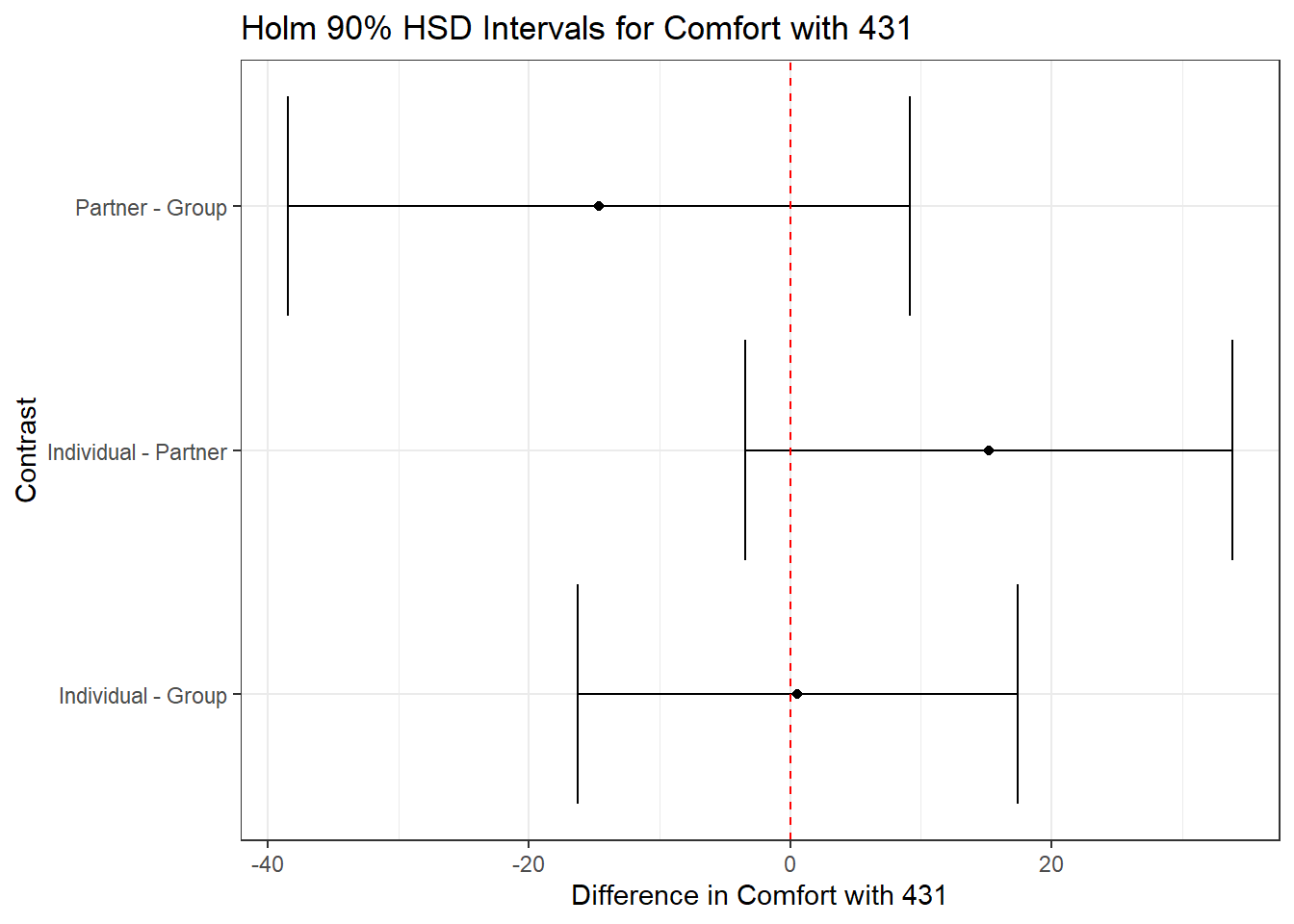

6.3.1 Holm approach to Pairwise Comparisons of Means

We have two approaches available for dealing with multiple comparisons. If we had not pre-planned the full set of pairwise comparisons of comfort_431 across the grades_r categories, or if we wanted to use a fairly conservative approach, we could apply a Holm correction to our comparisons. This works reasonably well even with an unbalanced design, such as we have here, and I recommend you use this approach in Project B Study 1 Analysis C4.

conC1 <- estimate_contrasts(fitC, contrast = "grades_r",

ci = 0.90, p.adjust = "holm")

conC1 |> gt()| Level1 | Level2 | Difference | CI_low | CI_high | SE | df | t | p |

|---|---|---|---|---|---|---|---|---|

| Individual | Group | 0.55 | -16.295760 | 17.395760 | 7.678480 | 46 | 0.07162876 | 0.9432079 |

| Individual | Partner | 15.20 | -3.423689 | 33.823689 | 8.488879 | 46 | 1.79057809 | 0.2398296 |

| Partner | Group | -14.65 | -38.473503 | 9.173503 | 10.859010 | 46 | -1.34911010 | 0.3678106 |

- Our process detects positive differences between the mean of the Partner category and the means of the other two categories, but suggests that the difference between Individual and Group means covers both positive and negative values.

- Note that a more comprehensive discussion of the confidence intervals here might be helpful, but I’ll leave that to you.

- The assumptions here include the ANOVA assumptions, which are no more or less justified than they were before. We do not, however, require that our pairwise comparisons be pre-planned.

Here is the plot of the results.

conC1_tib <- tibble(conC1) |>

mutate(contr = str_c(Level1, " - ", Level2))

ggplot(conC1_tib, aes(y = contr, x = Difference)) +

geom_point() +

geom_errorbar(aes(xmin = CI_low, xmax = CI_high)) +

geom_vline(xintercept = 0, col = "red", lty = "dashed") +

labs(title = "Holm 90% HSD Intervals for Comfort with 431",

y = "Contrast",

x = "Difference in Comfort with 431")

6.4 Conclusions

Our conclusions are:

- that the sample size is just too small in the non-Individual

grades_rcategories to draw very firm conclusions, and - the ANOVA results don’t appear to have the power to detect meaningful differences between the means based on this small sample.

I’ve made no special effort here to write these conclusions in the format we’re looking for in your Study 1 work, because you’ll have larger sample sizes, and (I hope) more faith as a result in the comparisons you build.

7 Analysis D: Two-Way (2 x 2) Contingency Table

7.1 The Question

We’ll look at the association of r_before with english in this analysis. The r_before variable and the english variable each have two levels, and suppose we are interested in whether english has an impact on r_before, so we’ll build a contingency table with english in the rows and r_before in the columns. Note that we’ll use a 90% confidence level and the add 2 successes and 2 failures Bayesian augmentation, and I encourage you to do this in your actual Project B Study 1 work, as well. I’ll remind you that in your Project B, we’re requiring you to have a minimum number of observations within each cell of the table that I cannot meet here with this tiny sample size.

If this were an actual Study 1, rather than a demonstration, I’d build a research question here, but I have decided to leave that work to you.

7.2 Describing the Data

Here is the data for our 2x2 table.

sur_15 |> tabyl(english, r_before) |> adorn_title() r_before

english No Yes

No 9 7

Yes 16 17Those names could use some work, I think.

- The row names, in order, should be something like “English” (where “Yes” is now) and “Not English” with “English” first

- The column names, respectively, should be “Prior R user” and “No Prior R”, with “Prior R User” first.

sur_15_D <- sur_15 |>

select(s_id, english, r_before) |>

mutate(english_r = fct_recode(factor(english),

"Not English" = "No",

"English" = "Yes"),

english_r = fct_relevel(english_r, "English"),

r_before_r = fct_recode(factor(r_before),

"No Prior R" = "No",

"Prior R user" = "Yes"),

r_before_r = fct_relevel(r_before_r, "Prior R user"))sur_15_D |> tabyl(english_r, r_before_r) english_r Prior R user No Prior R

English 17 16

Not English 7 97.3 Main Analysis

I strongly encourage you to use the Bayesian augmentation where we add two successes and add two failures, as recommended in Agresti and Coull5, and to use 90% confidence levels. To accomplish this I’ll use the twoby2 function in the Epi package.

t1 <- table(sur_15_D$english_r, sur_15_D$r_before_r)

twoby2(t1 + 2, conf.level = 0.90) 2 by 2 table analysis:

------------------------------------------------------

Outcome : Prior R user

Comparing : English vs. Not English

Prior R user No Prior R P(Prior R user) 90% conf. interval

English 19 18 0.5135 0.3806 0.6445

Not English 9 11 0.4500 0.2809 0.6315

90% conf. interval

Relative Risk: 1.1411 0.7030 1.8522

Sample Odds Ratio: 1.2901 0.5161 3.2248

Conditional MLE Odds Ratio: 1.2844 0.4506 3.7146

Probability difference: 0.0635 -0.1576 0.2740

Exact P-value: 0.7828

Asymptotic P-value: 0.6474

------------------------------------------------------Note what I did to add two observations to each cell of the table.

In your Project B write-up, be sure to interpret the point estimates of the relative risk and the odds ratio (the sample one, not the conditional MLE one) and the probability difference. For the confidence intervals, though, just write out your interpretation of the confidence interval explanation for the probability difference.

We can draw conclusions now about:

- The individual probabilities of being a prior R user in the English and non-English groups, and 90% confidence intervals for each at the top of the output, so that, for instance, we estimate the probability of prior R usage among subjects for whom English is not their best language at 0.45, with 90% confidence interval (0.29, 0.63).

- The relative risk of Prior R use given English vs. Prior R use given non-English, which is estimated to be 1.14, with 90% CI (0.70, 1.85). (In your project, you’ll explain what your relative risk point estimate means in detail, but don’t worry about interpreting the CI - just specify the lower and upper bounds.)

- The odds ratio describing the odds of Prior R use given English vs. Non-English, which is estimated to be 1.29 with 90% CI (0.52, 3.22). (In your project B, you’ll explain what your odds ratio point estimate means in detail, but don’t worry about interpreting the CI - just specify the lower and upper bounds.)

- The difference in probability of Prior R use for English vs. non-English subjects, which is estimated to be 0.0635, with a 90% confidence interval of (-0.1576, 0.2740). (In your project, you’ll explain what your point estimate for the probability difference means in detail and also state and interpret the CI for the probability difference.)

- The chi-square test of independence, which assesses the null hypothesis of no association between language preference and prior R usage, using either Fisher’s exact test or the Pearson chi-square test (labeled asymptotic here.) The p value here is quite large.

7.3.1 Checking Assumptions

Since each cell in our (non-augmented) 2x2 table is at least 5, R throws no warning messages. We should be reasonably comfortable with the chi-square test of independence here. If every cell was 10 or more, we’d be even more comfortable.

7.3.2 What If We Wanted to Type in the Table Ourselves?

With the twobytwo function available in the Love-boost.R script, we can directly obtain 90% confidence intervals. For example, suppose we had the following data, pulled from our 2016 survey:

| 2016 Survey | Drank Tea Recently | Didn’t Drink Tea |

|---|---|---|

| Not Born in US | 21 | 10 |

| US Born | 20 | 18 |

Suppose we wanted to use twobytwo and the +2/+4 Bayesian augmentation (adding 2 to the count in each cell of our 2x2 table) and a 90% confidence interval for this comparison, to see whether the population proportions who drank tea recently differ between those born in and out of the US.

twobytwo(21+2, 10+2, 20+2, 18+2,

"Not US Born", "US Born", "Drank Tea", "No Tea",

conf.level = 0.90)2 by 2 table analysis:

------------------------------------------------------

Outcome : Drank Tea

Comparing : Not US Born vs. US Born

Drank Tea No Tea P(Drank Tea) 90% conf. interval

Not US Born 23 12 0.6571 0.5162 0.7749

US Born 22 20 0.5238 0.3982 0.6465

90% conf. interval

Relative Risk: 1.2545 0.9160 1.7181

Sample Odds Ratio: 1.7424 0.8024 3.7839

Conditional MLE Odds Ratio: 1.7299 0.7276 4.1948

Probability difference: 0.1333 -0.0512 0.3036

Exact P-value: 0.2561

Asymptotic P-value: 0.2389

------------------------------------------------------7.4 Conclusions

Our primary conclusions about the study we’ve done here in Analysis D should be motivated by comparing the point estimates of the relative risk and odds ratio to what they would be (i.e., 1) if there was no relationship, and a more thorough description of the estimated difference in probabilities between the two groups (English and not English in our case) including its 90% confidence interval.

Then we’d write more about limitations and opportunities for further work, were this an actual Study 1, instead of just a demonstration.

8 Analysis E: Two-Way (3 x 4) Contingency Table

8.1 The Question

We’ll look at the association of two categorical factors we created earlier: medium_r and fiction_r in this analysis. We’re interested in whether there is an association between the ways in which subjects consumed their fiction, and the type of fiction they most enjoy. The medium_r data have three levels, and the fiction_r data have four levels. Note that we’ll use a 90% confidence level and I encourage you to do this in your actual Project B Study 1 work, as well.

If this were an actual Study 1, rather than a demonstration, I’d build a research question here, but I have decided to leave that work to you.

8.2 Describing the Data

Let’s store this initial table of interest as table_E1

table_E1 <- table(sur_15$medium_r, sur_15$fiction_r)

table_E1

Comedy Drama Fantasy/SF Other

Movies 4 4 5 2

TV 10 5 2 4

Other 3 5 5 0We could add the marginal totals, I suppose.

sur_15 |>

tabyl(medium_r, fiction_r) |>

adorn_totals(where = c("row", "col")) medium_r Comedy Drama Fantasy/SF Other Total

Movies 4 4 5 2 15

TV 10 5 2 4 21

Other 3 5 5 0 13

Total 17 14 12 6 49Note that we don’t meet the Cochran conditions here. In particular, we still have a 0 cell, and that might motivate us to consider collapsing or removing the “Other” category from the fiction_r variable.

NOTE: In doing your project B, I would only proceed once I’d identified variables (after whatever collapsing you decide to do) that meet the Cochran conditions (described below.)

I’ll leave it alone for now, and see what happens, returning to this later. The research question, if I’d written would need to address whether which medium (Movies, TV or other) you like is associated with which genre (Comedy, Drama, Fantasy/SF) you prefer.

8.3 Main Analysis

8.3.1 Running the Pearson \(\chi^2\) Test

We’ll run the Pearson \(\chi^2\) test using:

chisq.test(table_E1)Warning in stats::chisq.test(x, y, ...): Chi-squared approximation may be

incorrect

Pearson's Chi-squared test

data: table_E1

X-squared = 8.2621, df = 6, p-value = 0.2195Note the warning, because our table does not meet the Cochran conditions.

8.3.2 Running Fisher’s Exact Test

NOTE: In doing your project B, I would only proceed once I’d identified variables (after whatever collapsing you decide to do) that meet the Cochran conditions (described below.) I would not run Fisher’s test.

Given a small overall sample size, the fisher.test command will also produce a Fisher’s exact test, which may be a little more appropriate here, given the presence of cells with small counts.

fisher.test(table_E1)

Fisher's Exact Test for Count Data

data: table_E1

p-value = 0.2096

alternative hypothesis: two.sidedBased on the Fisher test, we would get only a slightly different conclusion than the Pearson test. However,

- Our conclusions are quite dependent on the choice of \(\alpha\) level.

- Neither test is really appropriate, since we have very small cell counts, including a zero.

8.3.3 Checking Assumptions - The Cochran Conditions

The “Cochran conditions”, which require that we have:

- no cells with 0 counts

- at least 80% of the cells in our table with counts of 5 or higher

- expected counts in each cell of the table should be 5 or more

We don’t meet those Cochran conditions here. In addition, since each cell in our 3x4 table is NOT at least 5, R throws a warning message when we run the Pearson \(\chi^2\) test, and since we don’t meet the Cochran conditions, the fisher.test results are questionable, as well. We should consider whether collapsing or deleting some of the rows or columns might be more reasonable. And we’ll do this next.

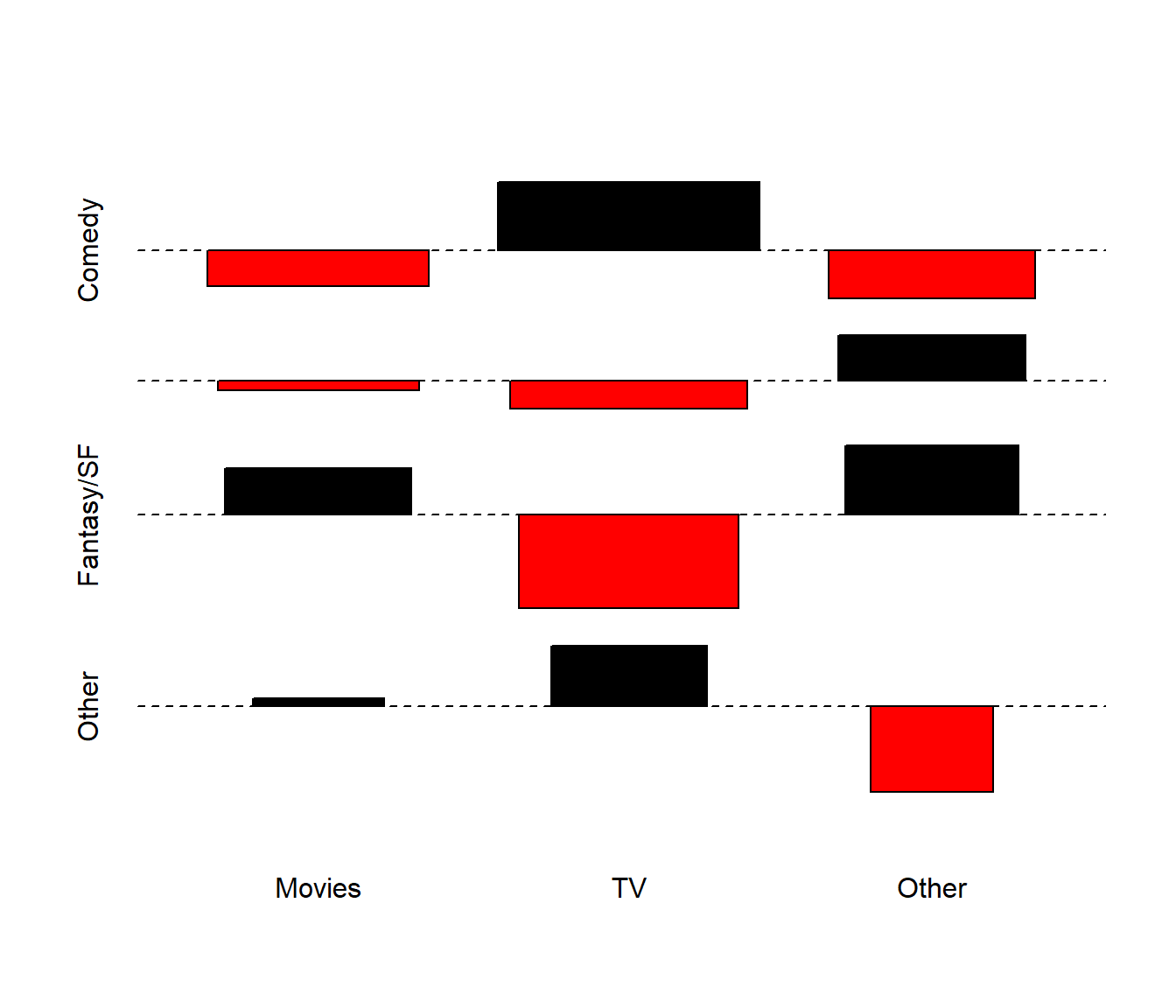

8.3.4 An Association Plot for the 3x4 Table

The assocplot function in R produces a plot that indicates deviations from the assumption of independence of rows and columns in a two-way table. For instance, using our original table, we have:

assocplot(table_E1)

We can see that the independence model really doesn’t work well for the cells with larger shapes here, which we note especially in the Fantasy/SF category, and to some extent in the Comedy category.

Hint: Finding a better scheme for visualizing a contingency table’s relationship to independence (or simply the table itself), especially if it’s using the gt package, would be a good idea to explore further in Analysis E, too, especially if you’re looking to learn to build savvy tables. But this is not necessary, certainly.

8.3.5 A 2x3 Table, After Collapsing (Lumping) Some Small Rows and Columns

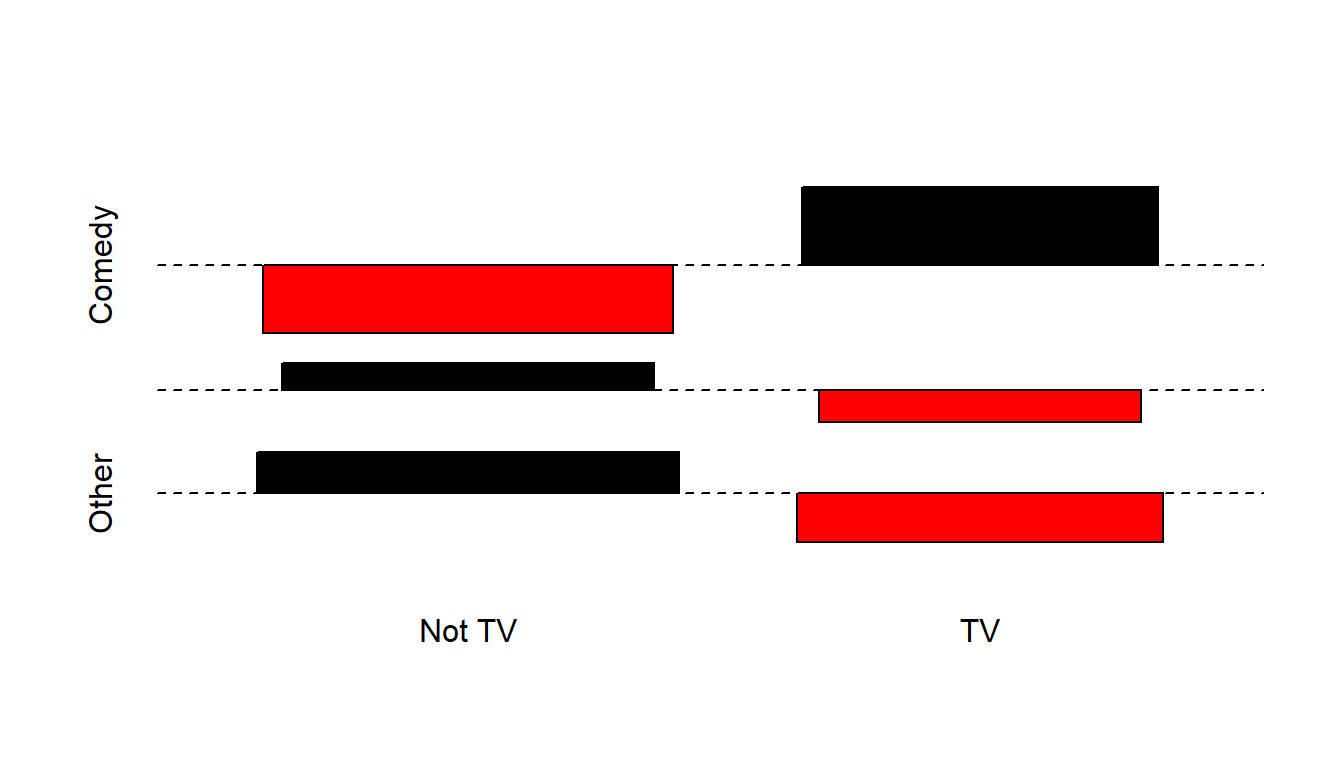

Suppose we instead decided to drop down to a study of TV vs. Other media (combining Movies and Other) and also collapsed the Fantasy/SF and Other columns (so the remaining subjects form a 2x3 table), in an effort to remove zero cells, and reduce the incidence of cells with counts below 5.

First, we’ll combine the Movies and Other groups to create medium_2 from medium_r using fct_recode.

sur_15 <- sur_15 |>

mutate(medium_2 = fct_recode(medium_r,

"Not TV" = "Movies",

"TV" = "TV",

"Not TV" = "Other"))Or, we can use the fct_lump function to lump together the two categories with the smallest overall counts directly, in creating fiction_3 from fiction_r.

sur_15 <- sur_15 |>

mutate(fiction_3 = fct_lump(fiction_r, 2))Let’s call the collapsed table table_E2.

table_E2 <-

table(sur_15$medium_2, sur_15$fiction_3)

table_E2

Comedy Drama Other

Not TV 7 9 12

TV 10 5 6This new 2x3 table loses some fidelity, but gains in that each cell now contains at least 5 subjects. I’ll remind you that in your Project B, we’re requiring you to have even more than that in each cell.

8.3.6 Chi-Square Testing for the 2x3 Table

And here are the results from chi-square testing…

chisq.test(table_E2)

Pearson's Chi-squared test

data: table_E2

X-squared = 2.7279, df = 2, p-value = 0.2556fisher.test(table_E2)

Fisher's Exact Test for Count Data

data: table_E2

p-value = 0.2765

alternative hypothesis: two.sidedFor the project, once all of the cells have at least 5 observations, I recommend the use of the Pearson approach, unless the table is square (# of rows = # of columns), in which case the Fisher test is also a reasonable choice. Generally, the Fisher test is more appropriate when the sample sizes are small. In this case, of course, it doesn’t matter much after collapsing cells and forming this 2x3 table. We’ll close with the association plot for this smaller table, which suggests that the independence model inverts its errors for Comedy as compared to the other two categories.

assocplot(table_E2)

8.4 Conclusions

Using the collapsed table_E2 to meet the Cochran conditions, and either the Pearson or Fisher test we detect no strong association between the favorite consumption method and favorite genre. I’d also spend a bit of time talking about the results of the assocplot, I think, or at least make some effort to indicate where the independence assumption holds less well, even though it doesn’t reach the point where we can reject it with 90% confidence.

Again, it’s your job to identify and discuss your conclusions, as expected in the instructions for Project B Study 1. This is just a demonstration.

9 Session Information

End your report with the session information, in its own section. This will be Section 8 for you, but it’s Section 9 for me because I did all five analyses, whereas you will only do four.

session_info()R version 4.4.2 (2024-10-31 ucrt)

Platform: x86_64-w64-mingw32/x64

Running under: Windows 11 x64 (build 22631)

Locale:

LC_COLLATE=English_United States.utf8

LC_CTYPE=English_United States.utf8

LC_MONETARY=English_United States.utf8

LC_NUMERIC=C

LC_TIME=English_United States.utf8

Package version:

askpass_1.2.1 backports_1.5.0 base64enc_0.1.3

bayestestR_0.15.0 bigD_0.3.0 bit_4.5.0

bit64_4.5.2 bitops_1.0.9 blob_1.2.4

broom_1.0.7 bslib_0.8.0 cachem_1.1.0

callr_3.7.6 cellranger_1.1.0 cli_3.6.3

clipr_0.8.0 cmprsk_2.2-12 coda_0.19-4.1

codetools_0.2-20 colorspace_2.1-1 commonmark_1.9.2

compiler_4.4.2 conflicted_1.2.0 correlation_0.8.6

cpp11_0.5.0 crayon_1.5.3 curl_6.0.1

data.table_1.16.2 datasets_4.4.2 datawizard_0.13.0

DBI_1.2.3 dbplyr_2.5.0 digest_0.6.37

dplyr_1.1.4 dtplyr_1.3.1 easystats_0.7.3

effectsize_0.8.9 emmeans_1.10.5 Epi_2.56

estimability_1.5.1 etm_1.1.1 evaluate_1.0.1

fansi_1.0.6 farver_2.1.2 fastmap_1.2.0

fontawesome_0.5.3 forcats_1.0.0 fs_1.6.5

gargle_1.5.2 generics_0.1.3 ggplot2_3.5.1

glue_1.8.0 googledrive_2.1.1 googlesheets4_1.1.1

graphics_4.4.2 grDevices_4.4.2 grid_4.4.2

gridExtra_2.3 gt_0.11.1 gtable_0.3.6

haven_2.5.4 highr_0.11 hms_1.1.3

htmltools_0.5.8.1 htmlwidgets_1.6.4 httr_1.4.7

ids_1.0.1 infer_1.0.7 insight_0.20.5

isoband_0.2.7 janitor_2.2.0 jquerylib_0.1.4

jsonlite_1.8.9 juicyjuice_0.1.0 knitr_1.49

labeling_0.4.3 lattice_0.22-6 lifecycle_1.0.4

lubridate_1.9.3 magrittr_2.0.3 markdown_1.13

MASS_7.3-61 Matrix_1.7-1 memoise_2.0.1

methods_4.4.2 mgcv_1.9-1 mime_0.12

modelbased_0.8.9 modelr_0.1.11 multcomp_1.4-26

munsell_0.5.1 mvtnorm_1.3-2 naniar_1.1.0

nlme_3.1-166 norm_1.0-11.1 numDeriv_2016.8-1.1

openssl_2.2.2 parallel_4.4.2 parameters_0.23.0

patchwork_1.3.0 performance_0.12.4 pillar_1.9.0

pkgconfig_2.0.3 plyr_1.8.9 prettyunits_1.2.0

processx_3.8.4 progress_1.2.3 ps_1.8.1

purrr_1.0.2 R6_2.5.1 ragg_1.3.3

rappdirs_0.3.3 RColorBrewer_1.1.3 Rcpp_1.0.13-1

RcppArmadillo_14.0.2.1 reactable_0.4.4 reactR_0.6.1

readr_2.1.5 readxl_1.4.3 rematch_2.0.0

rematch2_2.1.2 report_0.5.9 reprex_2.1.1

rlang_1.1.4 rmarkdown_2.29 rstudioapi_0.17.1

rvest_1.0.4 sandwich_3.1-1 sass_0.4.9

scales_1.3.0 see_0.9.0 selectr_0.4.2

snakecase_0.11.1 splines_4.4.2 stats_4.4.2

stringi_1.8.4 stringr_1.5.1 survival_3.7-0

sys_3.4.3 systemfonts_1.1.0 textshaping_0.4.0

TH.data_1.1-2 tibble_3.2.1 tidyr_1.3.1

tidyselect_1.2.1 tidyverse_2.0.0 timechange_0.3.0

tinytex_0.54 tools_4.4.2 tzdb_0.4.0

UpSetR_1.4.0 utf8_1.2.4 utils_4.4.2

uuid_1.2.1 V8_6.0.0 vctrs_0.6.5

viridis_0.6.5 viridisLite_0.4.2 visdat_0.6.0

vroom_1.6.5 withr_3.0.2 xfun_0.49

xml2_1.3.6 xtable_1.8-4 yaml_2.3.10

zoo_1.8-12 Footnotes

Little, Roderick J. A. 1988. “A Test of Missing Completely at Random for Multivariate Data with Missing Values.” Journal of the American Statistical Association 83 (404): 1198–1202. doi:10.1080/01621459.1988.10478722.↩︎

Note the use of inline code here to specify this result. The usual cutoff for meaningful right skew by this measure is around 20% of a standard deviation.↩︎

The usual cutoff for meaningful right skew by this measure is around 20% of a standard deviation.↩︎

There is no need in Project B for you to use both a Holm and a Tukey HSD approach to your ANOVA. Either one is OK.↩︎

Agresti A Coull BA 1988 Approximate is Better than “Exact” for Interval Estimation of Binomial Proportions. The American Statistician 52(2), 119-126. http://www.jstor.org/stable/2685469↩︎