Chapter 15 Other Variable Selection Strategies

15.1 Why not use stepwise procedures?

- The R2 for a model selected in a stepwise manner is biased, high.

- The coefficient estimates and standard errors are biased.

- The \(p\) values for the individual-variable t tests are too small.

- In stepwise analyses of prediction models, the final model represented noise 20-74% of the time.

- In stepwise analyses, the final model usually contained less than half of the actual number of real predictors.

- It is not logical that a population regression coefficient would be exactly zero just because its estimate was not statistically significant.

This last comment applies to things like our “best subsets” approach as well as standard stepwise procedures.

Sander Greenland’s comments on parsimony and stepwise approaches to model selection are worth addressing…

- Stepwise variable selection on confounders leaves important confounders uncontrolled.

- Shrinkage approaches (like ridge regression and the lasso) are far superior to variable selection.

- Variable selection does more damage to confidence interval widths than to point estimates.

If we are seriously concerned about overfitting - winding up with a model that doesn’t perform well on new data - then stepwise approaches generally don’t help.

Vittinghoff et al. (2012) suggest four strategies for minimizing the chance of overfitting

- Pre-specify well-motivated predictors and how to model them.

- Eliminate predictors without using the outcome.

- Use the outcome, but cross-validate the target measure of prediction error.

- Use the outcome, and shrink the coefficient estimates.

The best subsets methods we have studied either include a variable or drop it from the model. Often, this choice is based on only a tiny difference in the quality of a fit to data.

- Harrell (2001): not reasonable to assume that a population regression coefficient would be exactly zero just because it failed to meet a criterion for significance.

- Brad Efron has suggested that a stepwise approach is “overly greedy, impulsively eliminating covariates which are correlated with other covariates.”

So, what’s the alternative?

15.2 Ridge Regression

Ridge regression involves a more smooth transition between useful and not useful predictors which can be obtained by constraining the overall size of the regression coefficients.

Ridge regression assumes that the regression coefficients (after normalization) should not be very large. This is reasonable to assume when you have lots of predictors and you believe many of them have some effect on the outcome.

Pros:

- Some nice statistical properties

- Can be calculated using only standard least squares approaches, so it’s been around for a while.

- Available in the

MASSpackage.

Ridge regression takes the sum of the squared estimated standardized regression coefficients and constrains that sum to only be as large as some value \(k\).

\[ \sum \hat{\beta_j}^2 \leq k. \]

The value \(k\) is one of several available measures of the amount of shrinkage, but the main one used in the MASS package is a value \(\lambda\). As \(\lambda\) increases, the amount of shrinkage goes up, and \(k\) goes down.

15.2.1 Assessing a Ridge Regression Approach

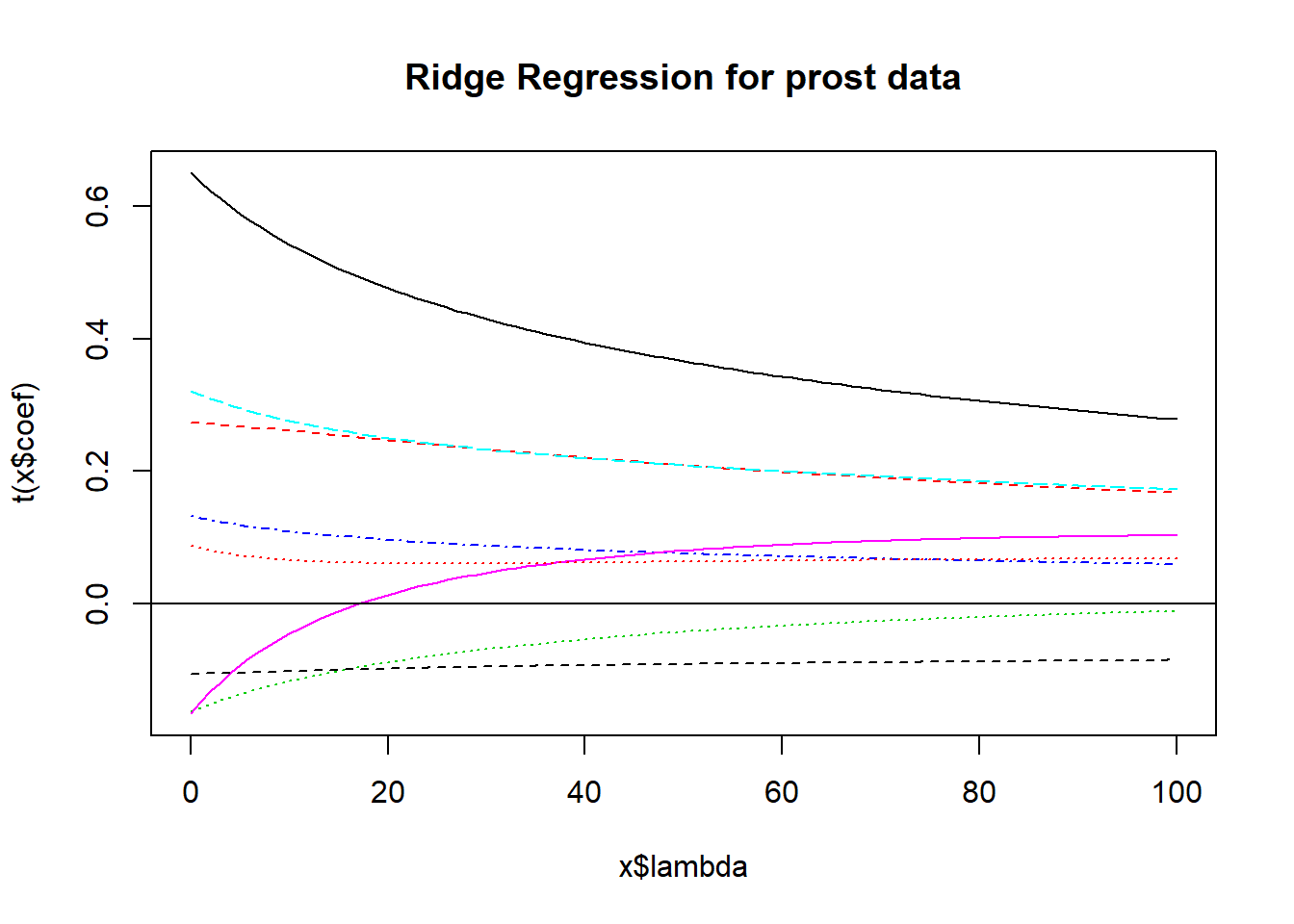

We’ll look at a plot produced by the lm.ridge function for a ridge regression for the prostate cancer study we worked on when studying Stepwise Regression and Best Subsets methods earlier.

- Several (here 101) different values for \(\lambda\), our shrinkage parameter, will be tested.

- Results are plotted so that we see the coefficients across the various (standardized) predictors.

- Each selection of a \(\lambda\) value implies a different vector of covariate values across the predictors we are studying.

- The idea is to pick a value of \(\lambda\) for which the coefficients seem relatively stable.

preds <- with(prost, cbind(lcavol, lweight, age, bph_f,

svi_f, lcp, gleason_f, pgg45))

x <- lm.ridge(prost$lpsa ~ preds, lambda = 0:100)

plot(x)

title("Ridge Regression for prost data")

abline(h = 0)

Usually, you need to use trial and error to decide the range of \(\lambda\) to be tested. Here, 0:100 means going from 0 (no shrinkage) to 100 in steps of 1.

15.2.2 The lm.ridge plot - where do coefficients stabilize?

Does \(\lambda = 20\) seem like a stable spot here?

x <- lm.ridge(prost$lpsa ~ preds, lambda = 0:100)

plot(x)

title("Ridge Regression for prost data")

abline(h = 0)

abline(v=20, lty=2, col="black")

The coefficients at \(\lambda\) = 20 can be determined from the lm.ridge output. These are fully standardized coefficients. The original predictors are centered by their means and then scaled by their standard deviations and the outcome has also been centered, in these models.

predslcavol predslweight predsage predsbph_f predssvi_f

0.482 0.248 -0.091 0.097 0.252

predslcp predsgleason_f predspgg45

0.009 -0.099 0.061 Was an intercept used?

[1] 1Yes, it was. There is an automated way to pick \(\lambda\). Use the select function in the MASS package:

modified HKB estimator is 4.210238

modified L-W estimator is 3.32223

smallest value of GCV at 6 I’ll use the GCV = generalized cross-validation to select \(\lambda\) = 6 instead.

x <- lm.ridge(prost$lpsa ~ preds, lambda = 0:100)

plot(x)

title("Ridge Regression for prost data")

abline(h = 0)

abline(v=6, lty=2, col="black")

predslcavol predslweight predsage predsbph_f predssvi_f

0.58911149 0.26773757 -0.13715070 0.11862949 0.29491008

predslcp predsgleason_f predspgg45

-0.09389545 -0.10477578 0.07250609 15.2.3 Ridge Regression: The Bottom Line

The main problem with ridge regression is that all it does is shrink the coefficient estimates, but it’s not so useful in practical settings because it still includes all variables.

- It’s been easy to do ridge regression for many years, so you see it occasionally in the literature.

- It leads to the lasso, which incorporates the positive features of shrinking regression coefficients with the ability to wisely select some variables to be eliminated from the predictor pool.

15.3 The Lasso

The lasso works by takes the sum of the absolute values of the estimated standardized regression coefficients and constrains it to only be as large as some value k.

\[ \sum \hat{|\beta_j|} \leq k. \]

This looks like a minor change, but it’s not.

15.3.1 Consequences of the Lasso Approach

- In ridge regression, while the individual coefficients shrink and sometimes approach zero, they seldom reach zero and are thus excluded from the model. With the lasso, some coefficients do reach zero and thus, those predictors do drop out of the model.

- So the lasso leads to more parsimonious models than does ridge regression.

- Ridge regression is a method of shrinkage but not model selection. The lasso accomplishes both tasks.

- If k is chosen to be too small, then the model may not capture important characteristics of the data. If k is too large, the model may over-fit the data in the sample and thus not represent the population of interest accurately.

- The lasso is far more difficult computationally than ridge regression (the problem requires an algorithm called least angle regression published in 2004), although R has a library (

lars) which can do the calculations pretty efficiently.

The lasso is not an acronym, but rather refers to cowboys using a rope to pull cattle from the herd, much as we will pull predictors from a model.

15.3.2 How The Lasso Works

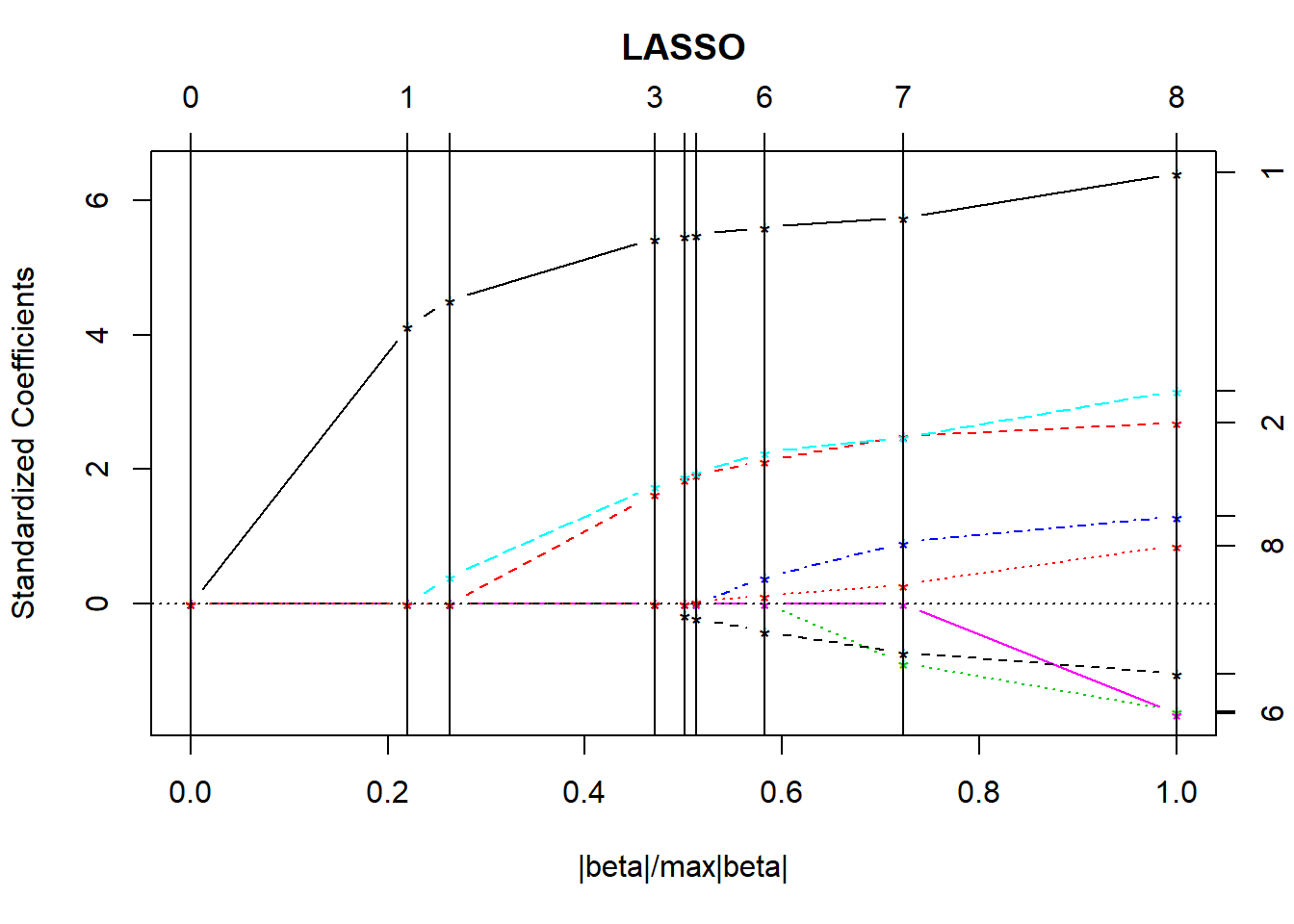

The lars package lets us compute the lasso coefficient estimates and do cross-validation to determine the appropriate amount of shrinkage. The main tool is a pair of graphs.

- The first plot shows what coefficients get selected as we move from constraining all of the coefficients to zero (complete shrinkage) towards fewer constraints all the way up to ordinary least squares, showing which variables are included in the model at each point.

- The second plot suggests where on the first plot we should look for a good model choice, according to a cross-validation approach.

- The y axis shows standardized regression coefficients.

- The

larspackage standardizes all variables so the shrinkage doesn’t penalize some coefficients because of their scale.

- The

- The x-axis is labeled

|beta|/max|beta|.- This ranges from 0 to 1.

- 0 means that the sum of the \(|\hat{\beta_j}|\) is zero (completely shrunk)

- 1 means the ordinary least squares unbiased estimates.

The lasso graph starts at constraining all of the coefficients to zero, and then moves toward ordinary least squares.

Identifiers for the predictors (numbers) are shown to the right of the graph.

The vertical lines in the lasso plot show when a variable has been eliminated from the model, and in fact these are the only points that are actually shown in the default lasso graph. The labels on the top of the graph tell you how many predictors are in the model at that stage.

LARS/LASSO

Call: lars(x = preds, y = prost$lpsa, type = "lasso")

Df Rss Cp

0 1 127.918 168.1835

1 2 76.392 64.1722

2 3 70.247 53.5293

3 4 50.598 15.1017

4 5 49.065 13.9485

5 6 48.550 14.8898

6 7 46.284 12.2276

7 8 44.002 9.5308

8 9 42.772 9.0000Based on the \(C_p\) statistics, it looks like the improvements continue throughout, and don’t really finish happening until we get pretty close to the full model with 9 df.

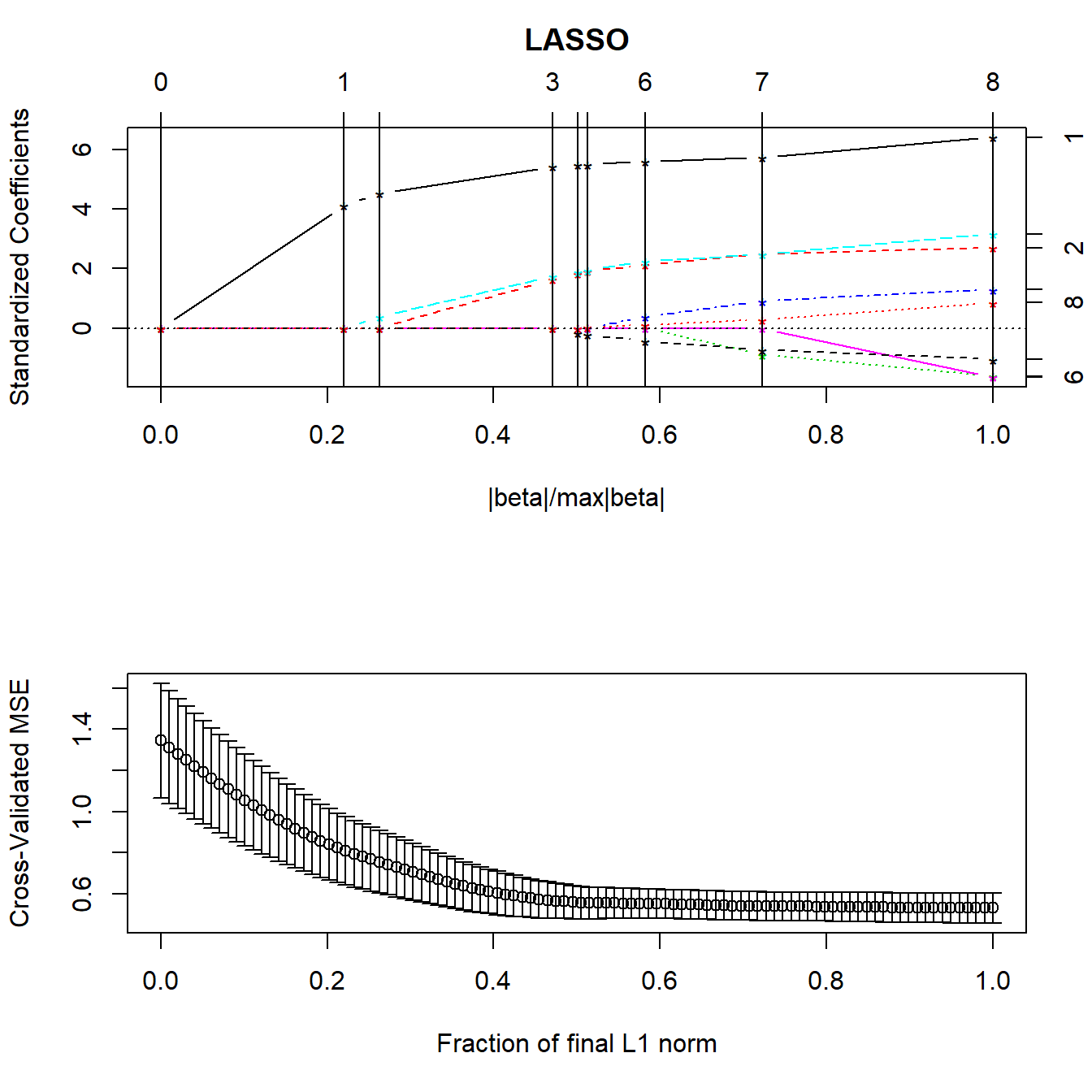

15.3.3 Cross-Validation with the Lasso

Normally, cross-validation methods are used to determine how much shrinkage should be used. We’ll use the cv.lars function.

- 10-fold (K = 10) cross-validation

- the data are randomly divided into 10 groups.

- Nine groups are used to predict the remaining group for each group in turn.

- Overall prediction performance is computed, and the machine calculates a cross-validation criterion (mean squared error) and standard error for that criterion.

The cross-validation plot is the second lasso plot.

We’re looking to minimize cross-validated mean squared error in this plot, which doesn’t seem to happen until the fraction gets very close to 1.

15.3.4 What value of the key fraction minimizes cross-validated MSE?

[1] 1The cross-validation plot suggests we use a fraction of nearly 1.0, suggesting that all of the predictors will be kept in, based on the top LASSO plot.

par(mfrow=c(2,1))

lasso1 <- lars(preds, prost$lpsa, type="lasso")

plot(lasso1)

set.seed(432)

lassocv <- cv.lars(preds, prost$lpsa, K=10)

15.3.5 Coefficients for the Model Identified by the Cross-Validation

lcavol lweight age bph_f svi_f lcp gleason_f pgg45

0.5550 0.6420 -0.0220 0.1552 0.7812 -0.1198 -0.1846 0.0031 So the model suggested by the lasso still includes all eight of these predictors.

15.3.6 Obtaining Fitted Values from Lasso

$s

[1] 1

$fraction

[1] 1

$mode

[1] "fraction"

$fit

[1] 0.7981479 0.7452123 0.5047527 0.6051627 1.6984386 0.8321833 1.8281390

[8] 2.1333745 1.2493589 1.2626371 1.4669434 0.7736890 2.0754442 1.9125642

[15] 2.1526264 1.8103388 1.2670402 2.3921331 1.3259257 1.7692117 1.9759115

[22] 2.7420125 1.1640356 2.4797587 1.8024473 1.9100031 2.0164183 1.7846865

[29] 1.9707330 2.1562795 2.0359132 1.8658364 1.6927577 1.3572705 1.0450002

[36] 2.9154581 2.1796083 1.0436497 3.8894710 1.7956357 2.1016088 2.3261270

[43] 2.0788225 2.5248165 2.4462645 2.5880361 4.0702259 2.6868568 2.7106992

[50] 1.9547992 2.4558606 2.9843790 2.1921094 3.0522425 3.3516038 2.9824834

[57] 1.7591653 2.3487167 2.2871618 2.6256177 2.3046839 3.5581817 2.9732302

[64] 3.6777383 2.5104085 2.6597765 2.9467963 3.0901247 1.5062634 3.0294526

[71] 3.2912806 2.1092781 2.8890738 3.4988268 3.6925330 3.6049797 3.2758657

[78] 3.4559699 3.4011748 3.1824772 2.0470770 2.8945197 3.6756633 3.3286276

[85] 2.6958068 3.8336368 2.9955258 3.0589058 4.2901466 3.1001571 3.3809665

[92] 4.0343586 3.8332468 4.7608240 3.6275110 4.1408446 4.093786615.3.7 Complete Set of Fitted Values from the Lasso

[1] 0.798 0.745 0.505 0.605 1.698 0.832 1.828 2.133 1.249 1.263 1.467 0.774

[13] 2.075 1.913 2.153 1.810 1.267 2.392 1.326 1.769 1.976 2.742 1.164 2.480

[25] 1.802 1.910 2.016 1.785 1.971 2.156 2.036 1.866 1.693 1.357 1.045 2.915

[37] 2.180 1.044 3.889 1.796 2.102 2.326 2.079 2.525 2.446 2.588 4.070 2.687

[49] 2.711 1.955 2.456 2.984 2.192 3.052 3.352 2.982 1.759 2.349 2.287 2.626

[61] 2.305 3.558 2.973 3.678 2.510 2.660 2.947 3.090 1.506 3.029 3.291 2.109

[73] 2.889 3.499 3.693 3.605 3.276 3.456 3.401 3.182 2.047 2.895 3.676 3.329

[85] 2.696 3.834 2.996 3.059 4.290 3.100 3.381 4.034 3.833 4.761 3.628 4.141

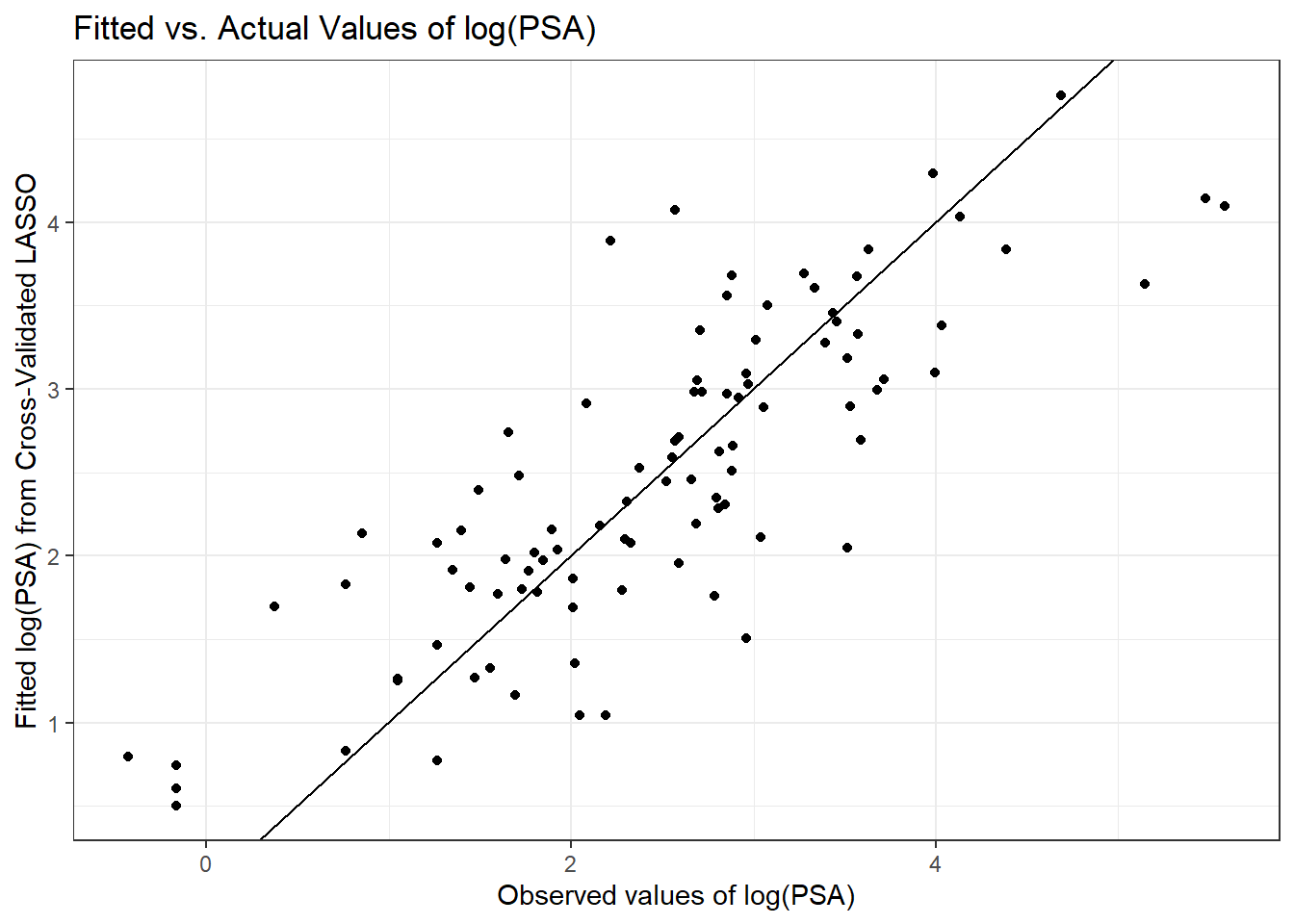

[97] 4.094To assess the quality of these predictions, we might plot them against the observed values of our outcome (lpsa), or we might look at residuals vs. these fitted values.

prost_lasso_res <- data_frame(fitted = fits.cv$fit,

actual = prost$lpsa,

resid = actual - fitted)

ggplot(prost_lasso_res, aes(x = actual, y = fitted)) +

geom_point() +

geom_abline(slope = 1, intercept = 0) +

labs(y = "Fitted log(PSA) from Cross-Validated LASSO",

x = "Observed values of log(PSA)",

title = "Fitted vs. Actual Values of log(PSA)")

ggplot(prost_lasso_res, aes(x = fitted, y = resid)) +

geom_point() +

geom_hline(yintercept = 0, col = "red") +

geom_smooth(method = "loess", col = "blue", se = F) +

labs(x = "LASSO-fitted log(PSA)",

y = "Residuals from Cross-Validated LASSO model",

title = "Residuals vs. Fitted Values of log(PSA) from LASSO",

subtitle = "with loess smooth")`geom_smooth()` using formula 'y ~ x'

15.3.8 When is the Lasso Most Useful?

As Faraway (2015) suggests, the lasso is particularly useful when we believe the effects are sparse, in the sense that we believe that few of the many predictors we are evaluating have a meaningful effect.

Consider, for instance, the analysis of gene expression data, where we have good reason to believe that only a small number of genes have an influence on our response of interest.

Or, in medical claims data, where we can have thousands of available codes to search through that may apply to some of the people included in a large analysis relating health care costs to outcomes.

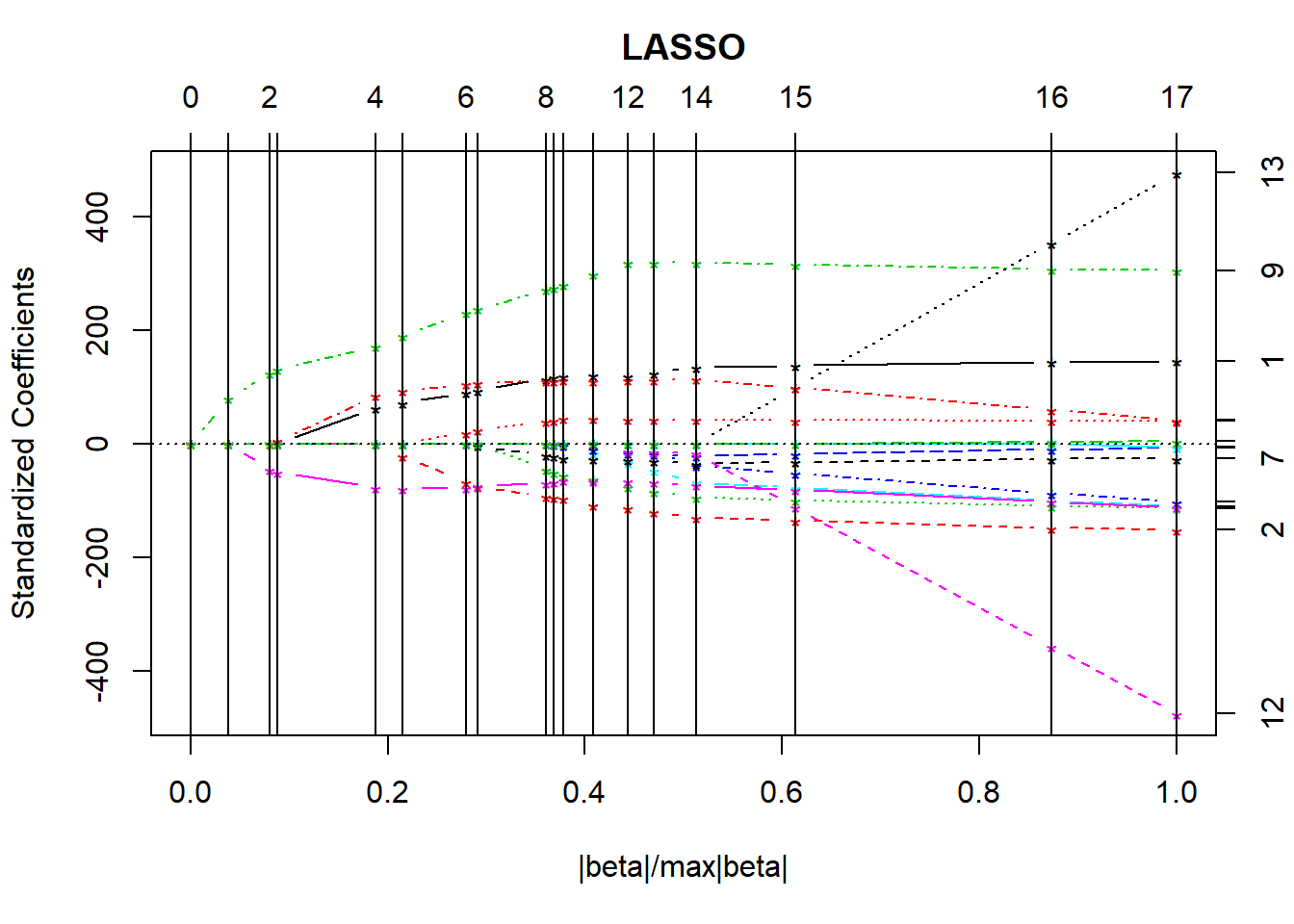

15.4 Applying the Lasso to the pollution data

Let’s consider the lasso approach in application to the pollution data we’ve seen previously. Recall that we have 60 observations on an outcome, y, and 15 predictors, labeled x1 through x15.

preds <- with(pollution, cbind(x1, x2, x3, x4, x5, x6, x7,

x8, x9, x10, x11, x12, x13,

x14, x15))

lasso_p1 <- lars(preds, pollution$y, type="lasso")

plot(lasso_p1)

LARS/LASSO

Call: lars(x = preds, y = pollution$y, type = "lasso")

Df Rss Cp

0 1 228311 129.1367

1 2 185419 95.9802

2 3 149370 68.4323

3 4 143812 65.8764

4 5 92077 25.4713

5 6 83531 20.4668

6 7 69532 10.9922

7 8 67682 11.4760

8 9 60689 7.7445

9 10 60167 9.3163

10 11 59609 10.8588

11 12 58287 11.7757

12 13 57266 12.9383

13 14 56744 14.5107

14 13 56159 12.0311

15 14 55238 13.2765

16 15 53847 14.1361

17 16 53681 16.0000Based on the \(C_p\) statistics, it looks like the big improvements occur somewhere around the move from 6 to 7 df. Let’s look at the cross-validation.

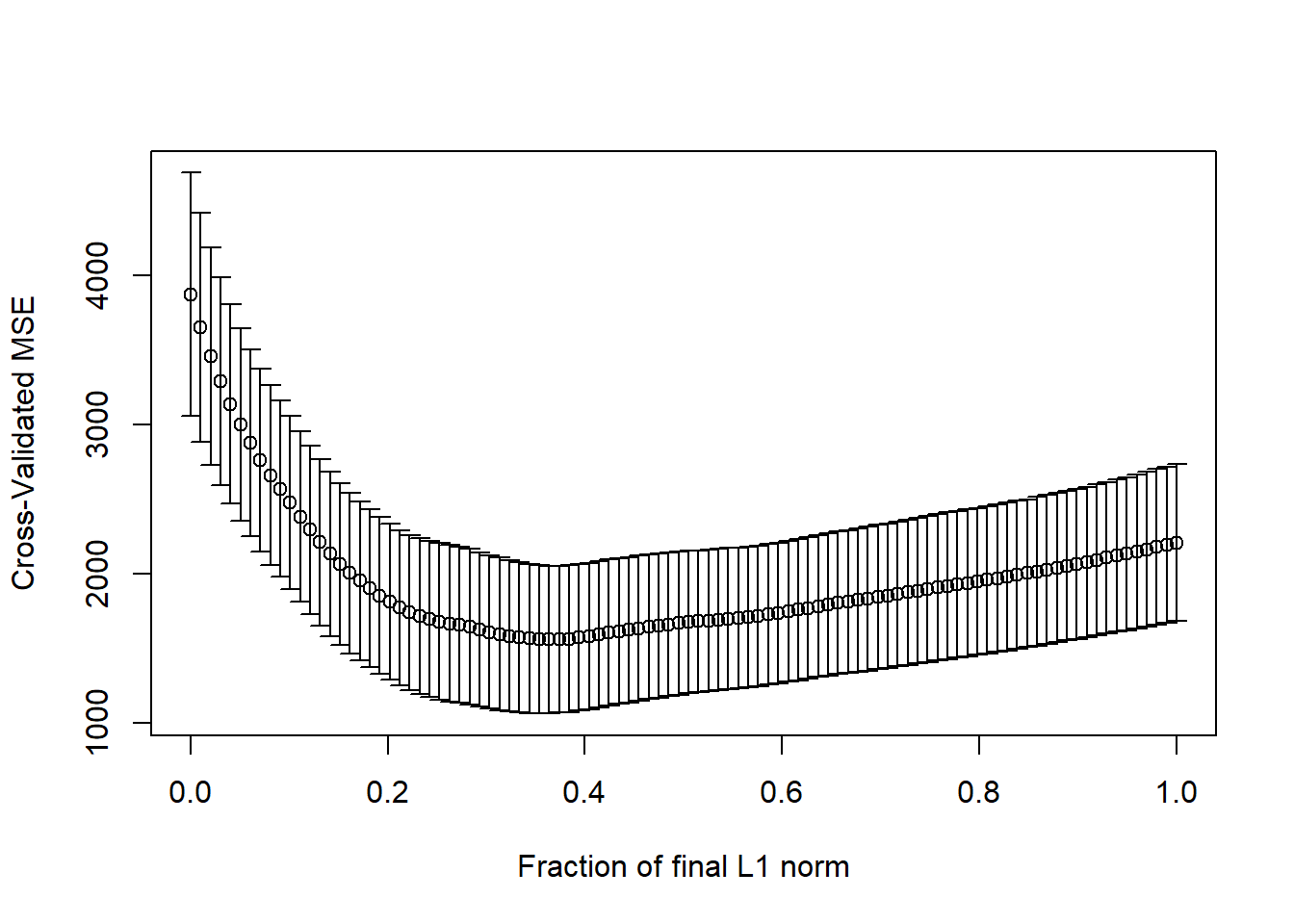

Here it looks like cross-validated MSE happens somewhere between a fraction of 0.2 and 0.4.

[1] 0.3636364par(mfrow=c(2,1))

lasso_p1 <- lars(preds, pollution$y, type="lasso")

plot(lasso_p1)

set.seed(432012)

pollution_lassocv <- cv.lars(preds, pollution$y, K=10)

It looks like a model with 6-8 predictors will be the most useful. The cross-validated coefficients are as follows:

x1 x2 x3 x4 x5 x6 x7 x8 x9 x10

1.511 -1.194 -1.278 0.000 0.000 -10.394 -0.519 0.004 3.992 -0.016

x11 x12 x13 x14 x15

0.000 0.000 0.000 0.229 0.000 Note that by this cross-validated lasso selection, not only are the coefficients for the 8 variables remaining in the model shrunken, but variables x4, x5, x10, x11, x12, x13 and x15 are all dropped from the model, and model x8 almost is, as well.

poll_fits <- predict.lars(lasso_p1, preds, s=frac,

type="fit", mode="fraction")

round(poll_fits$fit,3) [1] 933.179 918.621 921.473 987.781 1051.082 1067.800 912.441 916.116

[9] 950.767 926.550 997.107 1018.063 977.723 955.038 931.881 891.788

[17] 931.634 867.682 973.719 941.645 879.011 906.369 974.116 919.592

[25] 932.388 957.082 912.097 925.046 873.603 984.542 1043.996 913.444

[33] 938.098 884.809 990.042 932.188 1014.197 970.005 1004.448 984.370

[41] 896.646 918.426 933.802 1005.297 910.259 976.554 829.222 908.848

[49] 824.828 896.169 908.915 918.541 926.658 917.542 991.639 878.248

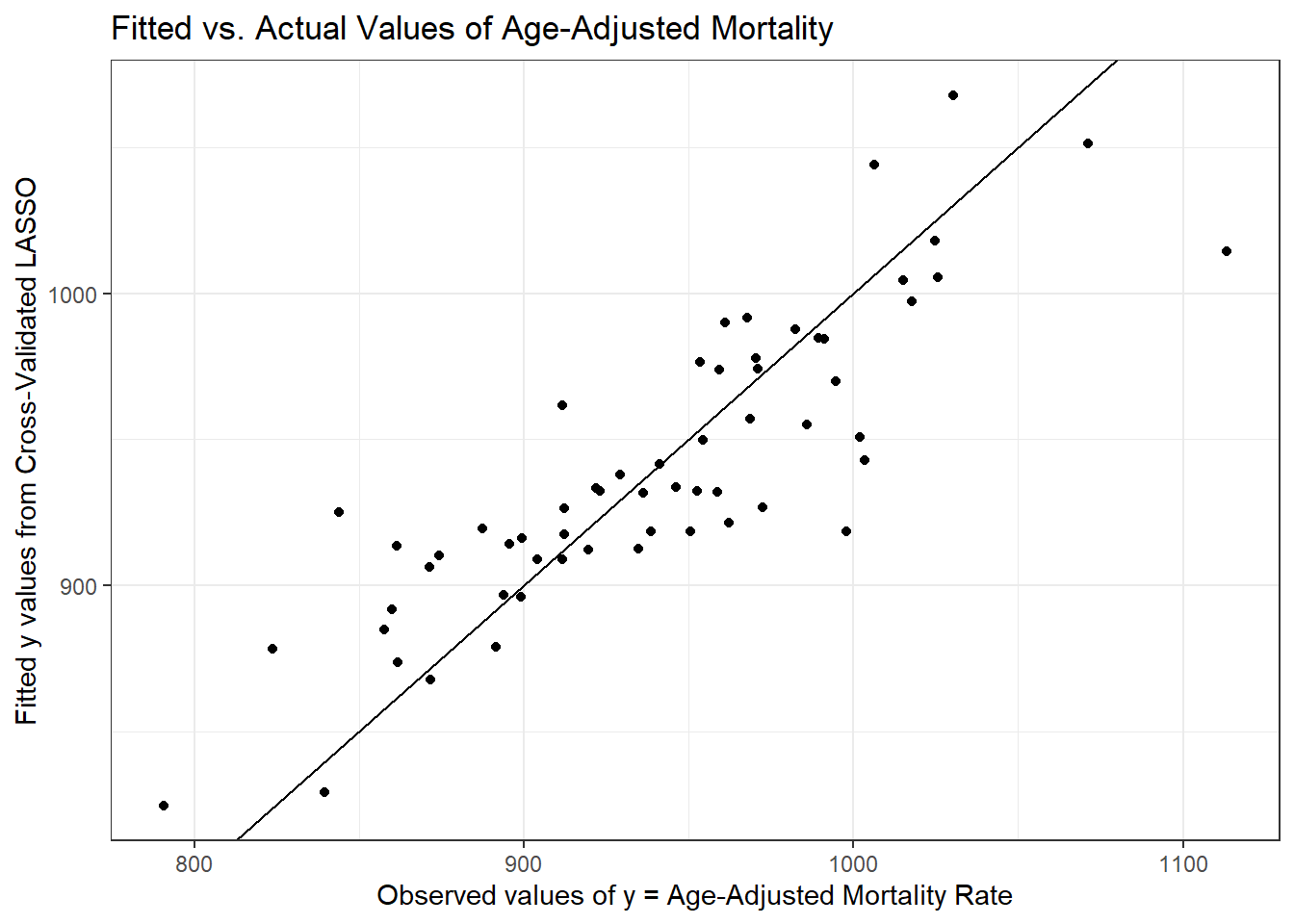

[57] 942.784 914.382 961.495 949.723Here’s a plot of the actual pollution y values, against these fitted values.

poll_lasso_res <- data_frame(fitted = poll_fits$fit,

actual = pollution$y,

resid = actual - fitted)

ggplot(poll_lasso_res, aes(x = actual, y = fitted)) +

geom_point() +

geom_abline(slope = 1, intercept = 0) +

labs(y = "Fitted y values from Cross-Validated LASSO",

x = "Observed values of y = Age-Adjusted Mortality Rate",

title = "Fitted vs. Actual Values of Age-Adjusted Mortality")

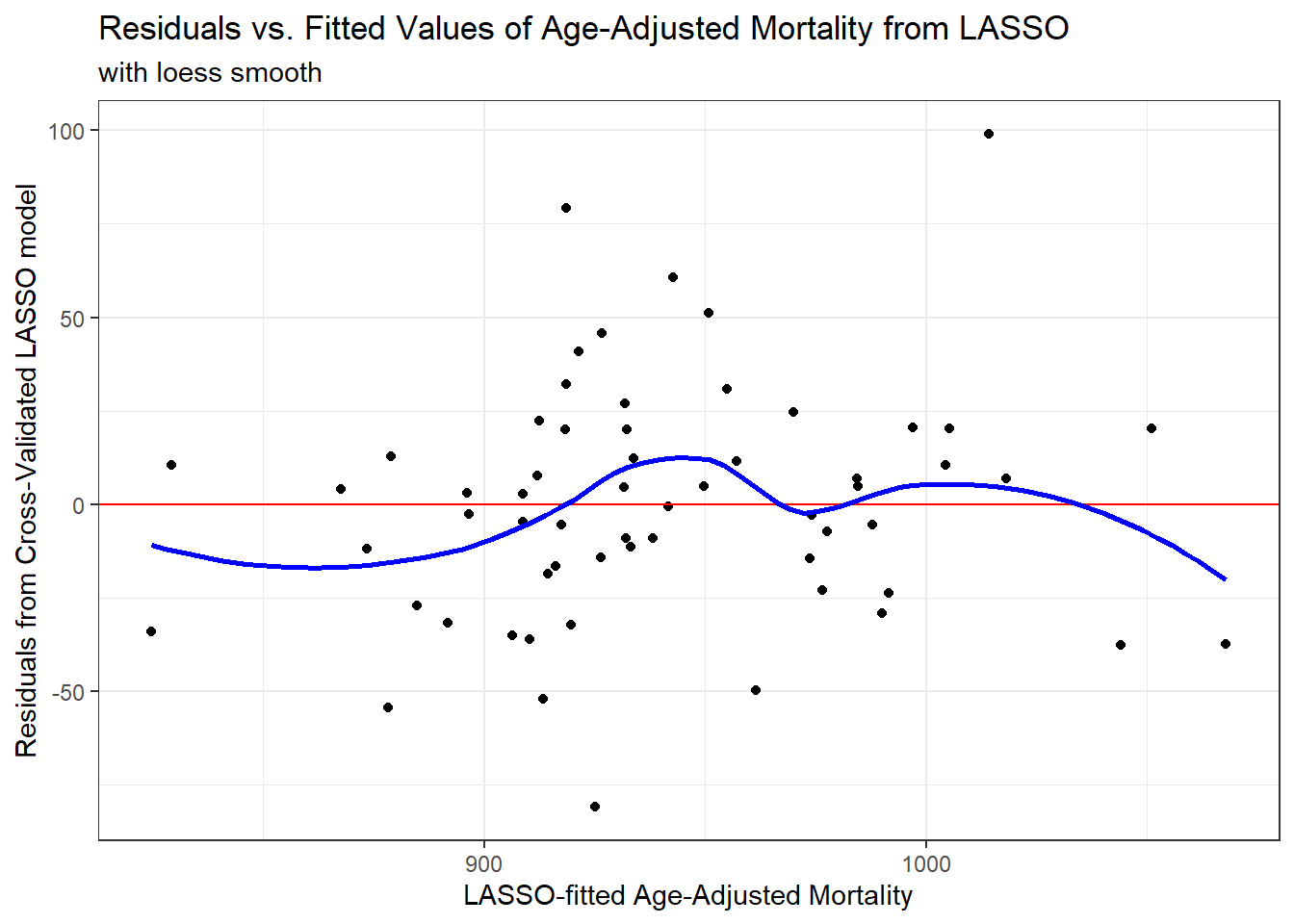

And now, here’s a plot or residuals vs. fitted values.

ggplot(poll_lasso_res, aes(x = fitted, y = resid)) +

geom_point() +

geom_hline(yintercept = 0, col = "red") +

geom_smooth(method = "loess", col = "blue", se = F) +

labs(x = "LASSO-fitted Age-Adjusted Mortality",

y = "Residuals from Cross-Validated LASSO model",

title = "Residuals vs. Fitted Values of Age-Adjusted Mortality from LASSO",

subtitle = "with loess smooth")`geom_smooth()` using formula 'y ~ x'

References

Faraway, Julian J. 2015. Linear Models with R. Second. Boca Raton, FL: CRC Press.

Harrell, Frank E. 2001. Regression Modeling Strategies. New York: Springer.

Vittinghoff, Eric, David V. Glidden, Stephen C. Shiboski, and Charles E. McCulloch. 2012. Regression Methods in Biostatistics: Linear, Logistic, Survival, and Repeated Measures Models. Second Edition. Springer-Verlag, Inc. http://www.biostat.ucsf.edu/vgsm/.