knitr::opts_chunk$set(comment = NA)

library(janitor)

library(broom)

library(mosaic)

library(tidyverse)

theme_set(theme_bw())12 Analysis of Covariance with the SMART data

In this chapter, we’ll work with the smart_cle1_sh data file that we developed in Chapter 7.

12.1 R Setup Used Here

12.1.1 Data Load

smart_cle1_sh <- readRDS("data/smart_cle1_sh.Rds")12.2 A New Small Study: Predicting BMI

We’ll begin by investigating the problem of predicting bmi, at first with just three regression inputs: smoke100, female and physhealth, in our smart_cle1_sh data set.

- The outcome of interest is

bmi. - Inputs to the regression model are:

smoke100= 1 if the subject has smoked 100 cigarettes in their lifetimefemale= 1 if the subject is female, and 0 if they are malephyshealth= number of poor physical health days in past 30 (treated as quantitative)

12.2.1 Does smoke100 predict bmi well?

12.2.1.1 Graphical Assessment

ggplot(smart_cle1_sh, aes(x = smoke100, y = bmi)) +

geom_point()

Not so helpful. We should probably specify that smoke100 is a factor, and try another plotting approach.

ggplot(smart_cle1_sh, aes(x = factor(smoke100), y = bmi)) +

geom_boxplot()

The median BMI looks a little higher for those who have smoked 100 cigarettes. Let’s see if a model reflects that.

12.3 mod1: A simple t-test model

mod1 <- lm(bmi ~ smoke100, data = smart_cle1_sh)

mod1

Call:

lm(formula = bmi ~ smoke100, data = smart_cle1_sh)

Coefficients:

(Intercept) smoke100

27.9150 0.8996 summary(mod1)

Call:

lm(formula = bmi ~ smoke100, data = smart_cle1_sh)

Residuals:

Min 1Q Median 3Q Max

-15.515 -3.995 -0.844 2.865 41.745

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 27.9150 0.2624 106.387 <2e-16 ***

smoke100 0.8996 0.3776 2.382 0.0174 *

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 6.352 on 1131 degrees of freedom

Multiple R-squared: 0.004993, Adjusted R-squared: 0.004113

F-statistic: 5.675 on 1 and 1131 DF, p-value: 0.01737confint(mod1) 2.5 % 97.5 %

(Intercept) 27.4001291 28.429781

smoke100 0.1586812 1.640556tidy(mod1, conf.int = TRUE, conf.level = 0.95)# A tibble: 2 × 7

term estimate std.error statistic p.value conf.low conf.high

<chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 (Intercept) 27.9 0.262 106. 0 27.4 28.4

2 smoke100 0.900 0.378 2.38 0.0174 0.159 1.64glance(mod1)# A tibble: 1 × 12

r.squared adj.r.squared sigma statistic p.value df logLik AIC BIC

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 0.00499 0.00411 6.35 5.68 0.0174 1 -3701. 7409. 7424.

# ℹ 3 more variables: deviance <dbl>, df.residual <int>, nobs <int>The model suggests, based on these 1133 subjects, that

- our best prediction for non-smokers is BMI = 27.94 kg/m2, and

- our best prediction for those who have smoked 100 cigarettes is BMI = 27.94 + 0.87 = 28.81 kg/m2.

- the mean difference between smokers and non-smokers is +0.87 kg/m2 in BMI

- a 95% confidence (uncertainty) interval for that mean smoker - non-smoker difference in BMI ranges from 0.13 to 1.61

- the model accounts for 0.47% of the variation in BMI, so that knowing the respondent’s tobacco status does very little to reduce the size of the prediction errors as compared to an intercept only model that would predict the overall mean BMI for each of our subjects.

- the model makes some enormous errors, with one subject being predicted to have a BMI 42 points lower than his/her actual BMI.

Note that this simple regression model just gives us the t-test.

t.test(bmi ~ smoke100, var.equal = TRUE, data = smart_cle1_sh)

Two Sample t-test

data: bmi by smoke100

t = -2.3823, df = 1131, p-value = 0.01737

alternative hypothesis: true difference in means between group 0 and group 1 is not equal to 0

95 percent confidence interval:

-1.6405563 -0.1586812

sample estimates:

mean in group 0 mean in group 1

27.91495 28.81457 12.4 mod2: Adding another predictor (two-way ANOVA without interaction)

When we add in the information about female (sex) to our original model, we might first picture the data. We could look at separate histograms,

ggplot(smart_cle1_sh, aes(x = bmi)) +

geom_histogram(bins = 30) +

facet_grid(female ~ smoke100, labeller = label_both)

or maybe boxplots?

ggplot(smart_cle1_sh, aes(x = factor(female), y = bmi)) +

geom_boxplot() +

facet_wrap(~ smoke100, labeller = label_both)

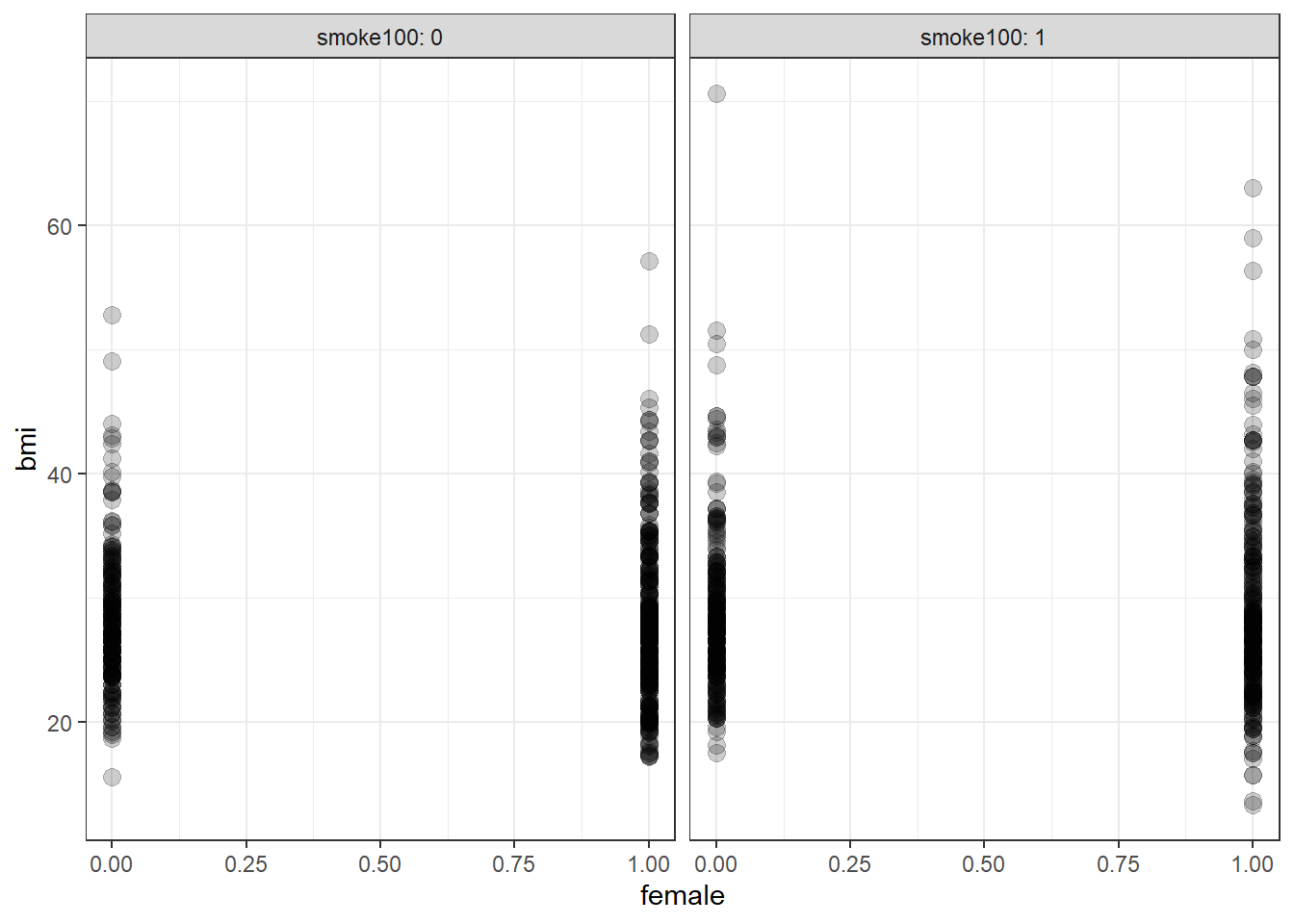

ggplot(smart_cle1_sh, aes(x = female, y = bmi))+

geom_point(size = 3, alpha = 0.2) +

theme_bw() +

facet_wrap(~ smoke100, labeller = label_both)

OK. Let’s try fitting a model.

mod2 <- lm(bmi ~ female + smoke100, data = smart_cle1_sh)

mod2

Call:

lm(formula = bmi ~ female + smoke100, data = smart_cle1_sh)

Coefficients:

(Intercept) female smoke100

28.0101 -0.1479 0.8842 This new model predicts only four predicted values:

bmi= 28.0265 if the subject is male and has not smoked 100 cigarettes (sofemale= 0 andsmoke100= 0)bmi= 28.0265 - 0.1342 = 27.8923 if the subject is female and has not smoked 100 cigarettes (female= 1 andsmoke100= 0)bmi= 28.0265 + 0.8555 = 28.8820 if the subject is male and has smoked 100 cigarettes (sofemale= 0 andsmoke100= 1), and, finallybmi= 28.0265 - 0.1342 + 0.8555 = 28.7478 if the subject is female and has smoked 100 cigarettes (so bothfemaleandsmoke100= 1).

Another way to put this is that for those who have not smoked 100 cigarettes, the model is:

bmi= 28.0265 - 0.1342female

and for those who have smoked 100 cigarettes, the model is:

bmi= 28.8820 - 0.1342female

Only the intercept of the bmi-female model changes depending on smoke100.

summary(mod2)

Call:

lm(formula = bmi ~ female + smoke100, data = smart_cle1_sh)

Residuals:

Min 1Q Median 3Q Max

-15.446 -3.960 -0.854 2.790 41.666

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 28.0101 0.3615 77.475 <2e-16 ***

female -0.1479 0.3864 -0.383 0.7019

smoke100 0.8842 0.3799 2.327 0.0201 *

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 6.354 on 1130 degrees of freedom

Multiple R-squared: 0.005122, Adjusted R-squared: 0.003361

F-statistic: 2.909 on 2 and 1130 DF, p-value: 0.05495confint(mod2) 2.5 % 97.5 %

(Intercept) 27.3007728 28.7194992

female -0.9061739 0.6102793

smoke100 0.1388225 1.6296303The slopes of both female and smoke100 have confidence intervals that are completely below zero, indicating that both female sex and smoke100 appear to be associated with reductions in bmi.

The \(R^2\) value suggests that 0.4788% of the variation in bmi is accounted for by this ANOVA model.

In fact, this regression (on two binary indicator variables) is simply a two-way ANOVA model without an interaction term.

anova(mod2)Analysis of Variance Table

Response: bmi

Df Sum Sq Mean Sq F value Pr(>F)

female 1 16 16.163 0.4003 0.52705

smoke100 1 219 218.721 5.4171 0.02012 *

Residuals 1130 45625 40.376

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 112.5 mod3: Adding the interaction term (Two-way ANOVA with interaction)

Suppose we want to let the effect of female vary depending on the smoke100 status. Then we need to incorporate an interaction term in our model.

mod3 <- lm(bmi ~ female * smoke100, data = smart_cle1_sh)

mod3

Call:

lm(formula = bmi ~ female * smoke100, data = smart_cle1_sh)

Coefficients:

(Intercept) female smoke100 female:smoke100

28.2687 -0.5499 0.4112 0.7996 So, for example, for a male who has smoked 100 cigarettes, this model predicts

bmi= 28.269 - 0.506 (0) + 0.412 (1) + 0.754 (0)(1) = 28.269 + 0.412 = 28.681

And for a female who has smoked 100 cigarettes, the model predicts

bmi= 28.269 - 0.506 (1) + 0.412 (1) + 0.754 (1)(1) = 28.269 - 0.506 + 0.412 + 0.754 = 28.929

For those who have not smoked 100 cigarettes, the model is:

bmi= 28.269 - 0.506female

But for those who have smoked 100 cigarettes, the model is:

bmi= (28.269 + 0.412) + (-0.506 + 0.754)female, or ,,,bmi= 28.681 - 0.248female

Now, both the slope and the intercept of the bmi-female model change depending on smoke100.

summary(mod3)

Call:

lm(formula = bmi ~ female * smoke100, data = smart_cle1_sh)

Residuals:

Min 1Q Median 3Q Max

-15.630 -3.939 -0.879 2.760 41.880

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 28.2687 0.4395 64.318 <2e-16 ***

female -0.5499 0.5480 -1.004 0.316

smoke100 0.4112 0.5945 0.692 0.489

female:smoke100 0.7996 0.7729 1.035 0.301

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 6.354 on 1129 degrees of freedom

Multiple R-squared: 0.006064, Adjusted R-squared: 0.003423

F-statistic: 2.296 on 3 and 1129 DF, p-value: 0.07614confint(mod3) 2.5 % 97.5 %

(Intercept) 27.4063786 29.1310929

female -1.6250493 0.5252321

smoke100 -0.7552196 1.5775258

female:smoke100 -0.7167828 2.3160753In fact, this regression (on two binary indicator variables and a product term) is simply a two-way ANOVA model with an interaction term.

anova(mod3)Analysis of Variance Table

Response: bmi

Df Sum Sq Mean Sq F value Pr(>F)

female 1 16 16.163 0.4003 0.52704

smoke100 1 219 218.721 5.4175 0.02011 *

female:smoke100 1 43 43.219 1.0705 0.30106

Residuals 1129 45581 40.373

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1The interaction term doesn’t change very much here. Its uncertainty interval includes zero, and the overall model still accounts for less than 1% of the variation in bmi.

12.6 mod4: Using smoke100 and physhealth in a model for bmi

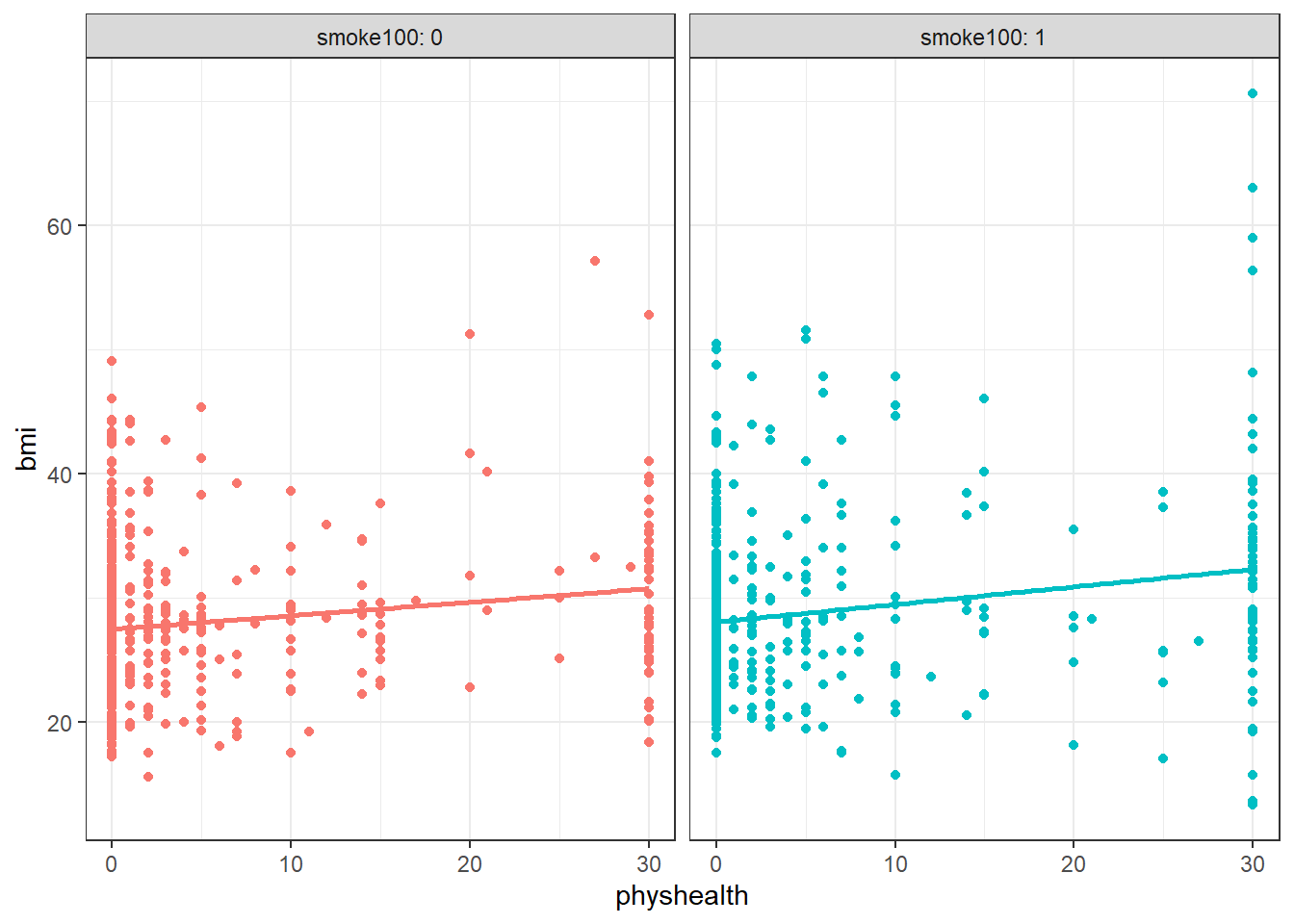

ggplot(smart_cle1_sh, aes(x = physhealth, y = bmi, color = factor(smoke100))) +

geom_point() +

guides(col = "none") +

geom_smooth(method = "lm", se = FALSE) +

facet_wrap(~ smoke100, labeller = label_both) `geom_smooth()` using formula = 'y ~ x'

Does the difference in slopes of bmi and physhealth for those who have and haven’t smoked at least 100 cigarettes appear to be substantial and important?

mod4 <- lm(bmi ~ smoke100 * physhealth, data = smart_cle1_sh)

summary(mod4)

Call:

lm(formula = bmi ~ smoke100 * physhealth, data = smart_cle1_sh)

Residuals:

Min 1Q Median 3Q Max

-18.800 -3.785 -0.596 2.615 38.460

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 27.48538 0.28609 96.073 < 2e-16 ***

smoke100 0.63964 0.41697 1.534 0.125302

physhealth 0.10617 0.03026 3.509 0.000467 ***

smoke100:physhealth 0.02631 0.04084 0.644 0.519497

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 6.259 on 1129 degrees of freedom

Multiple R-squared: 0.03544, Adjusted R-squared: 0.03288

F-statistic: 13.83 on 3 and 1129 DF, p-value: 7.37e-09Does it seem as though the addition of physhealth has improved our model substantially over a model with smoke100 alone (which, you recall, was mod1)?

Since the mod4 model contains the mod1 model’s predictors as a subset and the outcome is the same for each model, we consider the models nested and have some extra tools available to compare them.

- I might start by looking at the basic summaries for each model.

glance(mod4)# A tibble: 1 × 12

r.squared adj.r.squared sigma statistic p.value df logLik AIC BIC

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 0.0354 0.0329 6.26 13.8 0.00000000737 3 -3684. 7377. 7403.

# ℹ 3 more variables: deviance <dbl>, df.residual <int>, nobs <int>glance(mod1)# A tibble: 1 × 12

r.squared adj.r.squared sigma statistic p.value df logLik AIC BIC

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 0.00499 0.00411 6.35 5.68 0.0174 1 -3701. 7409. 7424.

# ℹ 3 more variables: deviance <dbl>, df.residual <int>, nobs <int>- The \(R^2\) is much larger for the model with

physhealth, but still very tiny. - Smaller AIC and smaller BIC statistics are more desirable. Here, there’s little to choose from, so

mod4looks better, too. - We might also consider a significance test by looking at an ANOVA model comparison. This is only appropriate because

mod1is nested inmod4.

anova(mod4, mod1)Analysis of Variance Table

Model 1: bmi ~ smoke100 * physhealth

Model 2: bmi ~ smoke100

Res.Df RSS Df Sum of Sq F Pr(>F)

1 1129 44234

2 1131 45630 -2 -1396.3 17.819 2.405e-08 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1The addition of the physhealth term appears to be an improvement, not that that means very much.

12.7 Making Predictions with a Linear Regression Model

Recall model 4, which yields predictions for body mass index on the basis of the main effects of having smoked (smoke100) and days of poor physical health (physhealth) and their interaction.

mod4

Call:

lm(formula = bmi ~ smoke100 * physhealth, data = smart_cle1_sh)

Coefficients:

(Intercept) smoke100 physhealth

27.48538 0.63964 0.10617

smoke100:physhealth

0.02631 12.7.1 Fitting an Individual Prediction and 95% Prediction Interval

What do we predict for the bmi of a subject who has smoked at least 100 cigarettes in their life and had 8 poor physical health days in the past 30?

new1 <- tibble(smoke100 = 1, physhealth = 8)

predict(mod4, newdata = new1, interval = "prediction", level = 0.95) fit lwr upr

1 29.18491 16.89142 41.47839The predicted bmi for this new subject is shown above. The prediction interval shows the bounds of a 95% uncertainty interval for a predicted bmi for an individual smoker1 who has 8 days of poor physical health out of the past 30. From the predict function applied to a linear model, we can get the prediction intervals for any new data points in this manner.

12.7.2 Confidence Interval for an Average Prediction

- What do we predict for the average body mass index of a population of subjects who are smokers and have

physhealth = 8?

predict(mod4, newdata = new1, interval = "confidence", level = 0.95) fit lwr upr

1 29.18491 28.63866 29.73115- How does this result compare to the prediction interval?

12.7.3 Fitting Multiple Individual Predictions to New Data

- How does our prediction change for a respondent if they instead have 7, or 9 poor physical health days? What if they have or don’t have a history of smoking?

c8_new2 <- tibble(subjectid = 1001:1006, smoke100 = c(1, 1, 1, 0, 0, 0), physhealth = c(7, 8, 9, 7, 8, 9))

pred2 <- predict(mod4, newdata = c8_new2, interval = "prediction", level = 0.95) |> as_tibble()

result2 <- bind_cols(c8_new2, pred2)

result2# A tibble: 6 × 6

subjectid smoke100 physhealth fit lwr upr

<int> <dbl> <dbl> <dbl> <dbl> <dbl>

1 1001 1 7 29.1 16.8 41.3

2 1002 1 8 29.2 16.9 41.5

3 1003 1 9 29.3 17.0 41.6

4 1004 0 7 28.2 15.9 40.5

5 1005 0 8 28.3 16.0 40.6

6 1006 0 9 28.4 16.1 40.7The result2 tibble contains predictions for each scenario.

- Which has a bigger impact on these predictions and prediction intervals? A one category change in

smoke100or a one hour change inphyshealth?

12.8 Centering the model

Our model mod4 has four predictors (the constant, physhealth, smoke100 and their interaction) but just two inputs (smoke100 and physhealth.) If we center the quantitative input physhealth before building the model, we get a more interpretable interaction term.

smart_cle1_sh_c <- smart_cle1_sh |>

mutate(physhealth_c = physhealth - mean(physhealth))

mod4_c <- lm(bmi ~ smoke100 * physhealth_c, data = smart_cle1_sh_c)

summary(mod4_c)

Call:

lm(formula = bmi ~ smoke100 * physhealth_c, data = smart_cle1_sh_c)

Residuals:

Min 1Q Median 3Q Max

-18.800 -3.785 -0.596 2.615 38.460

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 27.97435 0.25913 107.956 < 2e-16 ***

smoke100 0.76083 0.37289 2.040 0.041544 *

physhealth_c 0.10617 0.03026 3.509 0.000467 ***

smoke100:physhealth_c 0.02631 0.04084 0.644 0.519497

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 6.259 on 1129 degrees of freedom

Multiple R-squared: 0.03544, Adjusted R-squared: 0.03288

F-statistic: 13.83 on 3 and 1129 DF, p-value: 7.37e-09What has changed as compared to the original mod4?

- Our original model was

bmi= 27.5 + 0.57smoke100+ 0.11physhealth- 0.03smoke100xphyshealth - Our new model is

bmi= 28.0 + 0.73smoke100+ 0.11 centeredphyshealth+ 0.03smoke100x centeredphyshealth.

So our new model on centered data is:

- 28.0 + 0.11 centered

physhealth_cfor non-smokers, and - (28.0 + 0.73) + (0.11 - 0.03) centered

physhealth_c, or 28.73 + 0.08 centeredphyshealth_cfor smokers.

In our new (centered physhealth_c) model,

- the main effect of

smoke100now corresponds to a predictive difference (smoker - non-smoker) inbmiwithphyshealthat its mean value, 4.68 days, - the intercept term is now the predicted

bmifor a non-smoker with an averagephyshealth, and - the product term corresponds to the change in the slope of centered

physhealth_conbmifor a smoker rather than a non-smoker, while - the residual standard deviation and the R-squared values remain unchanged from the model before centering.

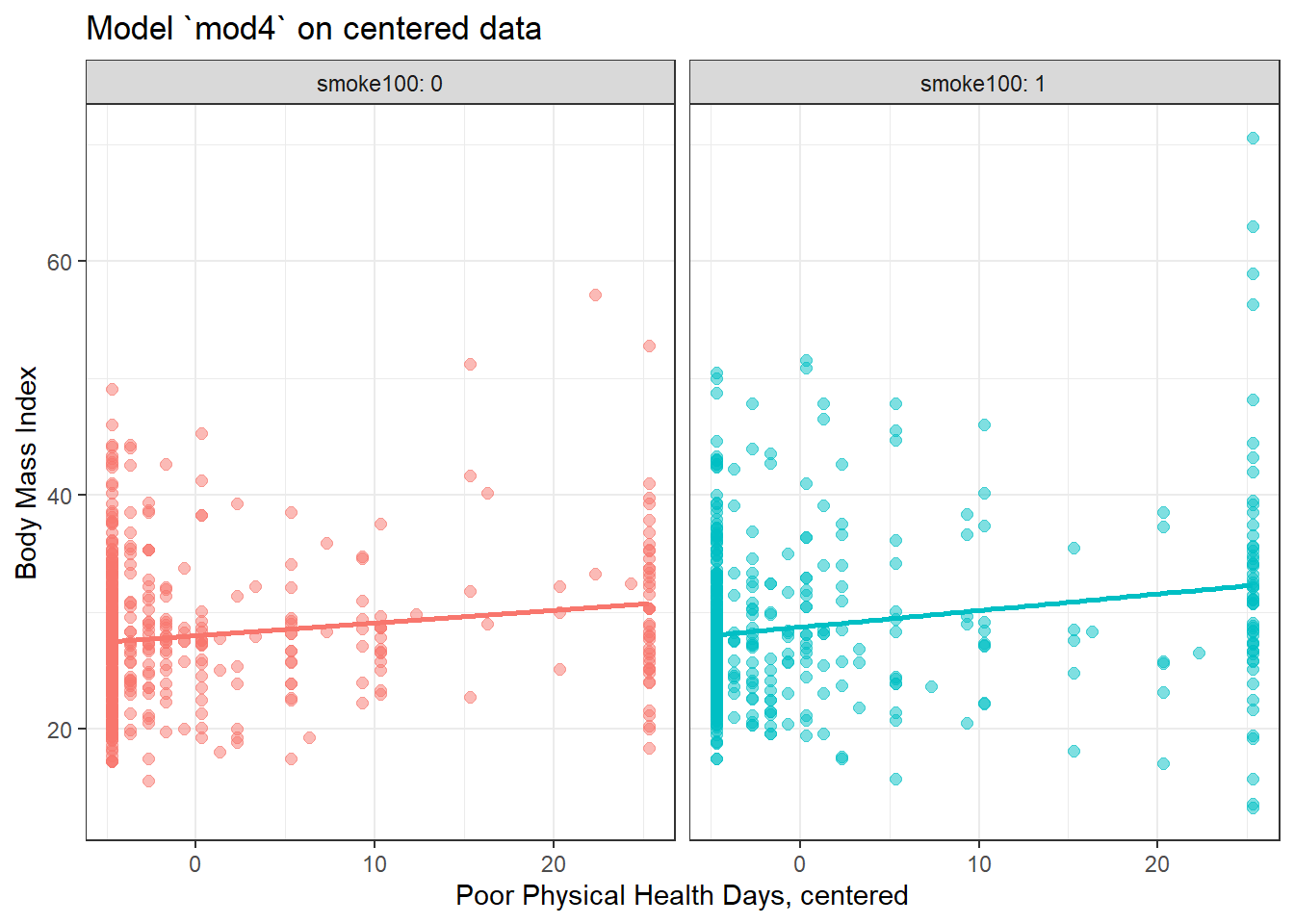

12.8.1 Plot of Model 4 on Centered physhealth: mod4_c

ggplot(smart_cle1_sh_c, aes(x = physhealth_c, y = bmi, group = smoke100, col = factor(smoke100))) +

geom_point(alpha = 0.5, size = 2) +

geom_smooth(method = "lm", se = FALSE, formula = y ~ x) +

guides(color = "none") +

labs(x = "Poor Physical Health Days, centered", y = "Body Mass Index",

title = "Model `mod4` on centered data") +

facet_wrap(~ smoke100, labeller = label_both)

12.9 Rescaling an input by subtracting the mean and dividing by 2 standard deviations

Centering helped us interpret the main effects in the regression, but it still leaves a scaling problem.

- The

smoke100coefficient estimate is much larger than that ofphyshealth, but this is misleading, considering that we are comparing the complete change in one variable (smoking or not) to a 1-day change inphyshealth. - Gelman and Hill (2007) recommend all continuous predictors be scaled by dividing by 2 standard deviations, so that:

- a 1-unit change in the rescaled predictor corresponds to a change from 1 standard deviation below the mean, to 1 standard deviation above.

- an unscaled binary (1/0) predictor with 50% probability of occurring will be exactly comparable to a rescaled continuous predictor done in this way.

smart_cle1_sh_rescale <- smart_cle1_sh |>

mutate(physhealth_z = (physhealth - mean(physhealth))/(2*sd(physhealth)))12.9.1 Refitting model mod4 to the rescaled data

mod4_z <- lm(bmi ~ smoke100 * physhealth_z, data = smart_cle1_sh_rescale)

summary(mod4_z)

Call:

lm(formula = bmi ~ smoke100 * physhealth_z, data = smart_cle1_sh_rescale)

Residuals:

Min 1Q Median 3Q Max

-18.800 -3.785 -0.596 2.615 38.460

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 27.9743 0.2591 107.956 < 2e-16 ***

smoke100 0.7608 0.3729 2.040 0.041544 *

physhealth_z 1.9477 0.5550 3.509 0.000467 ***

smoke100:physhealth_z 0.4827 0.7492 0.644 0.519497

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 6.259 on 1129 degrees of freedom

Multiple R-squared: 0.03544, Adjusted R-squared: 0.03288

F-statistic: 13.83 on 3 and 1129 DF, p-value: 7.37e-0912.9.2 Interpreting the model on rescaled data

What has changed as compared to the original mod4?

- Our original model was

bmi= 27.5 + 0.57smoke100+ 0.11physhealth+ 0.03smoke100xphyshealth - Our model on centered

physhealthwasbmi= 28.0 + 0.73smoke100+ 0.11 centeredphyshealth+ 0.03smoke100x centeredphyshealth. - Our new model on rescaled

physhealthisbmi= 28.0 + 0.73smoke100+ 1.98 rescaledphyshealth+ 0.61smoke100x rescaledphyshealth

So our rescaled model is:

- 28.0 + 1.98 rescaled

physhealth_zfor non-smokers, and - (28.0 + 0.73) + (1.98 + 0.61) rescaled

physhealth_z, or 28.73 + 2.59 rescaledphyshealth_zfor smokers.

In this new rescaled (physhealth_z) model, then,

- the main effect of

smoke100, 0.73, still corresponds to a predictive difference (smoker - non-smoker) inbmiwithphyshealthat its mean value, 4.68 days, - the intercept term is still the predicted

bmifor a non-smoker with an averagephyshealthcount, and - the residual standard deviation and the R-squared values remain unchanged,

as before, but now we also have that:

- the coefficient of

physhealth_zindicates the predictive difference inbmiassociated with a change inphyshealthof 2 standard deviations (from one standard deviation below the mean of 4.68 to one standard deviation above 4.68.)- Since the standard deviation of

physhealthis 9.12 (see below), this covers a massive range of potential values ofphyshealthfrom 0 all the way up to 4.68 + 2(9.12) = 22.92 days.

- Since the standard deviation of

favstats(~ physhealth, data = smart_cle1_sh) min Q1 median Q3 max mean sd n missing

0 0 0 3 30 4.605472 9.172505 1133 0- the coefficient of the product term (0.61) corresponds to the change in the coefficient of

physhealth_zfor smokers as compared to non-smokers.

12.9.3 Plot of model on rescaled data

ggplot(smart_cle1_sh_rescale, aes(x = physhealth_z, y = bmi,

group = smoke100, col = factor(smoke100))) +

geom_point(alpha = 0.5) +

geom_smooth(method = "lm", se = FALSE, size = 1.5) +

scale_color_discrete(name = "Is subject a smoker?") +

labs(x = "Poor Physical Health Days, standardized (2 sd)", y = "Body Mass Index",

title = "Model `mod4_z` on rescaled data") Warning: Using `size` aesthetic for lines was deprecated in ggplot2 3.4.0.

ℹ Please use `linewidth` instead.`geom_smooth()` using formula = 'y ~ x'

There’s very little difference here.

12.10 mod5: What if we add more variables?

We can boost our \(R^2\) a bit, to nearly 5%, by adding in two new variables, related to whether or not the subject (in the past 30 days) used the internet, and the average number of alcoholic drinks per week consumed by ths subject.

mod5 <- lm(bmi ~ smoke100 + female +physhealth + internet30 + drinks_wk,

data = smart_cle1_sh)

summary(mod5)

Call:

lm(formula = bmi ~ smoke100 + female + physhealth + internet30 +

drinks_wk, data = smart_cle1_sh)

Residuals:

Min 1Q Median 3Q Max

-18.271 -3.841 -0.723 2.654 38.187

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 27.59416 0.55756 49.491 < 2e-16 ***

smoke100 0.85750 0.37612 2.280 0.02280 *

female -0.44346 0.38471 -1.153 0.24928

physhealth 0.11875 0.02060 5.765 1.05e-08 ***

internet30 0.38241 0.48782 0.784 0.43326

drinks_wk -0.10307 0.03352 -3.074 0.00216 **

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 6.238 on 1127 degrees of freedom

Multiple R-squared: 0.04373, Adjusted R-squared: 0.03949

F-statistic: 10.31 on 5 and 1127 DF, p-value: 1.114e-09- Here’s the ANOVA for this model. What can we study with this?

anova(mod5)Analysis of Variance Table

Response: bmi

Df Sum Sq Mean Sq F value Pr(>F)

smoke100 1 229 228.97 5.8842 0.01543 *

female 1 6 5.92 0.1521 0.69663

physhealth 1 1394 1393.62 35.8147 2.912e-09 ***

internet30 1 9 9.26 0.2379 0.62586

drinks_wk 1 368 367.80 9.4521 0.00216 **

Residuals 1127 43854 38.91

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1- Consider the revised output below. Now what can we study?

anova(lm(bmi ~ smoke100 + internet30 + drinks_wk + female + physhealth,

data = smart_cle1_sh))Analysis of Variance Table

Response: bmi

Df Sum Sq Mean Sq F value Pr(>F)

smoke100 1 229 228.97 5.8842 0.0154334 *

internet30 1 8 8.38 0.2155 0.6426003

drinks_wk 1 440 439.87 11.3042 0.0007993 ***

female 1 35 34.92 0.8973 0.3437094

physhealth 1 1293 1293.42 33.2397 1.051e-08 ***

Residuals 1127 43854 38.91

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1- What does the output below let us conclude?

anova(lm(bmi ~ smoke100 + internet30 + drinks_wk + female + physhealth,

data = smart_cle1_sh),

lm(bmi ~ smoke100 + female + drinks_wk,

data = smart_cle1_sh))Analysis of Variance Table

Model 1: bmi ~ smoke100 + internet30 + drinks_wk + female + physhealth

Model 2: bmi ~ smoke100 + female + drinks_wk

Res.Df RSS Df Sum of Sq F Pr(>F)

1 1127 43854

2 1129 45148 -2 -1293.8 16.625 7.661e-08 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1- What does it mean for the models to be “nested”?

12.11 mod6: Would adding self-reported health help?

And we can do even a bit better than that by adding in a multi-categorical measure: self-reported general health.

mod6 <- lm(bmi ~ female + smoke100 + physhealth + internet30 + drinks_wk + genhealth,

data = smart_cle1_sh)

summary(mod6)

Call:

lm(formula = bmi ~ female + smoke100 + physhealth + internet30 +

drinks_wk + genhealth, data = smart_cle1_sh)

Residuals:

Min 1Q Median 3Q Max

-19.205 -3.637 -0.750 2.667 36.839

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 25.21949 0.71005 35.518 < 2e-16 ***

female -0.32079 0.37604 -0.853 0.3938

smoke100 0.48496 0.37062 1.309 0.1910

physhealth 0.03710 0.02475 1.499 0.1341

internet30 0.91322 0.48259 1.892 0.0587 .

drinks_wk -0.07751 0.03293 -2.354 0.0187 *

genhealth2_VeryGood 1.21718 0.56839 2.141 0.0325 *

genhealth3_Good 3.23814 0.58002 5.583 2.97e-08 ***

genhealth4_Fair 4.19627 0.73221 5.731 1.28e-08 ***

genhealth5_Poor 6.00832 1.08770 5.524 4.12e-08 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 6.091 on 1123 degrees of freedom

Multiple R-squared: 0.09148, Adjusted R-squared: 0.0842

F-statistic: 12.56 on 9 and 1123 DF, p-value: < 2.2e-16If Harry and Marty have the same values of

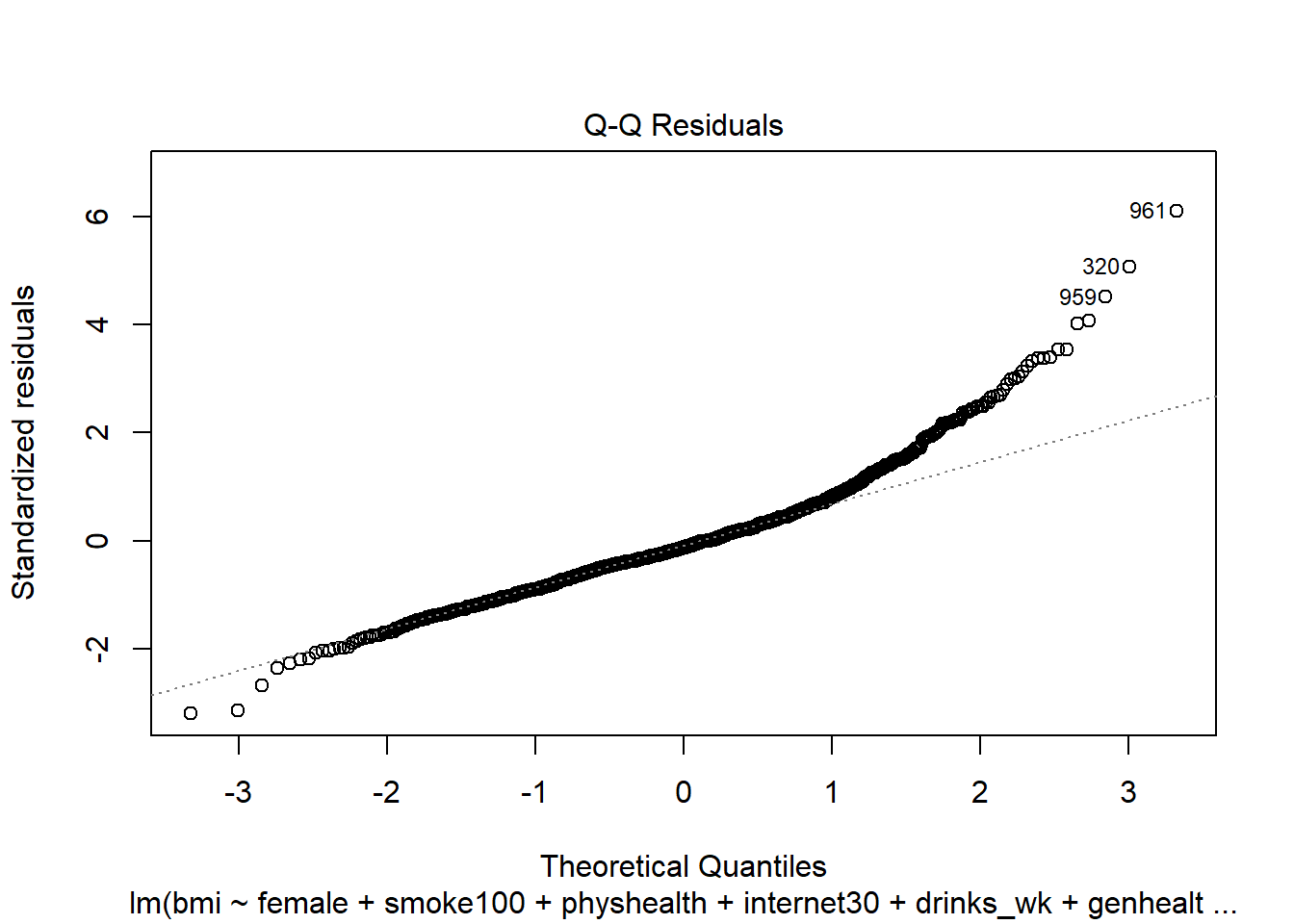

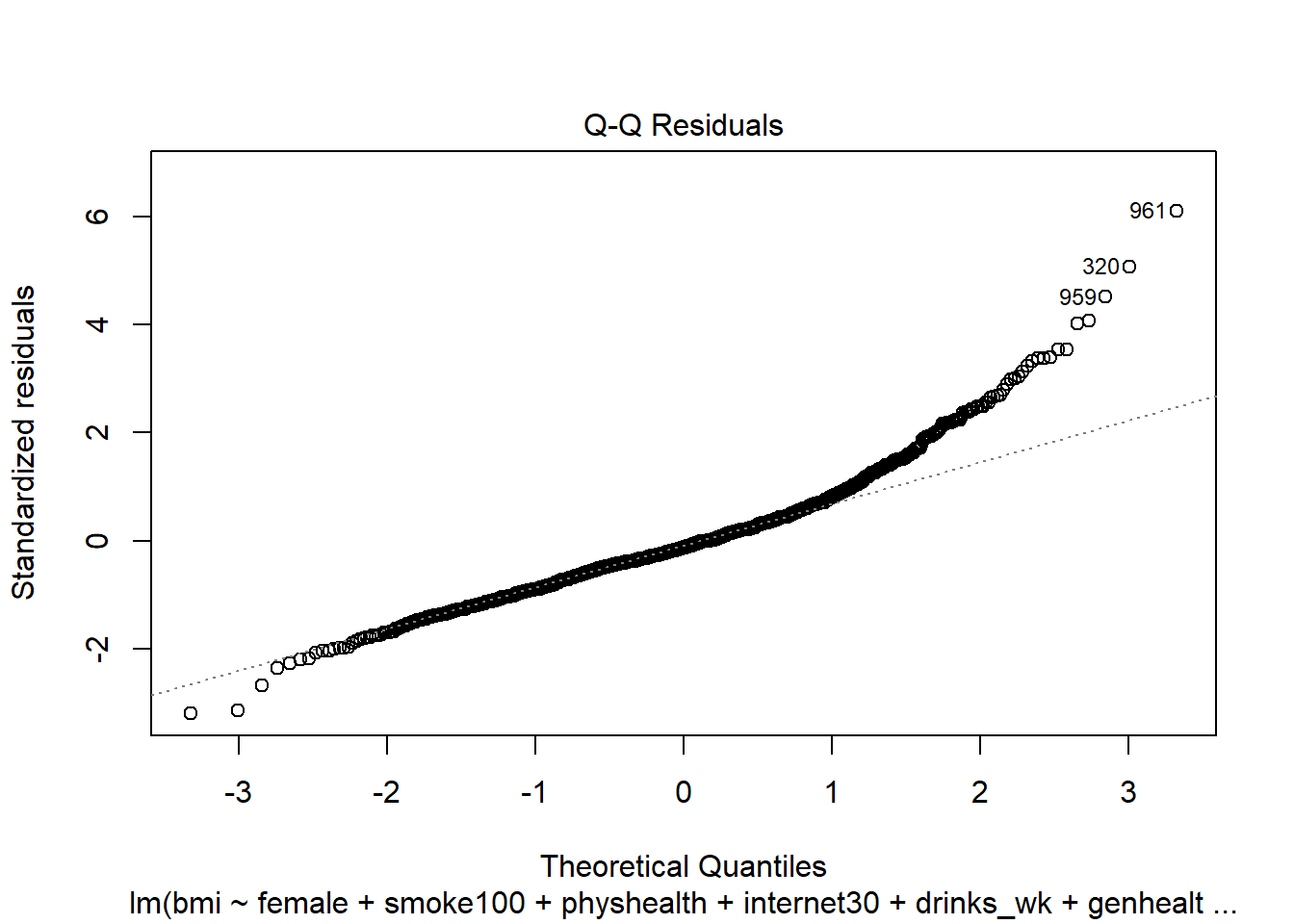

female,smoke100,physhealth,internet30anddrinks_wk, but Harry rates his health as Good, and Marty rates his as Fair, then what is the difference in the predictions? Who is predicted to have a larger BMI, and by how much?What does this normal probability plot of the residuals suggest?

plot(mod6, which = 2)

12.12 Key Regression Assumptions for Building Effective Prediction Models

- Validity - the data you are analyzing should map to the research question you are trying to answer.

- The outcome should accurately reflect the phenomenon of interest.

- The model should include all relevant predictors. (It can be difficult to decide which predictors are necessary, and what to do with predictors that have large standard errors.)

- The model should generalize to all of the cases to which it will be applied.

- Can the available data answer our question reliably?

- Additivity and linearity - most important assumption of a regression model is that its deterministic component is a linear function of the predictors. We often think about transformations in this setting.

- Independence of errors - errors from the prediction line are independent of each other

- Equal variance of errors - if this is violated, we can more efficiently estimate parameters using weighted least squares approaches, where each point is weighted inversely proportional to its variance, but this doesn’t affect the coefficients much, if at all.

- Normality of errors - not generally important for estimating the regression line

12.12.1 Checking Assumptions in model mod6

- How does the assumption of linearity behind this model look?

plot(mod6, which = 1)

We see no strong signs of serious non-linearity here. There’s no obvious curve in the plot, for example. We may have a problem with increasing variance as we move to the right.

- What can we conclude from the plot below?

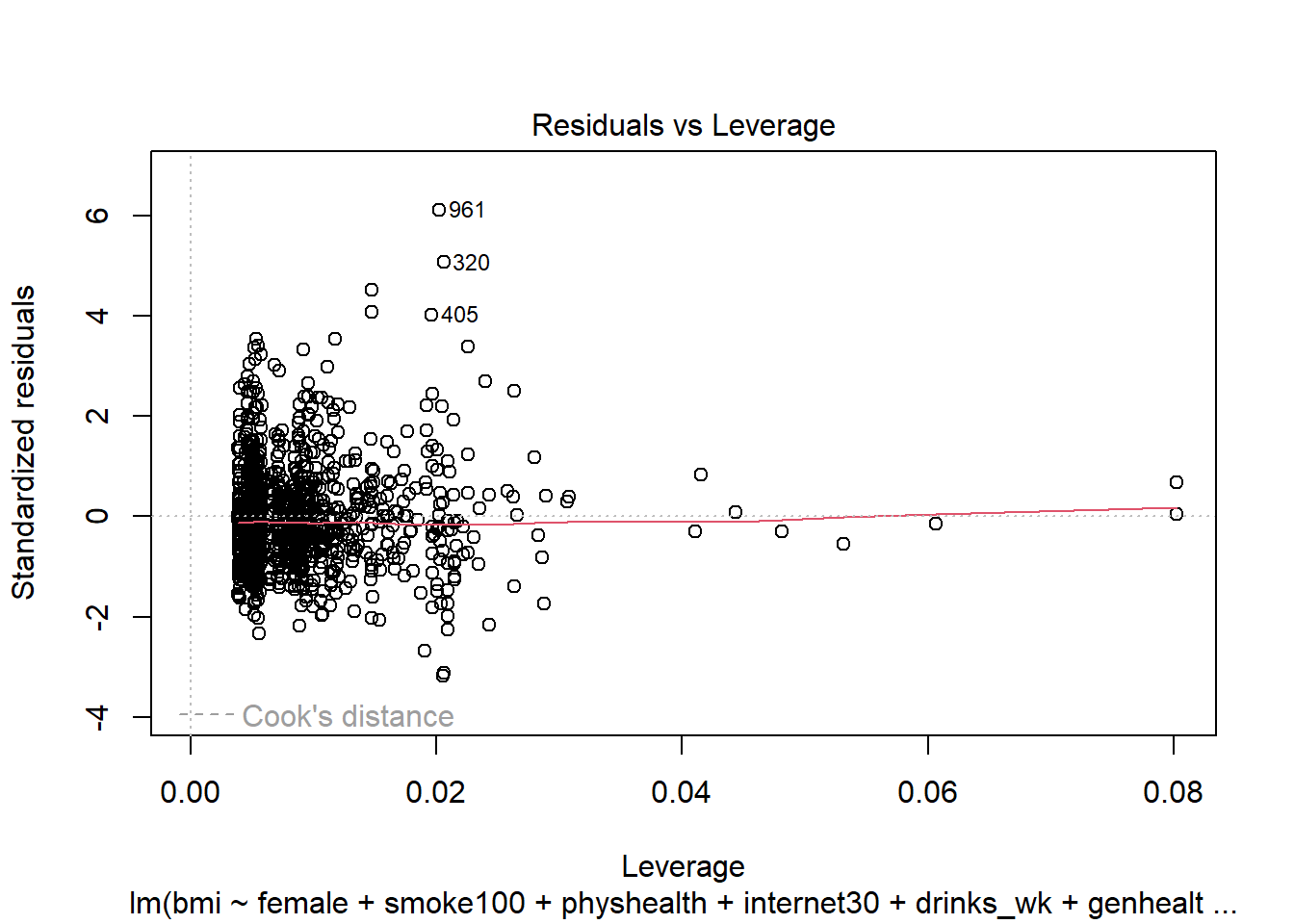

plot(mod6, which = 5)

This plot can help us identify points with large standardized residuals, large leverage values, and large influence on the model (as indicated by large values of Cook’s distance.) In this case, I see no signs of any points used in the model with especially large influence, although there are some poorly fitted points (with especially large standardized residuals.)

We might want to identify the point listed here as 961, which appears to have an enormous standardized residual. To do so, we can use the slice function from dplyr.

smart_cle1_sh |> slice(961) |> select(SEQNO)# A tibble: 1 × 1

SEQNO

<dbl>

1 2017000961Now we know exactly which subject we’re talking about.

- What other residual plots are available with

plotand how do we interpret them?

plot(mod6, which = 2)

This plot is simply a Normal Q-Q plot of the standardized residuals from our model. We’re looking here for serious problems with the assumption of Normality.

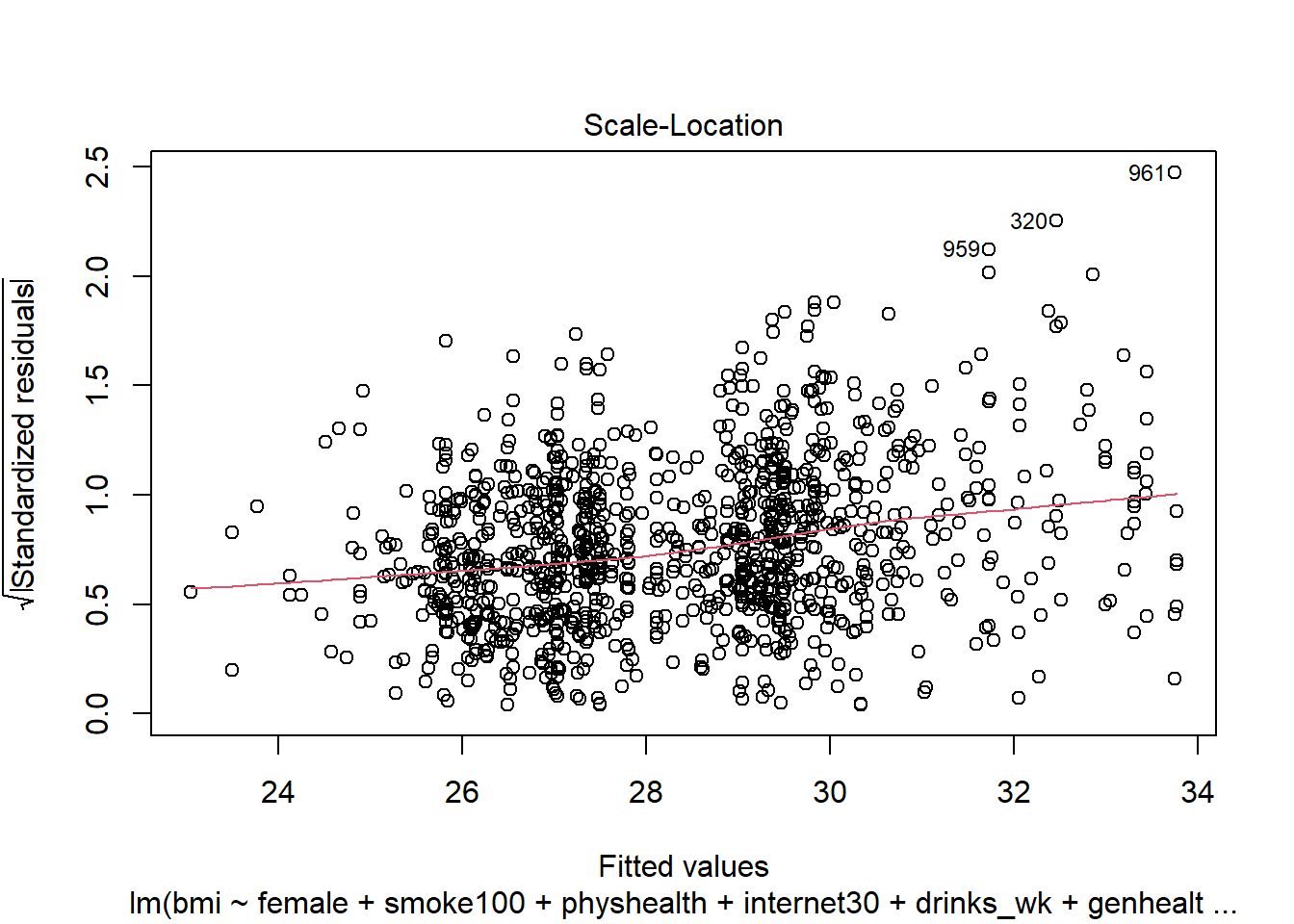

plot(mod6, which = 3)

This is a scale-location plot, designed to help us see non-constant variance in the residuals as we move across the fitted values as a linear trend, rather than as a fan shape, by plotting the square root of the residuals on the vertical axis.

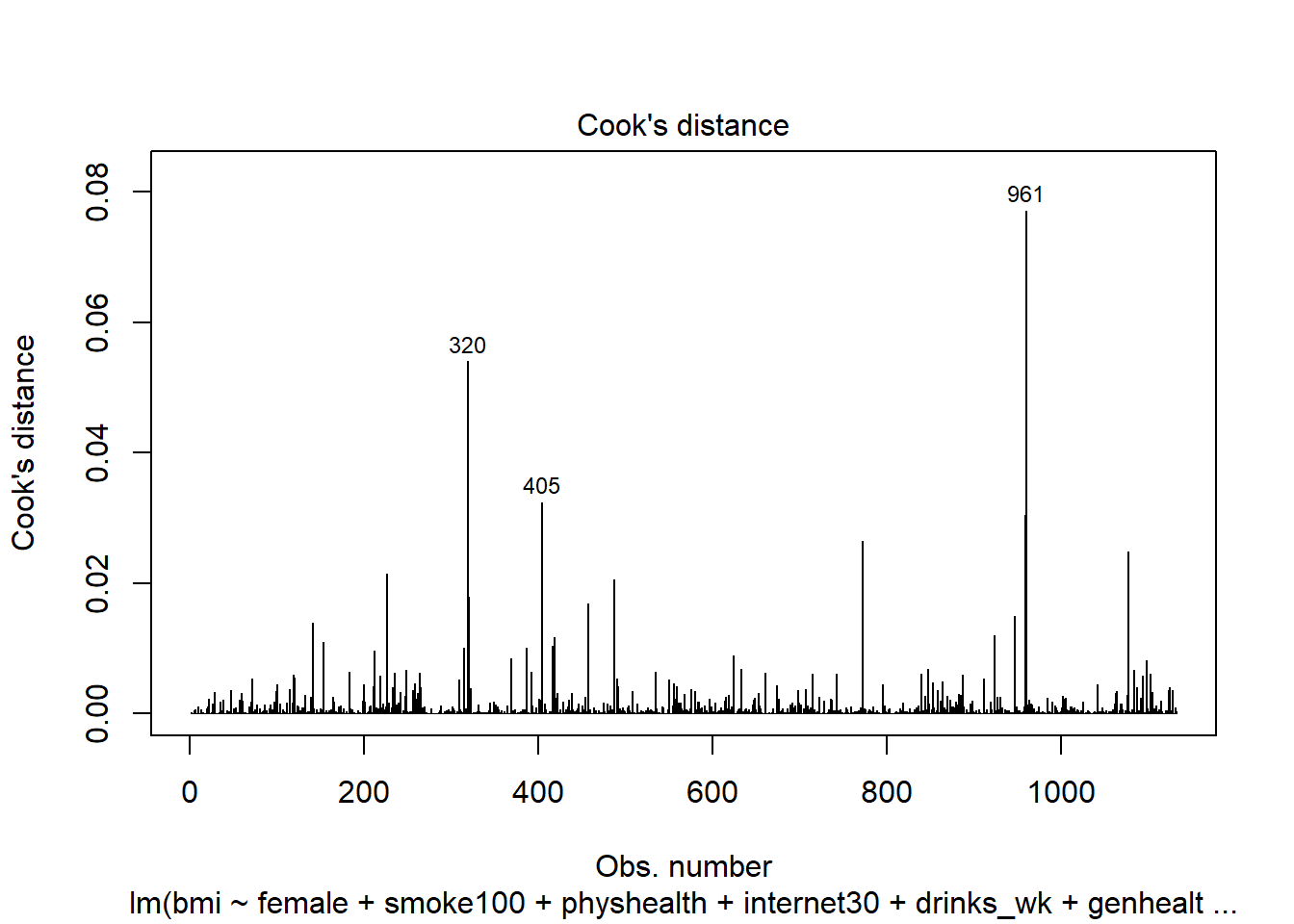

plot(mod6, which = 4)

Finally, this is an index plot of the Cook’s distance values, allowing us to identify points that are particularly large. Remember that a value of 0.5 (or perhaps even 1.0) is a reasonable boundary for a substantially influential point.

I’ll now refer to those who have smoked at least 100 cigarettes in their life as smokers, and those who have not as non-smokers to save some space.↩︎