knitr::opts_chunk$set(comment = NA)

library(janitor)

library(broom)

library(Hmisc)

library(mosaic)

library(knitr)

library(tidyverse)

theme_set(theme_bw())

8 Summarizing smart_cle1

In this chapter, we’ll work with the two data files we built in Chapter 7.

8.1 R Setup Used Here

8.1.1 Data Load

smart_cle1_sh <- read_rds("data/smart_cle1_sh.Rds")

smart_cle1_cc <- read_rds("data/smart_cle1_cc.Rds")

8.2 What’s in these data?

Those files describe the following variables for BRFSS 2017 respondents who live in the Cleveland-Elyria, OH, Metropolitan Statistical Area. The _sh file contains single imputations, along with a shadow set of variables whose names end in _NA, while the _cc file contains only the complete cases.

| Variable | Description |
|---|---|
| SEQNO | respondent identification number (all begin with 2017) |
| physhealth | Now thinking about your physical health, which includes physical illness and injury, for how many days during the past 30 days was your physical health not good? |
| genhealth | Would you say that in general, your health is … (five categories: Excellent, Very Good, Good, Fair or Poor) |
| bmi | Body mass index, in kg/m² |
| age_imp | Age, imputed, in years |
| female | Sex, 1 = female, 0 = male |
| race_eth | Race and Ethnicity, in five categories |
| internet30 | Have you used the internet in the past 30 days? (1 = yes, 0 = no) |
| smoke100 | Have you smoked at least 100 cigarettes in your life? (1 = yes, 0 = no) |
| activity | Physical activity (Highly Active, Active, Insufficiently Active, Inactive) |
| drinks_wk | On average, how many drinks of alcohol do you consume in a week? |
| veg_day | How many servings of vegetables do you consume per day, on average? |

8.3 General Approaches to Obtaining Numeric Summaries

8.3.1 summary for a data frame

Of course, we can use the usual summary to get some basic information about the data.

summary(smart_cle1_cc)

SEQNO physhealth genhealth bmi

Min. :2.017e+09 Min. : 0.000 1_Excellent:134 Min. :13.30

1st Qu.:2.017e+09 1st Qu.: 0.000 2_VeryGood :314 1st Qu.:24.23

Median :2.017e+09 Median : 0.000 3_Good :284 Median :27.48

Mean :2.017e+09 Mean : 4.361 4_Fair :114 Mean :28.51

3rd Qu.:2.017e+09 3rd Qu.: 3.000 5_Poor : 46 3rd Qu.:31.82

Max. :2.017e+09 Max. :30.000 Max. :63.00

age_imp female race_eth

Min. :18.00 Min. :0.0000 White non-Hispanic :661

1st Qu.:43.00 1st Qu.:0.0000 Black non-Hispanic :168

Median :58.00 Median :1.0000 Other race non-Hispanic : 20

Mean :56.46 Mean :0.5807 Multiracial non-Hispanic: 17

3rd Qu.:69.00 3rd Qu.:1.0000 Hispanic : 26

Max. :95.00 Max. :1.0000

internet30 smoke100 activity drinks_wk

Min. :0.000 Min. :0.0000 Highly_Active :308 Min. : 0.000

1st Qu.:1.000 1st Qu.:0.0000 Active :155 1st Qu.: 0.000

Median :1.000 Median :0.0000 Insufficiently_Active:167 Median : 0.470

Mean :0.833 Mean :0.4787 Inactive :262 Mean : 2.727

3rd Qu.:1.000 3rd Qu.:1.0000 3rd Qu.: 2.850

Max. :1.000 Max. :1.0000 Max. :56.000

veg_day

Min. :0.000

1st Qu.:1.280

Median :1.730

Mean :1.919

3rd Qu.:2.422

Max. :7.300

8.3.2 The inspect function from the mosaic package

inspect(smart_cle1_cc)

categorical variables:

name class levels n missing

1 genhealth factor 5 892 0

2 race_eth factor 5 892 0

3 activity factor 4 892 0

distribution

1 2_VeryGood (35.2%), 3_Good (31.8%) ...

2 White non-Hispanic (74.1%) ...

3 Highly_Active (34.5%) ...

quantitative variables:

name class min Q1 median Q3

1 SEQNO numeric 2.017e+09 2.0170e+09 2.017001e+09 2.017001e+09

2 physhealth numeric 0.000e+00 0.0000e+00 0.000000e+00 3.000000e+00

3 bmi numeric 1.330e+01 2.4235e+01 2.747500e+01 3.181500e+01

4 age_imp numeric 1.800e+01 4.3000e+01 5.800000e+01 6.900000e+01

5 female numeric 0.000e+00 0.0000e+00 1.000000e+00 1.000000e+00

6 internet30 numeric 0.000e+00 1.0000e+00 1.000000e+00 1.000000e+00

7 smoke100 numeric 0.000e+00 0.0000e+00 0.000000e+00 1.000000e+00

8 drinks_wk numeric 0.000e+00 0.0000e+00 4.700000e-01 2.850000e+00

9 veg_day numeric 0.000e+00 1.2800e+00 1.730000e+00 2.422500e+00

max mean sd n missing

1 2017001133.0 2.017001e+09 326.8928344 892 0

2 30.0 4.360987e+00 8.8153373 892 0

3 63.0 2.850905e+01 6.5057975 892 0

4 95.0 5.645516e+01 18.0333027 892 0

5 1.0 5.807175e-01 0.4937185 892 0

6 1.0 8.329596e-01 0.3732212 892 0

7 1.0 4.786996e-01 0.4998263 892 0

8 56.0 2.726525e+00 5.7172011 892 0

9 7.3 1.918643e+00 1.0262415 892 0

8.3.3 The describe function in Hmisc

This provides some useful additional summaries, including a list of the lowest and highest values (which is very helpful when checking data.)

smart_cle1_cc |>

select(bmi, genhealth, female) |>

describe()

select(smart_cle1_cc, bmi, genhealth, female)

3 Variables 892 Observations

--------------------------------------------------------------------------------

bmi

n missing distinct Info Mean pMedian Gmd .05

892 0 481 1 28.51 27.88 6.952 20.09

.10 .25 .50 .75 .90 .95

21.40 24.23 27.48 31.81 36.78 41.09

lowest : 13.3 13.64 15.71 15.75 17.07, highest: 52.74 56.31 57.12 58.98 63

--------------------------------------------------------------------------------

genhealth

n missing distinct

892 0 5

Value 1_Excellent 2_VeryGood 3_Good 4_Fair 5_Poor

Frequency 134 314 284 114 46

Proportion 0.150 0.352 0.318 0.128 0.052

--------------------------------------------------------------------------------

female

n missing distinct Info Sum Mean

892 0 2 0.73 518 0.5807

--------------------------------------------------------------------------------

- The Info measure is used for quantitative and binary variables. It is a relative information measure that increases towards 1 for variables with no ties, and is smaller for variables with many ties.

- The Gmd is the Gini mean difference. It is a measure of spread (or dispersion), where larger values indicate greater spread in the distribution, like the standard deviation or the interquartile range. It is defined as the mean absolute difference between any pairs of observations.

See the Help file for describe in the Hmisc package for more details on these measures, and on the settings for describe.
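To make the Gini mean difference concrete, here is a minimal sketch in base R that computes it by hand for a small made-up vector. The values in x are hypothetical and are not drawn from smart_cle1; the point is only to show "the mean absolute difference between pairs" as a calculation.

```r
# Toy data (hypothetical values, not from smart_cle1)
x <- c(1, 2, 4, 7)

# dist() returns the absolute difference for each distinct pair of values
pair_diffs <- as.vector(dist(x))

# Gini mean difference: the mean of those pairwise absolute differences
gmd <- mean(pair_diffs)
gmd
```

With four values there are six distinct pairs, so the result is the average of six absolute differences.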

8.4 Counting as exploratory data analysis

Counting and/or tabulating things can be amazingly useful. Suppose we want to understand the genhealth values, after our single imputation.

smart_cle1_sh |> count(genhealth) |>

mutate(percent = 100*n / sum(n))

# A tibble: 5 × 3

genhealth n percent

<fct> <int> <dbl>

1 1_Excellent 164 14.5

2 2_VeryGood 386 34.1

3 3_Good 365 32.2

4 4_Fair 158 13.9

5 5_Poor 60 5.30

We might use tabyl to do this job…

smart_cle1_sh |>

tabyl(genhealth) |>

adorn_pct_formatting(digits = 1) |>

kable()

| genhealth | n | percent |
|---|---|---|
| 1_Excellent | 164 | 14.5% |
| 2_VeryGood | 386 | 34.1% |
| 3_Good | 365 | 32.2% |
| 4_Fair | 158 | 13.9% |
| 5_Poor | 60 | 5.3% |

8.4.1 Did genhealth vary by smoking status?

smart_cle1_sh |>

count(genhealth, smoke100) |>

mutate(percent = 100*n / sum(n))

# A tibble: 10 × 4

genhealth smoke100 n percent

<fct> <dbl> <int> <dbl>

1 1_Excellent 0 102 9.00

2 1_Excellent 1 62 5.47

3 2_VeryGood 0 217 19.2

4 2_VeryGood 1 169 14.9

5 3_Good 0 178 15.7

6 3_Good 1 187 16.5

7 4_Fair 0 65 5.74

8 4_Fair 1 93 8.21

9 5_Poor 0 24 2.12

10 5_Poor 1 36 3.18

Suppose we want to find the percentage within each smoking status group. Here’s one approach…

smart_cle1_sh |>

count(smoke100, genhealth) |>

group_by(smoke100) |>

mutate(prob = 100*n / sum(n))

# A tibble: 10 × 4

# Groups: smoke100 [2]

smoke100 genhealth n prob

<dbl> <fct> <int> <dbl>

1 0 1_Excellent 102 17.4

2 0 2_VeryGood 217 37.0

3 0 3_Good 178 30.4

4 0 4_Fair 65 11.1

5 0 5_Poor 24 4.10

6 1 1_Excellent 62 11.3

7 1 2_VeryGood 169 30.9

8 1 3_Good 187 34.2

9 1 4_Fair 93 17.0

10 1 5_Poor 36 6.58

And here’s another …

smart_cle1_sh |>

tabyl(smoke100, genhealth) |>

adorn_totals(where = c("row", "col")) |>

adorn_percentages(denominator = "row") |>

adorn_pct_formatting(digits = 1) |>

adorn_ns(position = "front") smoke100 1_Excellent 2_VeryGood 3_Good 4_Fair 5_Poor

0 102 (17.4%) 217 (37.0%) 178 (30.4%) 65 (11.1%) 24 (4.1%)

1 62 (11.3%) 169 (30.9%) 187 (34.2%) 93 (17.0%) 36 (6.6%)

Total 164 (14.5%) 386 (34.1%) 365 (32.2%) 158 (13.9%) 60 (5.3%)

Total

586 (100.0%)

547 (100.0%)

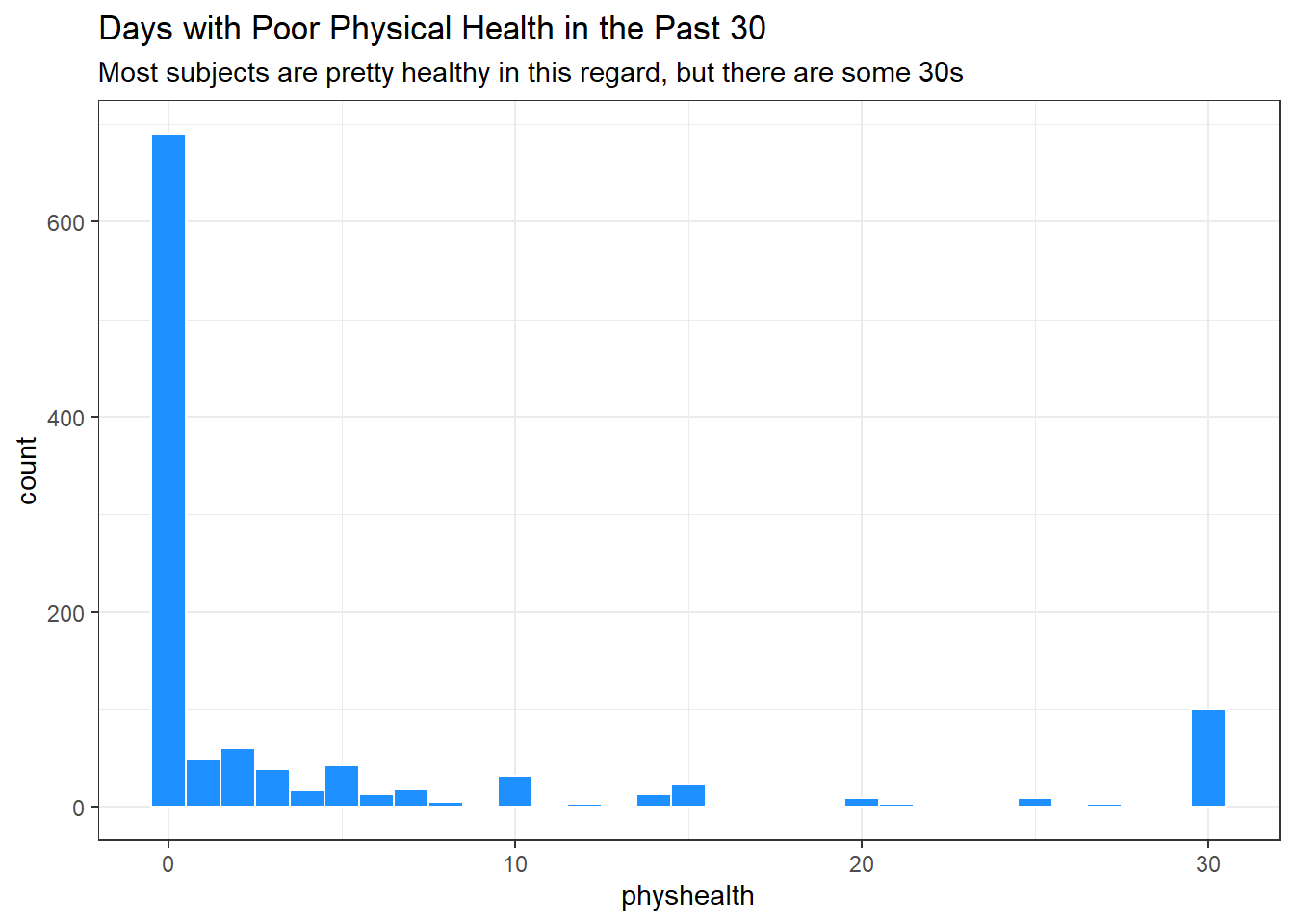

1,133 (100.0%)8.4.2 What’s the distribution of physhealth?

We can count quantitative variables with discrete sets of possible values, like physhealth, which is captured as an integer (that must fall between 0 and 30.)

smart_cle1_sh |> count(physhealth)

# A tibble: 21 × 2

physhealth n

<dbl> <int>

1 0 710

2 1 49

3 2 63

4 3 38

5 4 16

6 5 30

7 6 5

8 7 18

9 8 5

10 10 32

# ℹ 11 more rows

Of course, a natural summary of a quantitative variable like this would be graphical.

ggplot(smart_cle1_sh, aes(physhealth)) +

geom_histogram(binwidth = 1,

fill = "dodgerblue", col = "white") +

labs(title = "Days with Poor Physical Health in the Past 30",

subtitle = "Most subjects are pretty healthy in this regard, but there are some 30s")

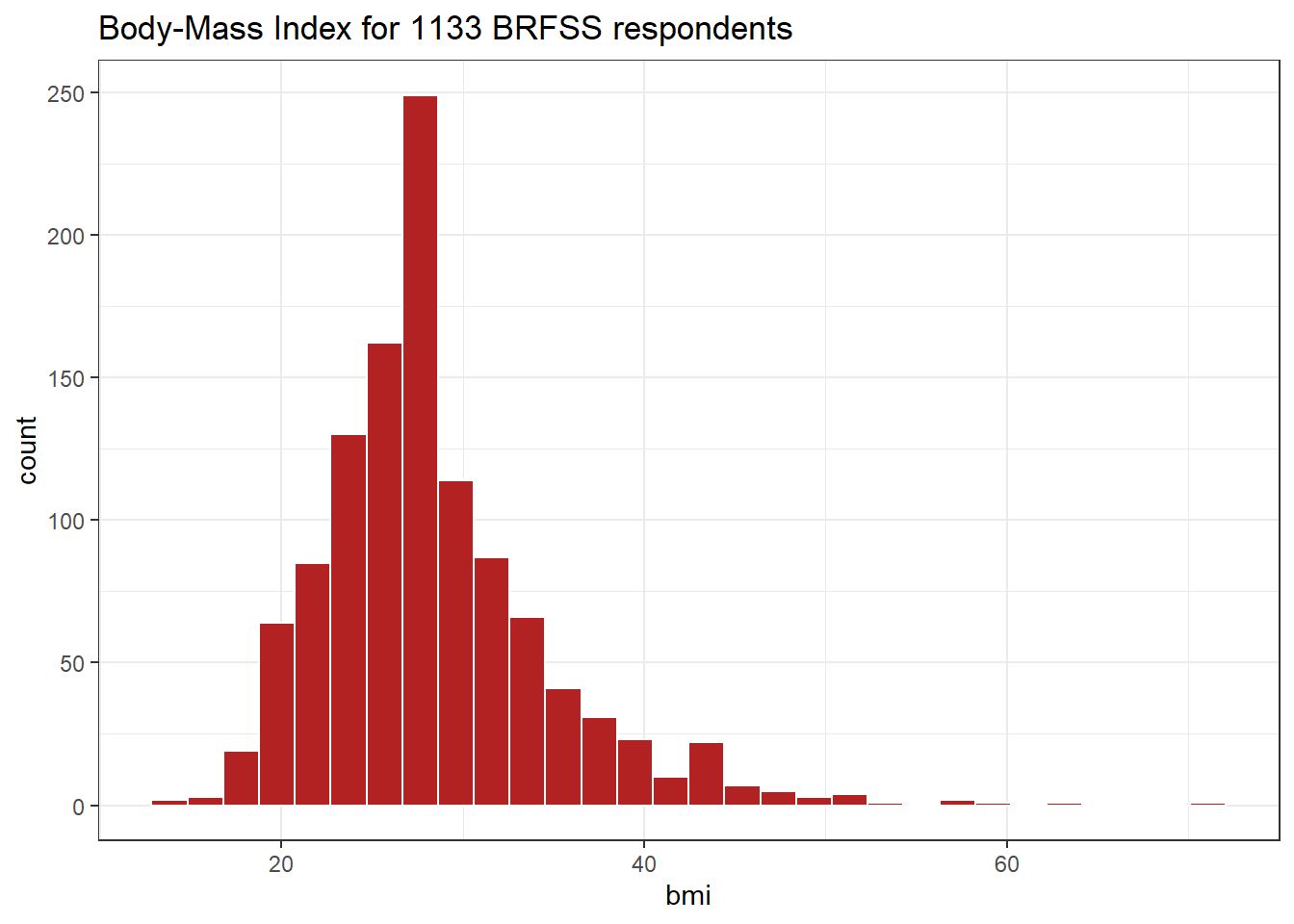

8.4.3 What’s the distribution of bmi?

bmi is the body-mass index, an indicator of size (thickness, really.)

ggplot(smart_cle1_sh, aes(bmi)) +

geom_histogram(bins = 30,

fill = "firebrick", col = "white") +

labs(title = paste0("Body-Mass Index for ",

nrow(smart_cle1_sh),

" BRFSS respondents"))

8.4.4 How many of the respondents have a BMI below 30?

smart_cle1_sh |> count(bmi < 30) |>

mutate(proportion = n / sum(n))

# A tibble: 2 × 3

`bmi < 30` n proportion

<lgl> <int> <dbl>

1 FALSE 330 0.291

2 TRUE 803 0.709

8.4.5 How many of the respondents with a BMI < 30 are highly active?

smart_cle1_sh |>

filter(bmi < 30) |>

tabyl(activity) |>

adorn_pct_formatting()

activity n percent

Highly_Active 343 42.7%

Active 133 16.6%

Insufficiently_Active 129 16.1%

Inactive 198 24.7%

8.4.6 Is obesity associated with smoking history?

smart_cle1_sh |> count(smoke100, bmi < 30) |>

group_by(smoke100) |>

mutate(percent = 100*n/sum(n))

# A tibble: 4 × 4

# Groups: smoke100 [2]

smoke100 `bmi < 30` n percent

<dbl> <lgl> <int> <dbl>

1 0 FALSE 159 27.1

2 0 TRUE 427 72.9

3 1 FALSE 171 31.3

4 1 TRUE 376 68.7

8.4.7 Comparing drinks_wk summaries by obesity status

Can we compare the drinks_wk means, medians and 75th percentiles for respondents whose BMI is below 30 to the respondents whose BMI is not?

smart_cle1_sh |>

group_by(bmi < 30) |>

summarize(mean(drinks_wk), median(drinks_wk),

q75 = quantile(drinks_wk, 0.75))

# A tibble: 2 × 4

`bmi < 30` `mean(drinks_wk)` `median(drinks_wk)` q75

<lgl> <dbl> <dbl> <dbl>

1 FALSE 1.67 0.23 1.17

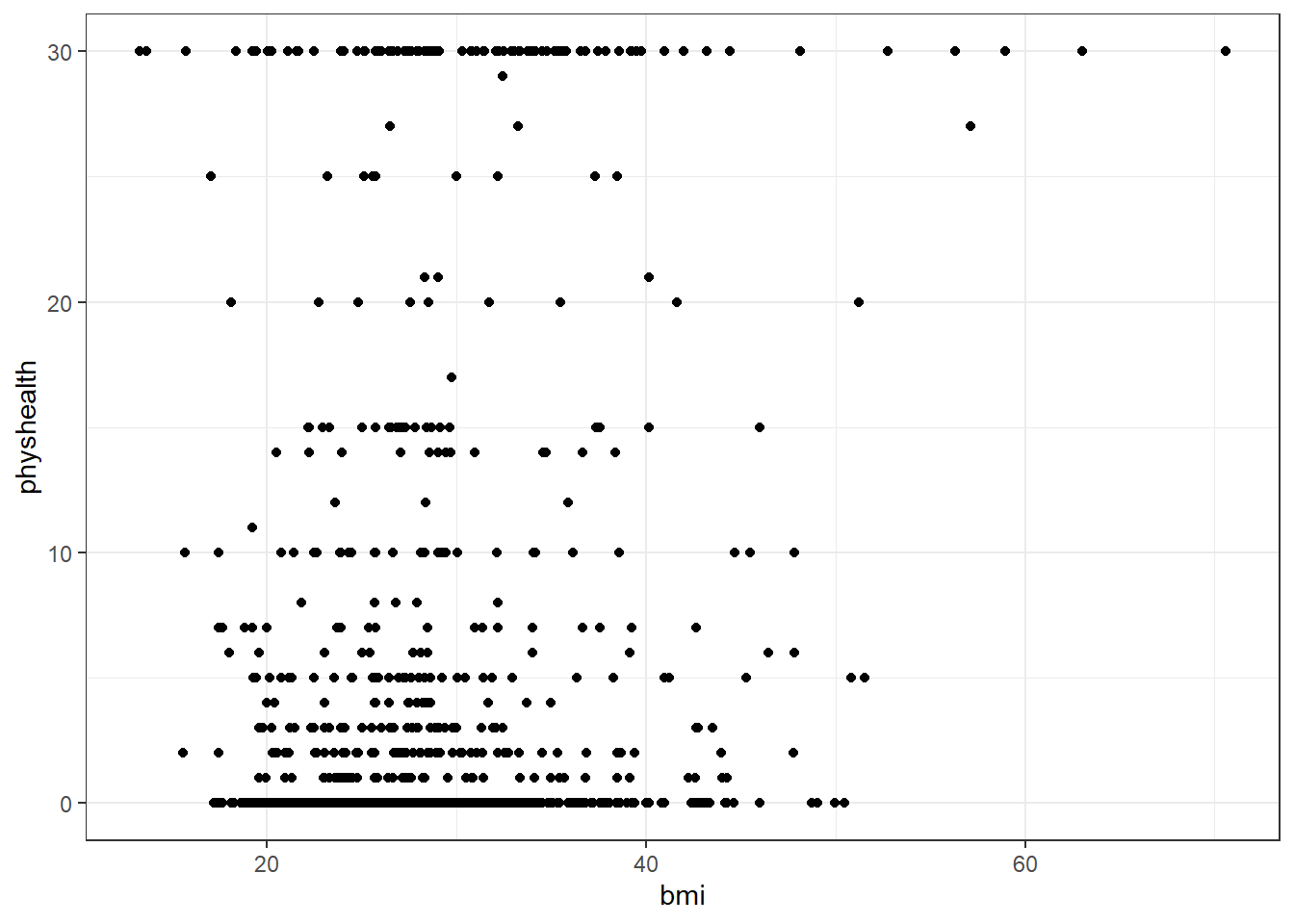

2 TRUE 2.80 0.23 2.8 8.5 Can bmi predict physhealth?

We’ll start with an effort to predict physhealth using bmi. A natural graph would be a scatterplot.

ggplot(data = smart_cle1_sh, aes(x = bmi, y = physhealth)) +

geom_point()

A good question to ask ourselves here might be: “In what BMI range can we make a reasonable prediction of physhealth?”

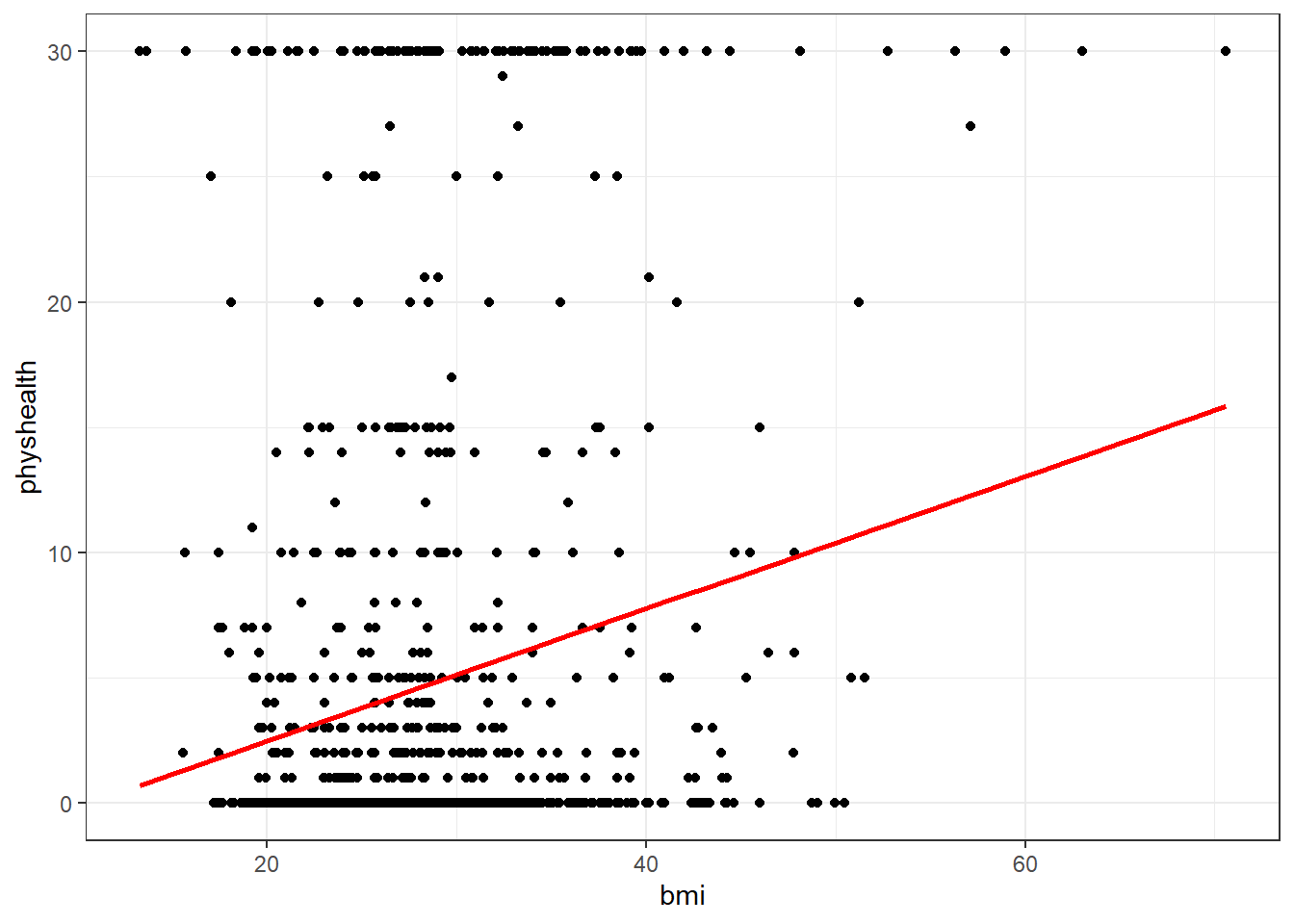

Now, we might take the plot above and add a simple linear model …

ggplot(data = smart_cle1_sh, aes(x = bmi, y = physhealth)) +

geom_point() +

geom_smooth(method = "lm", se = FALSE, col = "red")

`geom_smooth()` using formula = 'y ~ x'

which shows the same least squares regression model that we can fit with the lm command.

8.5.1 Fitting a Simple Regression Model

model_A <- lm(physhealth ~ bmi, data = smart_cle1_sh)

model_A

Call:

lm(formula = physhealth ~ bmi, data = smart_cle1_sh)

Coefficients:

(Intercept) bmi

-2.6497 0.2559

summary(model_A)

Call:

lm(formula = physhealth ~ bmi, data = smart_cle1_sh)

Residuals:

Min 1Q Median 3Q Max

-10.2641 -4.5596 -3.5897 -0.7778 29.2460

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -2.64970 1.22523 -2.163 0.0308 *

bmi 0.25592 0.04217 6.069 1.76e-09 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 9.031 on 1131 degrees of freedom

Multiple R-squared: 0.03154, Adjusted R-squared: 0.03068

F-statistic: 36.83 on 1 and 1131 DF, p-value: 1.757e-09

confint(model_A, level = 0.95)

2.5 % 97.5 %

(Intercept) -5.0536859 -0.2457115

bmi 0.1731799 0.3386617

The model coefficients can be obtained by printing the model object, and the summary function provides several useful descriptions of the model’s residuals, its statistical significance, and quality of fit.

8.5.2 Model Summary for a Simple (One-Predictor) Regression

The fitted model predicts physhealth using a prediction equation we can read off from the model coefficient estimates. Specifically, we have:

coef(model_A)

(Intercept) bmi

-2.6496987 0.2559208

so the equation is physhealth = -2.65 + 0.256 bmi.

Each of the 1133 respondents included in the smart_cle1_sh data makes a contribution to this model.

8.5.2.1 Residuals

Suppose Harry is one of the people in that group, and Harry’s data is bmi = 20, and physhealth = 3.

- Harry’s observed value of physhealth is just the value we have in the data for them, in this case, observed physhealth = 3 for Harry.

- Harry’s fitted or predicted physhealth value is the result of calculating -2.65 + 0.256 bmi for Harry. So, if Harry’s BMI was 20, then Harry’s predicted physhealth value is -2.65 + 0.256 (20) = 2.47.

- The residual for Harry is then his observed outcome minus his fitted outcome, so Harry has a residual of 3 - 2.47 = 0.53.

- Graphically, a residual represents vertical distance between the observed point and the fitted regression line.

- Points above the regression line will have positive residuals, and points below the regression line will have negative residuals. Points on the line have zero residuals.
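The fitted value and residual for a single respondent can be reproduced by hand. The sketch below assumes only the coefficient estimates printed by coef(model_A) and Harry’s values as given in the text; the object names are made up for illustration.

```r
# Coefficient estimates copied from the coef(model_A) output
b0 <- -2.6496987   # intercept
b1 <-  0.2559208   # slope for bmi

# Harry's data, as given in the text
harry_bmi <- 20
harry_physhealth <- 3

# Fitted value: plug Harry's bmi into the prediction equation
fitted_harry <- b0 + b1 * harry_bmi

# Residual: observed outcome minus fitted outcome
resid_harry <- harry_physhealth - fitted_harry

round(c(fitted = fitted_harry, residual = resid_harry), 2)
```

Harry’s residual is positive, so his point would sit above the fitted regression line.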

The residuals are summarized at the top of the summary output for a linear model.

summary(model_A)

Call:

lm(formula = physhealth ~ bmi, data = smart_cle1_sh)

Residuals:

Min 1Q Median 3Q Max

-10.2641 -4.5596 -3.5897 -0.7778 29.2460

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -2.64970 1.22523 -2.163 0.0308 *

bmi 0.25592 0.04217 6.069 1.76e-09 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 9.031 on 1131 degrees of freedom

Multiple R-squared: 0.03154, Adjusted R-squared: 0.03068

F-statistic: 36.83 on 1 and 1131 DF, p-value: 1.757e-09

- The mean residual will always be zero in an ordinary least squares model, but a five number summary of the residuals is provided by the summary, as is an estimated standard deviation of the residuals (called here the Residual standard error.)

- In the smart_cle1_sh data, the minimum residual was -10.26, so for one subject, the observed value was 10.26 days smaller than the predicted value. This means that the prediction was 10.26 days too large for that subject.

- Similarly, the maximum residual was 29.25 days, so for one subject the prediction was 29.25 days too small. Not a strong performance.

- In a least squares model, the residuals are assumed to follow a Normal distribution, with mean zero, and standard deviation (for the smart_cle1_sh data) of about 9.0 days. We know this because the residual standard error is specified as 9.031 later in the linear model output. Thus, by the definition of a Normal distribution, we’d expect

- about 68% of the residuals to be between -9 and +9 days,

- about 95% of the residuals to be between -18 and +18 days,

- nearly all (99.7%) of the residuals to be between -27 and +27 days.
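Those three percentages come straight from the Normal distribution, and can be checked with pnorm. This is a minimal sketch that assumes the residual standard error of 9.031 reported by summary(model_A); the helper prob_within is a made-up name. It shows what the Normal assumption implies, not what the observed residuals actually do.

```r
sigma <- 9.031  # residual standard error from summary(model_A)

# Probability that a Normal(0, sigma) value falls within +/- k days of zero
prob_within <- function(k) {
  pnorm(k, mean = 0, sd = sigma) - pnorm(-k, mean = 0, sd = sigma)
}

# Percentages implied for the +/- 9, 18 and 27 day ranges
round(100 * prob_within(c(9, 18, 27)), 1)
```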

8.5.2.2 Coefficients section

The summary for a linear model shows Estimates, Standard Errors, t values and p values for each coefficient fit.

summary(model_A)

Call:

lm(formula = physhealth ~ bmi, data = smart_cle1_sh)

Residuals:

Min 1Q Median 3Q Max

-10.2641 -4.5596 -3.5897 -0.7778 29.2460

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -2.64970 1.22523 -2.163 0.0308 *

bmi 0.25592 0.04217 6.069 1.76e-09 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 9.031 on 1131 degrees of freedom

Multiple R-squared: 0.03154, Adjusted R-squared: 0.03068

F-statistic: 36.83 on 1 and 1131 DF, p-value: 1.757e-09

- The Estimates are the point estimates of the intercept and slope of bmi in our model.

- In this case, our estimated slope is 0.256, which implies that if Harry’s BMI is 20 and Sally’s BMI is 21, we predict that Sally’s physhealth will be 0.256 days larger than Harry’s.

- The Standard Errors are also provided for each estimate. We can create rough 95% uncertainty intervals for these estimated coefficients by adding and subtracting two standard errors from each coefficient, or we can get a slightly more accurate answer with the confint function.

- Here, the 95% uncertainty interval for the slope of bmi is estimated to be (0.17, 0.34). This is a good measure of the uncertainty in the slope that is captured by our model. We are 95% confident in the process of building this interval, but this doesn’t mean we’re 95% sure that the true slope is actually in that interval.
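As a quick check on the "two standard errors" rule of thumb, the sketch below compares it with an interval built from the t distribution. It assumes only the slope estimate, standard error and residual degrees of freedom printed in the summary output; the object names are illustrative.

```r
est <- 0.25592   # slope estimate for bmi from summary(model_A)
se  <- 0.04217   # its standard error
df  <- 1131      # residual degrees of freedom

# Rough interval: estimate plus or minus two standard errors
rough <- est + c(-2, 2) * se

# t-based interval: replace 2 with the 97.5th percentile of t on 1131 df
exact <- est + c(-1, 1) * qt(0.975, df) * se

round(rbind(rough, exact), 3)
```

With this many degrees of freedom the t multiplier is very close to 2, so the two intervals nearly coincide, and the t-based one should agree with the confint(model_A) result for bmi up to rounding of the inputs.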

Also available are a t value (just the Estimate divided by the Standard Error) and the appropriate p value for testing the null hypothesis that the true value of the coefficient is 0 against a two-tailed alternative.

- If a slope coefficient is statistically detectably different from 0, this implies that 0 will not be part of the uncertainty interval obtained through confint.

- If the slope was zero, it would suggest that bmi would add no predictive value to the model. But that’s unlikely here.
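The t value and its two-tailed p value can also be rebuilt from the printed estimate and standard error. This is a sketch using the rounded values shown in the summary output, so the result should match the reported t value of 6.069 and p value of 1.76e-09 only approximately.

```r
est <- 0.25592   # slope estimate for bmi
se  <- 0.04217   # standard error of the slope
df  <- 1131      # residual degrees of freedom

# t value: the estimate divided by its standard error
t_value <- est / se

# Two-tailed p value for the null hypothesis that the true slope is 0
p_value <- 2 * pt(abs(t_value), df = df, lower.tail = FALSE)

c(t = t_value, p = p_value)
```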

If the bmi slope coefficient is associated with a small p value, as in the case of our model_A, it suggests that the model including bmi is statistically detectably better at predicting physhealth than the model without bmi.

- Without bmi our model_A would become an intercept-only model, in this case, which would predict the mean physhealth for everyone, regardless of any other information.

8.5.2.3 Model Fit Summaries

summary(model_A)

Call:

lm(formula = physhealth ~ bmi, data = smart_cle1_sh)

Residuals:

Min 1Q Median 3Q Max

-10.2641 -4.5596 -3.5897 -0.7778 29.2460

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -2.64970 1.22523 -2.163 0.0308 *

bmi 0.25592 0.04217 6.069 1.76e-09 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 9.031 on 1131 degrees of freedom

Multiple R-squared: 0.03154, Adjusted R-squared: 0.03068

F-statistic: 36.83 on 1 and 1131 DF, p-value: 1.757e-09

The summary of a linear model also displays:

- The residual standard error and associated degrees of freedom for the residuals.

- For a simple (one-predictor) least squares regression like this, the residual degrees of freedom will be the sample size minus 2.

- The multiple R-squared (or coefficient of determination)

- This is interpreted as the proportion of variation in the outcome (physhealth) accounted for by the model, and will always fall between 0 and 1 as a result.

- Our model_A accounts for a mere 3.2% of the variation in physhealth.

- The Adjusted R-squared value “adjusts” for the size of our model in terms of the number of coefficients included in the model.

- The adjusted R-squared will always be smaller than the Multiple R-squared.

- We still hope to find models with relatively large adjusted \(R^2\) values.

- In particular, we hope to find models where the adjusted \(R^2\) isn’t substantially less than the Multiple R-squared.

- The adjusted R-squared is usually a better estimate of likely performance of our model in new data than is the Multiple R-squared.

- The adjusted R-squared result is no longer interpretable as a proportion of anything - in fact, it can fall below 0.

- We can obtain the adjusted \(R^2\) from the raw \(R^2\), the number of observations N and the number of predictors p included in the model, as follows:

\[ R^2_{adj} = 1 - \frac{(1 - R^2)(N - 1)}{N - p - 1}, \]

- The F statistic and p value from a global ANOVA test of the model.

- Obtaining a statistically significant result here is usually pretty straightforward, since the comparison is between our model, and a model which simply predicts the mean value of the outcome for everyone.

- In a simple (one-predictor) linear regression like this, the t statistic for the slope is just the square root of the F statistic, and the resulting p values for the slope’s t test and for the global F test will be identical.

- To see the complete ANOVA F test for this model, we can run anova(model_A).
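Two of the relationships described in this list can be verified by hand from the printed summary. The sketch below assumes the rounded Multiple R-squared and slope t value shown in the output, with N = 1133 observations and p = 1 predictor; the object names are illustrative.

```r
r2 <- 0.03154   # Multiple R-squared from summary(model_A)
n  <- 1133      # observations (1131 residual df plus 2 coefficients)
p  <- 1         # number of predictors

# Adjusted R-squared from the formula in the text
adj_r2 <- 1 - (1 - r2) * (n - 1) / (n - p - 1)
round(adj_r2, 5)

# In a one-predictor model, the squared slope t value equals the F statistic
t_slope <- 6.069
round(t_slope^2, 2)
```

Both results should line up with the Adjusted R-squared (0.03068) and F statistic (36.83) in the summary, up to rounding of the inputs.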

anova(model_A)

Analysis of Variance Table

Response: physhealth

Df Sum Sq Mean Sq F value Pr(>F)

bmi 1 3004 3003.58 36.83 1.757e-09 ***

Residuals 1131 92237 81.55

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

8.5.3 Using the broom package

The broom package has three functions of particular use in a linear regression model:

8.5.3.1 The tidy function

tidy builds a data frame/tibble containing information about the coefficients in the model, their standard errors, t statistics and p values.

tidy(model_A)

# A tibble: 2 × 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 (Intercept) -2.65 1.23 -2.16 0.0308

2 bmi 0.256 0.0422 6.07 0.00000000176

It’s often useful to include other summaries in this tidying, for instance:

tidy(model_A, conf.int = TRUE, conf.level = 0.9) |>

select(term, estimate, conf.low, conf.high)

# A tibble: 2 × 4

term estimate conf.low conf.high

<chr> <dbl> <dbl> <dbl>

1 (Intercept) -2.65 -4.67 -0.633

2 bmi 0.256 0.186 0.325

8.5.3.2 The glance function

glance builds a data frame/tibble containing summary statistics about the model, including

- the (raw) multiple \(R^2\) and adjusted \(R^2\)

- sigma which is the residual standard error

- the F statistic, p.value, model df and df.residual associated with the global ANOVA test, plus

- several statistics that will be useful in comparing models down the line:

- the model’s log likelihood function value, logLik

- the model’s Akaike’s Information Criterion value, AIC

- the model’s Bayesian Information Criterion value, BIC

- and the model’s deviance statistic
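The logLik, AIC and BIC values are closely linked, which the following sketch illustrates in base R. It uses a stand-in model fit to the built-in mtcars data rather than smart_cle1 (an assumption made so the block runs on its own), and rebuilds AIC and BIC from the log likelihood.

```r
# Hypothetical stand-in model fit to the built-in mtcars data (not smart_cle1)
fit <- lm(mpg ~ wt, data = mtcars)

ll <- logLik(fit)       # log likelihood, carrying a "df" attribute
k  <- attr(ll, "df")    # parameters counted: intercept, slope and sigma
n  <- nobs(fit)         # number of observations used in the fit

# AIC and BIC rebuilt from the log likelihood
aic_by_hand <- -2 * as.numeric(ll) + 2 * k
bic_by_hand <- -2 * as.numeric(ll) + log(n) * k

# Each pair should match
c(by_hand = aic_by_hand, built_in = AIC(fit))
c(by_hand = bic_by_hand, built_in = BIC(fit))
```

The same arithmetic is consistent with the rounded values that glance reports for model_A: a log likelihood of about -4100 with three parameters gives an AIC of about 8206.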

glance(model_A)# A tibble: 1 × 12

r.squared adj.r.squared sigma statistic p.value df logLik AIC BIC

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 0.0315 0.0307 9.03 36.8 0.00000000176 1 -4100. 8206. 8221.

# ℹ 3 more variables: deviance <dbl>, df.residual <int>, nobs <int>

8.5.3.3 The augment function

augment builds a data frame/tibble which adds fitted values, residuals and other diagnostic summaries that describe each observation to the original data used to fit the model, and this includes

- .fitted and .resid, the fitted and residual values, in addition to

- .hat, the leverage value for this observation

- .cooksd, the Cook’s distance measure of influence for this observation

- .std.resid, the standardized residual (think of this as a z-score - a measure of the residual divided by its associated standard deviation .sigma)

- and .se.fit which will help us generate prediction intervals for the model downstream

Note that each of the new columns begins with . to avoid overwriting any data.

head(augment(model_A))

# A tibble: 6 × 8

physhealth bmi .fitted .resid .hat .sigma .cooksd .std.resid

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 4 27.9 4.50 -0.503 0.000886 9.03 0.00000138 -0.0557

2 0 23.0 3.25 -3.25 0.00149 9.03 0.0000971 -0.360

3 0 26.9 4.24 -4.24 0.000927 9.03 0.000102 -0.470

4 0 26.5 4.13 -4.13 0.000956 9.03 0.000100 -0.458

5 0 24.2 3.56 -3.56 0.00125 9.03 0.0000971 -0.394

6 2 27.7 4.45 -2.45 0.000891 9.03 0.0000328 -0.271

For more on the broom package, you may want to look at this vignette.
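To see how the standardized residual relates to the other augment columns, here is a sketch that recomputes it for the first row of the output. It assumes the usual internally standardized definition (the one rstandard uses for linear models), and works from the rounded values printed in the output, so agreement is approximate.

```r
# Values copied (rounded) from the first row of the augment(model_A) output
resid_1 <- -0.503     # .resid
hat_1   <- 0.000886   # .hat (leverage)
sigma   <- 9.031      # residual standard error from summary(model_A)

# Standardized residual: residual / (sigma * sqrt(1 - leverage))
std_resid_1 <- resid_1 / (sigma * sqrt(1 - hat_1))
round(std_resid_1, 4)
```

Because the leverage values here are tiny, each standardized residual is almost exactly the raw residual divided by the residual standard error.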

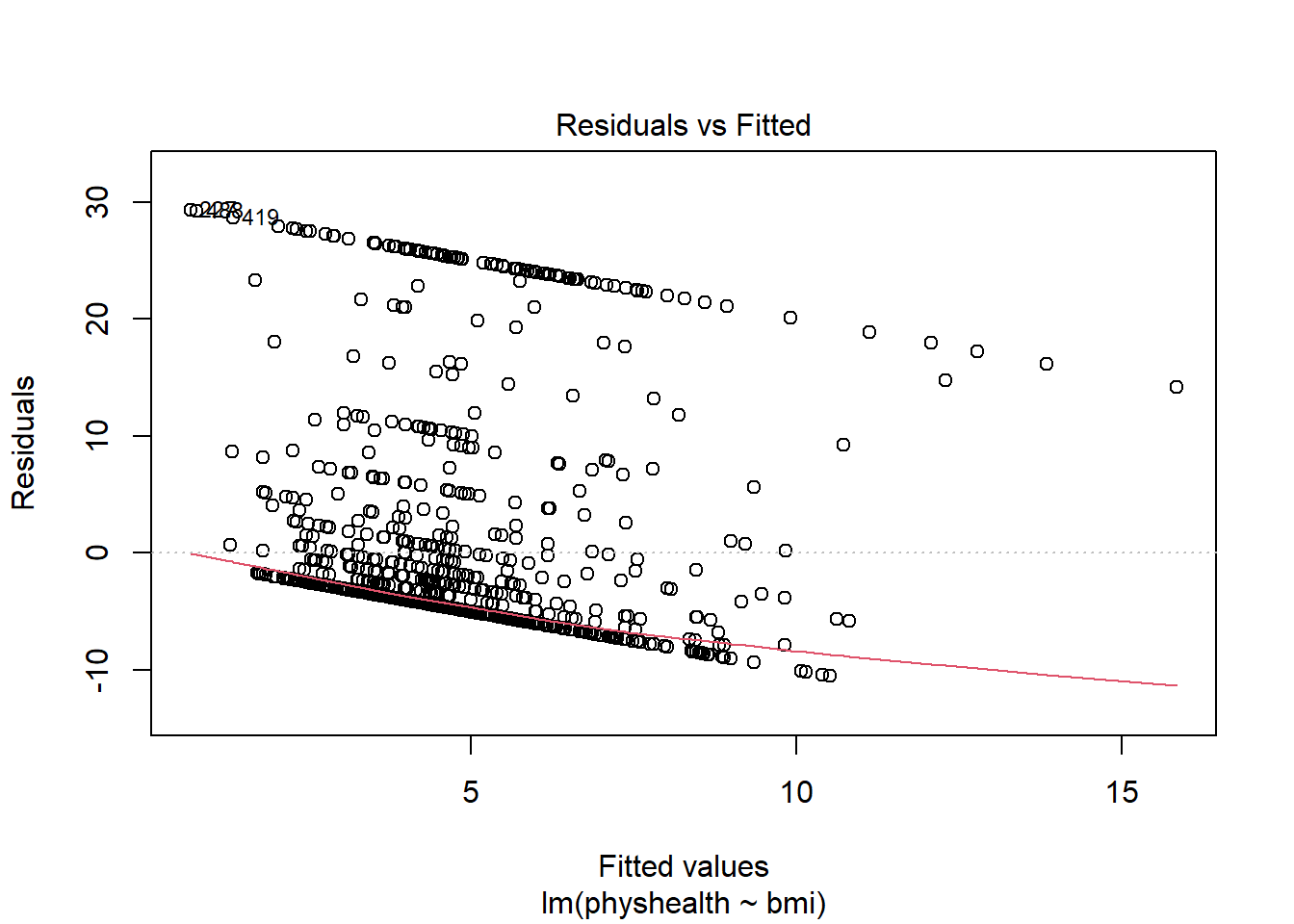

8.5.4 How does the model do? (Residuals vs. Fitted Values)

- Remember that the \(R^2\) value was about 3.2%.

plot(model_A, which = 1)

This is a plot of residuals vs. fitted values. The goal here is for this plot to look like a random scatter of points, perhaps like a “fuzzy football”, and that’s not what we have. Why?

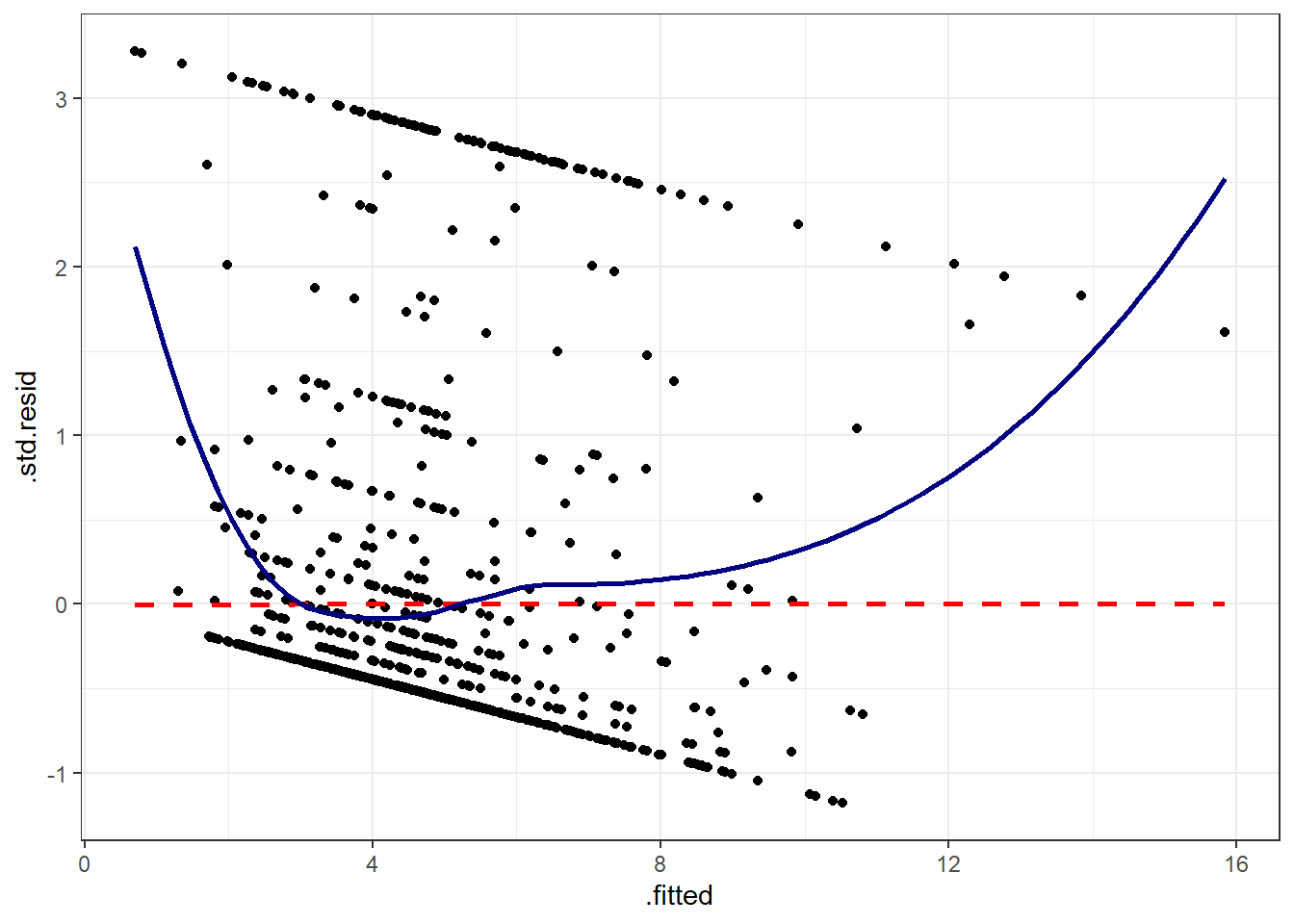

If you prefer, here’s a ggplot2 version of a similar plot, now looking at standardized residuals instead of raw residuals, and adding a loess smooth and a linear fit to the result.

ggplot(augment(model_A), aes(x = .fitted, y = .std.resid)) +

geom_point() +

geom_smooth(method = "lm", se = FALSE, col = "red", linetype = "dashed") +

geom_smooth(method = "loess", se = FALSE, col = "navy") +

theme_bw()

`geom_smooth()` using formula = 'y ~ x'

`geom_smooth()` using formula = 'y ~ x'

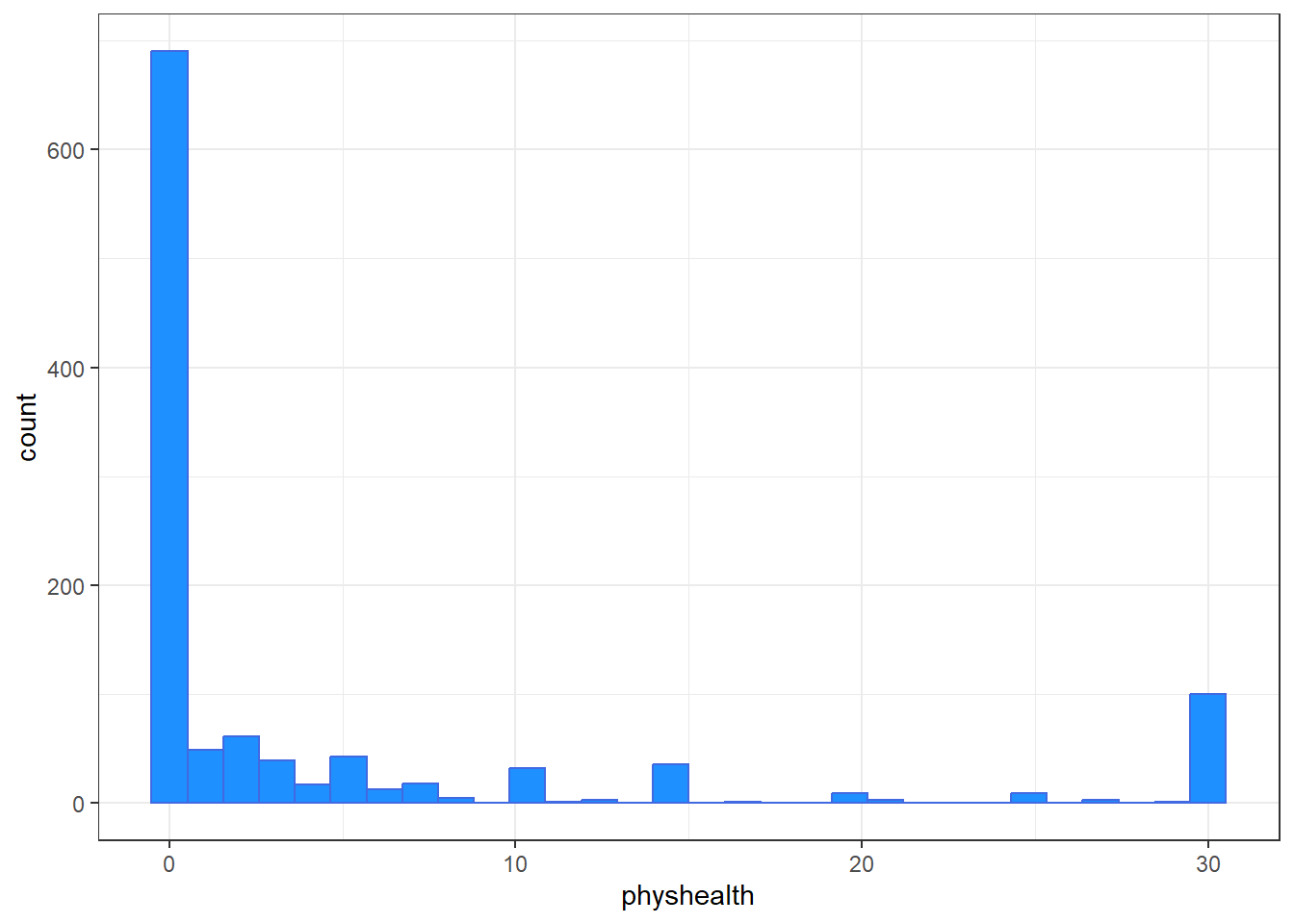

The problem we’re having here becomes, I think, a little more obvious if we look at what we’re predicting. Does physhealth look like a good candidate for a linear model?

ggplot(smart_cle1_sh, aes(x = physhealth)) +

geom_histogram(bins = 30, fill = "dodgerblue",

color = "royalblue")

smart_cle1_sh |> count(physhealth == 0, physhealth == 30)

# A tibble: 3 × 3

`physhealth == 0` `physhealth == 30` n

<lgl> <lgl> <int>

1 FALSE FALSE 322

2 FALSE TRUE 101

3 TRUE FALSE 710

No matter what model we fit, if we are predicting physhealth, and most of the data are values of 0 and 30, we have limited variation in our outcome, and so our linear model will be somewhat questionable just on that basis.
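To put a number on "most of the data", the counts in the table above can be turned into a share. This sketch uses only those three printed counts; the object names are illustrative.

```r
# Counts copied from the count(physhealth == 0, physhealth == 30) output
n_zero   <- 710   # respondents reporting 0 days
n_thirty <- 101   # respondents reporting 30 days
n_other  <- 322   # respondents reporting some value in between

n_total <- n_zero + n_thirty + n_other

# Share of respondents sitting at the floor (0) or the ceiling (30)
share_at_bounds <- (n_zero + n_thirty) / n_total
round(100 * share_at_bounds, 1)
```

Roughly seven in ten respondents sit at one of the two extremes, which is why a straight line has so little to work with.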

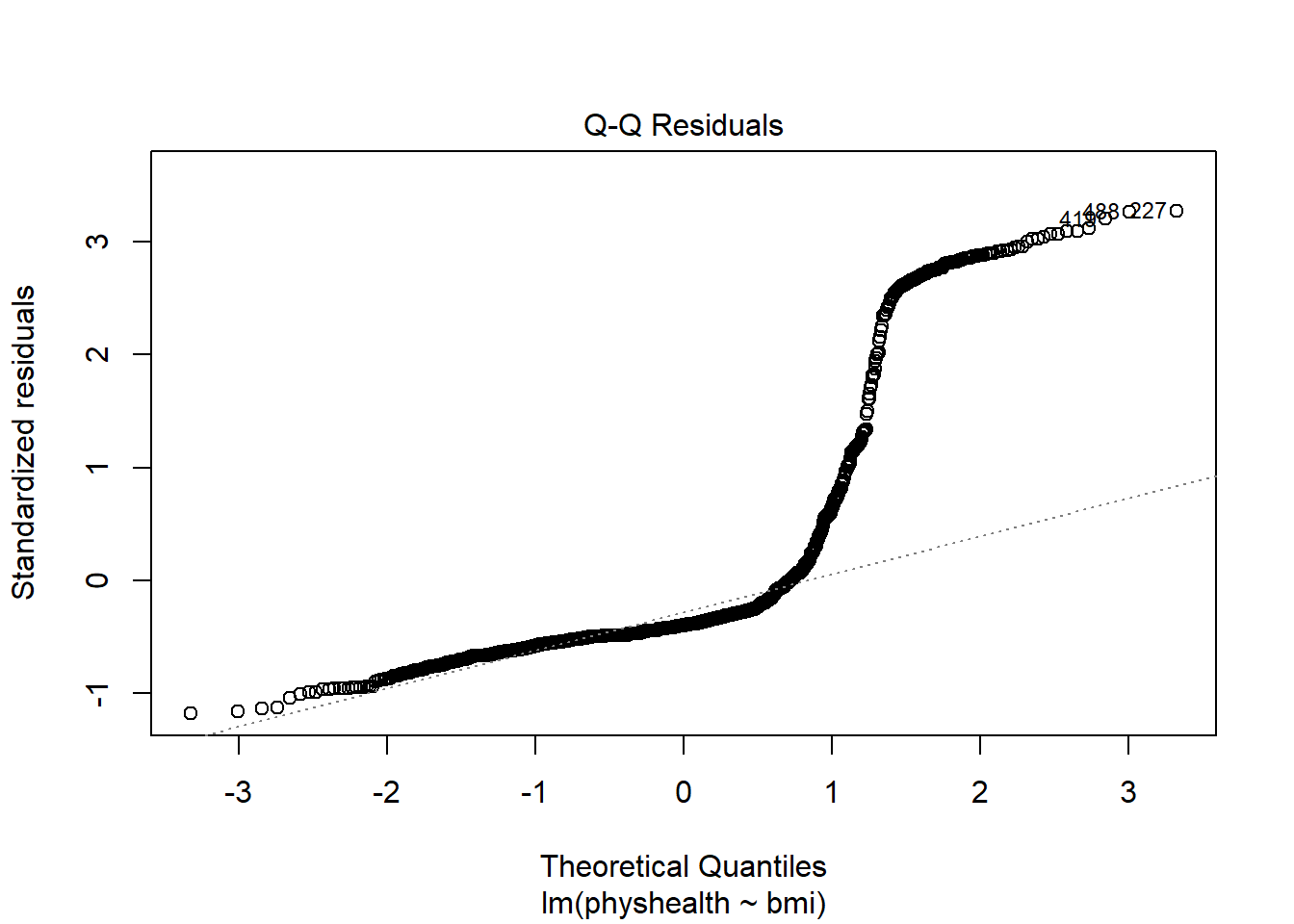

A normal Q-Q plot of the standardized residuals for our model_A shows this problem, too.

plot(model_A, which = 2)

We’re going to need a method to deal with this sort of outcome, that has both a floor and a ceiling. We’ll get there eventually, but linear regression alone doesn’t look promising.

All right, so that didn’t go anywhere great. We’ll try again, with a new outcome, in the next chapter.